Geospatial datasets are critical for decision makers across industries, from life sciences to social sciences: many organizations and data scientists rely on geospatial data analysis to generate impactful insights and support informed decisions.

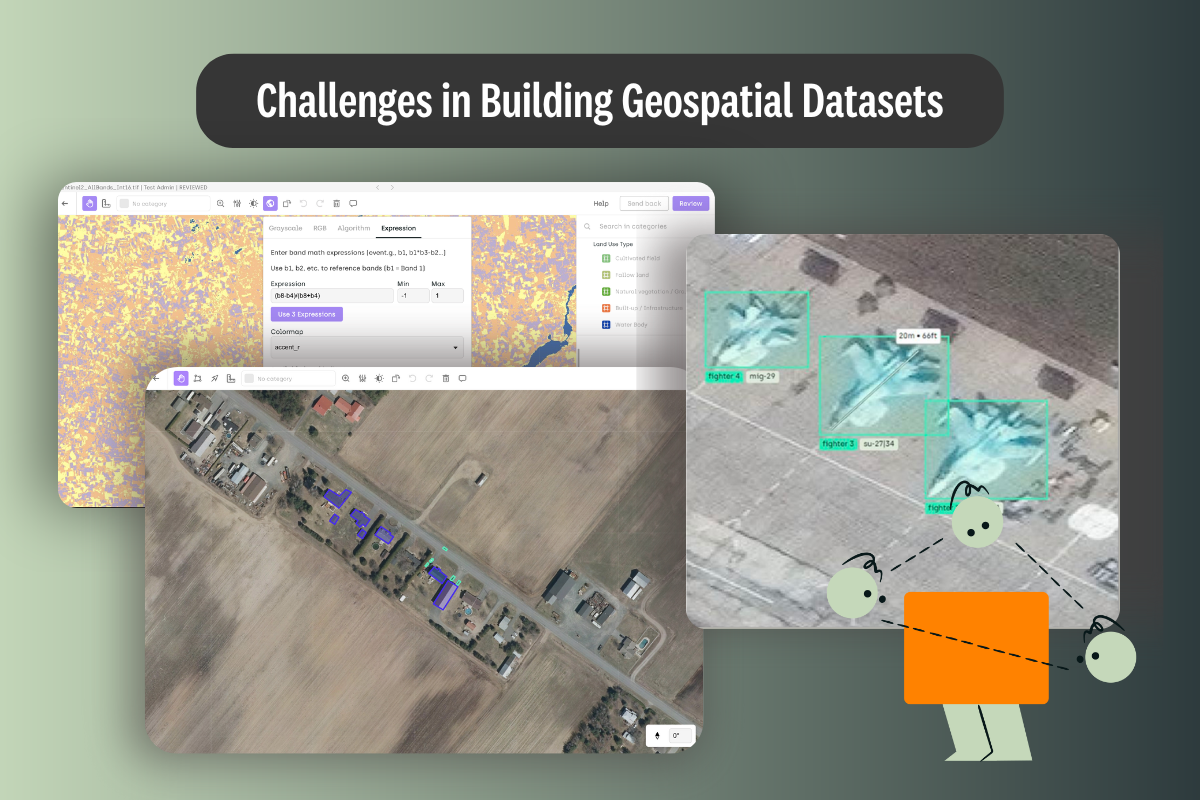

However, building reliable geospatial datasets for AI training poses unique technical challenges that differ significantly from those in traditional computer vision applications.

This article examines the technical obstacles and considerations that machine learning engineers face when developing geospatial AI systems. It draws on our recent webinar with Enabled Intelligence on building reliable geospatial datasets, as well as broader industry research.

The Foundational Challenge: Data Pipeline and Format Interoperability

The most fundamental and persistent challenge in building geospatial AI systems lies in bridging the deep chasm between geospatial data formats and AI training requirements. This "geospace-to-pixel-space" translation problem forms the bedrock of any GeoAI workflow, with its complexity dictating the feasibility, accuracy, and scalability of the entire system.

The Bi-Directional Translation Problem

Geospatial data comes in specialized formats such as GeoTIFF and NITF for imagery and KML for vector features. These files carry rich metadata, including coordinate reference systems, projections, georeferencing information, and feature attributes. This metadata is invaluable for spatial analysis, but it creates fundamental incompatibilities with standard AI training pipelines.

AI computer vision models train in pixel space, not geospace. This creates a critical bi-directional conversion challenge that pervades every aspect of the data pipeline:

- Forward Translation Complexity: Labels must be created using geocoordinates for spatial accuracy, then precisely converted to pixel coordinates for model training. This conversion requires sophisticated handling of coordinate system transformations, projection changes, and geometric corrections.

- Reverse Translation Precision: Model outputs in pixel coordinates must be translated back to geocoordinates for practical geospatial applications. Any accumulated errors in this process render predictions spatially inaccurate when mapped to real-world locations.
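Both translations can be sketched with the six-coefficient affine geotransform convention used by GDAL. That convention maps pixel (column, row) positions to map coordinates. This is a minimal illustration under two assumptions: labels and image share one projected coordinate reference system, and no terrain or sensor-model correction is applied. Reprojecting between systems, for example with a library such as pyproj, would have to happen first. The transform values are hypothetical.

```python
from typing import Sequence, Tuple

# GDAL-style geotransform (gt[0]..gt[5]):
#   x_map = gt[0] + col * gt[1] + row * gt[2]
#   y_map = gt[3] + col * gt[4] + row * gt[5]
# (col, row) = (0, 0) is the top-left corner of the top-left pixel.
GeoTransform = Sequence[float]


def pixel_to_geo(gt: GeoTransform, col: float, row: float) -> Tuple[float, float]:
    """Reverse translation: a model output in pixel space -> map coordinates."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def geo_to_pixel(gt: GeoTransform, x: float, y: float) -> Tuple[float, float]:
    """Forward translation: a label vertex in map coordinates -> fractional pixel."""
    det = gt[1] * gt[5] - gt[2] * gt[4]
    if det == 0:
        raise ValueError("geotransform is not invertible")
    dx, dy = x - gt[0], y - gt[3]
    # Invert the 2x2 linear part [[gt1, gt2], [gt4, gt5]] of the affine map.
    col = (gt[5] * dx - gt[2] * dy) / det
    row = (-gt[4] * dx + gt[1] * dy) / det
    return col, row


# Hypothetical north-up UTM image with 0.5 m pixels.
gt = (500000.0, 0.5, 0.0, 4649776.0, 0.0, -0.5)
col, row = geo_to_pixel(gt, 500100.0, 4649726.0)  # label vertex -> pixel space
x, y = pixel_to_geo(gt, col, row)                  # prediction -> map space
print(col, row, x, y)
```

In this convention the pixel origin is a corner, so the center of pixel (c, r) sits at (c + 0.5, r + 0.5). Mixing up corner and center conventions shifts every label by half a pixel. This is exactly the kind of small error that compounds through a pipeline.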

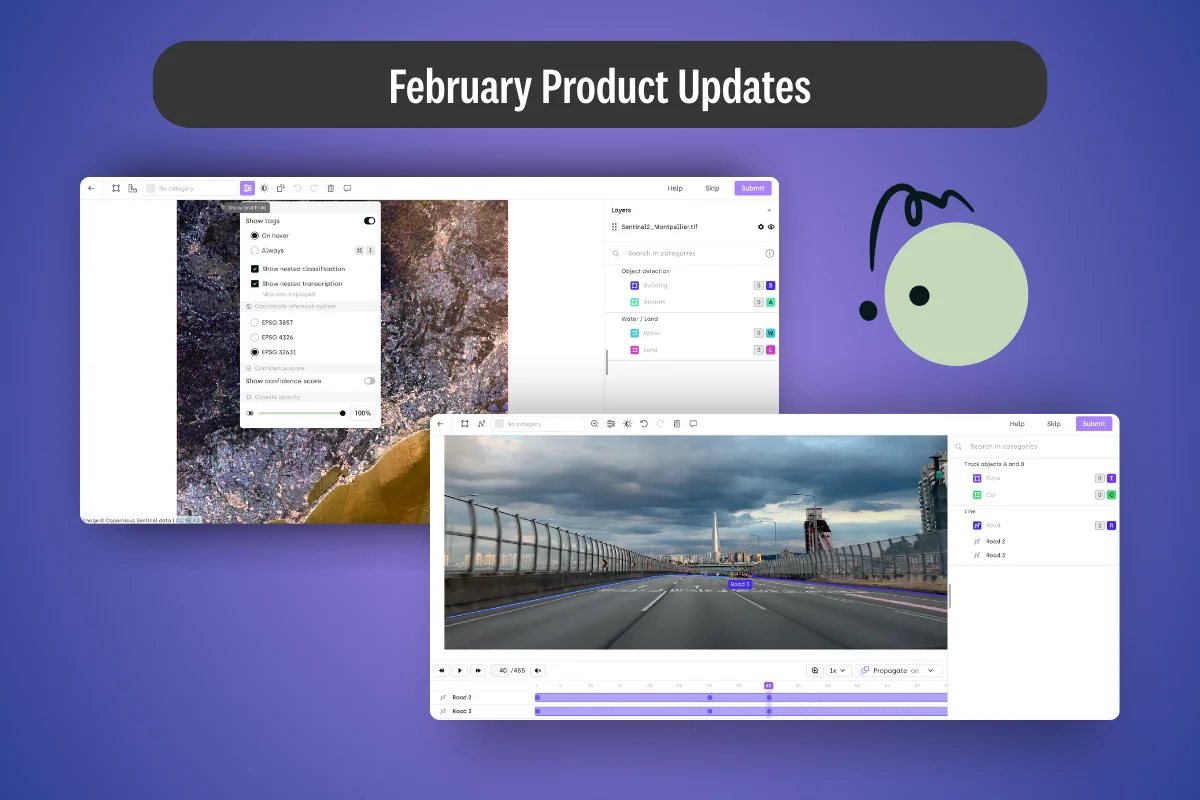

Modern annotation platforms address this challenge through native support for geospatial workflows. We enable labeling in geocoordinates while automatically handling the conversion to pixel coordinates for AI training. This dual-coordinate approach preserves the spatial integrity essential for geospatial analysis while maintaining compatibility with standard machine learning pipelines.

However, even with automated conversion tools, every step in this translation chain requires strategic consideration of accuracy versus efficiency trade-offs. An inaccurate geotransform, mismatched projection, or incorrect interpolation method during resampling introduces spatial inaccuracies that compound through the pipeline. The challenge becomes particularly acute for off-nadir imagery where perspective distortions create complex 3D-to-2D projection effects, requiring teams to choose between accepting geometric distortions or investing in specialized correction algorithms based on their application’s precision requirements.

Format-Specific Technical Barriers

Standard GeoTIFF Limitations: Many traditional GeoTIFFs store pixels in horizontal strips without internal overviews, which creates significant inefficiencies in cloud-based workflows. Accessing a small region often means downloading far more data than needed, sometimes the entire file, creating bandwidth and latency bottlenecks. GeoTIFF and COG are raster formats: they represent geographic information as pixel grids. Vector data, by contrast, models features as points, lines, and polygons. Pipelines that combine raw raster and vector data typically need specialized tools to process both efficiently.

Cloud Optimized GeoTIFF (COG) Evolution: The industry has responded to these limitations with Cloud Optimized GeoTIFF. A COG organizes pixels into internal tiles and overviews, so clients can fetch only the regions and zoom levels they need through HTTP range requests. This enables dynamic tiling and on-the-fly rendering of large geospatial datasets. We now provide native COG support, allowing teams to work with files up to 5GB while maintaining responsive performance through intelligent caching and progressive loading. This significantly reduces the bandwidth bottlenecks that previously hindered cloud-based geospatial workflows.
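The efficiency gain from internal tiling comes down to simple index arithmetic. A reader only needs the tiles that intersect the requested pixel window. The sketch below assumes a hypothetical 512 × 512 internal tile size; real COGs declare theirs in the TIFF TileWidth and TileLength tags, and libraries such as GDAL or rasterio issue the actual range requests.

```python
import math
from typing import List, Tuple


def tiles_for_window(col_off: int, row_off: int, width: int, height: int,
                     tile_size: int = 512) -> List[Tuple[int, int]]:
    """(tile_row, tile_col) indices of every internal tile the window touches."""
    first_col, last_col = col_off // tile_size, (col_off + width - 1) // tile_size
    first_row, last_row = row_off // tile_size, (row_off + height - 1) // tile_size
    return [(r, c)
            for r in range(first_row, last_row + 1)
            for c in range(first_col, last_col + 1)]


def total_tiles(image_width: int, image_height: int, tile_size: int = 512) -> int:
    """Number of internal tiles in the full-resolution image."""
    return math.ceil(image_width / tile_size) * math.ceil(image_height / tile_size)


needed = tiles_for_window(1000, 1000, 200, 200)
print(f"{len(needed)} of {total_tiles(40_000, 40_000)} tiles needed")
```

For a 200 × 200 pixel window in a 40,000 × 40,000 image, only 4 of 6,241 tiles are read. A strip-organized file, by contrast, forces a reader to pull full-width rows.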

NITF Complexity: NITF (National Imagery Transmission Format) files are highly complex multi-segment containers. They package imagery with extensive metadata in Tagged Record Extensions (TREs) and Data Extension Segments (DESs). Parsing them requires specialized capabilities that most standard AI tools lack.

Metadata Integration Strategy: While COG and modern platforms address many format compatibility issues, the rich geospatial metadata essential for spatial accuracy requires strategic integration choices with AI frameworks. Teams must balance preserving complete spatial context against maintaining compatibility with standard ML pipelines, often choosing to implement custom engineering solutions that best serve their specific application requirements.

Data Heterogeneity: The Persistent Domain Shift Challenge

Unlike the relatively uniform world of consumer photos, Earth observation data exhibits immense heterogeneity across multiple dimensions. Part of this heterogeneity comes from the variety of spatial data in use, including remotely sensed imagery, lidar, and video. Each variation introduces domain shift, where the statistical properties of training data differ from those of inference data, creating fundamental reliability challenges. Analyzing these diverse data sources carefully is essential for understanding and mitigating these challenges.

Resolution Dependencies and Ground Sample Distance Sensitivity

A model’s extreme sensitivity to Ground Sample Distance (GSD) represents one of the most significant technical barriers. Consider an object detector trained on 0.3-meter resolution imagery, where a typical car (roughly 4.5 m by 1.8 m) spans about 15 by 6 pixels. It will fail catastrophically when applied to 5-meter imagery, where the same car covers less than a single pixel.
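The GSD sensitivity follows from simple arithmetic: an object's extent in pixels is its ground size divided by the GSD. This sketch assumes a nadir, north-up image with square pixels. It uses an approximate passenger-car footprint of 4.5 m by 1.8 m as an illustrative value.

```python
from typing import Tuple


def footprint_pixels(length_m: float, width_m: float, gsd_m: float) -> Tuple[float, float]:
    """Object extent in pixels along each axis at the given ground sample distance."""
    return length_m / gsd_m, width_m / gsd_m


# Illustrative car size; GSD values span very high to moderate resolution.
for gsd in (0.3, 1.0, 5.0):
    length_px, width_px = footprint_pixels(4.5, 1.8, gsd)
    print(f"GSD {gsd} m: {length_px:.1f} x {width_px:.1f} px")
```

At 0.3 m the car is about 15 by 6 pixels. At 5 m it shrinks below one pixel in each direction, leaving a detector nothing resembling its training examples.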

This sensitivity creates important scaling decisions that impact both technical architecture and resource allocation:

- Training Data Strategy: Supporting multiple resolutions requires choosing between broad model capabilities or focused precision, with each approach demanding different data collection strategies

- Model Architecture Decisions: Teams must decide between building specialized models for specific resolutions or investing in larger foundational models capable of handling resolution diversity

- Computational Resource Planning: Broader models capable of handling multiple resolutions require significantly more computational resources, necessitating infrastructure planning that aligns with application requirements

Sensor and Modality Divergence

Even at identical resolutions and specifications, imagery from different sensors exhibits profound differences that create domain adaptation challenges. SAR imagery from different providers like Capella and ICEYE, despite identical specifications, requires separate training considerations due to subtle differences in sensor physics and processing chains.

The optical-to-SAR domain gap presents particularly formidable challenges:

- Physical Property Differences: Optical images capture reflected light revealing color and texture, while SAR measures microwave backscatter revealing surface roughness and dielectric properties

- Noise Characteristics: SAR imagery contains significant speckle noise that fundamentally alters feature appearance

- Spectral Signature Variations: The same object can have completely different signatures across modalities
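A short simulation shows why speckle changes feature appearance so much. Under the widely used multiplicative model for fully developed speckle, single-look intensity over a uniform surface is exponentially distributed around the true backscatter. Pixel values therefore fluctuate about as much as their mean, giving a coefficient of variation near 1. Averaging L independent looks (multilooking) reduces that to roughly 1/√L, at the cost of spatial resolution. This is a simplified statistical sketch; the backscatter value and sample counts are hypothetical.

```python
import random
import statistics


def simulate_intensity(backscatter: float, looks: int, rng: random.Random) -> float:
    """Mean of `looks` independent single-look intensities (exponential speckle, mean 1)."""
    return sum(backscatter * rng.expovariate(1.0) for _ in range(looks)) / looks


def coefficient_of_variation(samples) -> float:
    """Standard deviation divided by mean."""
    return statistics.pstdev(samples) / statistics.fmean(samples)


rng = random.Random(0)
homogeneous_backscatter = 0.2  # hypothetical constant surface (linear units)
for looks in (1, 4, 16):
    samples = [simulate_intensity(homogeneous_backscatter, looks, rng) for _ in range(20_000)]
    print(looks, round(coefficient_of_variation(samples), 2))
```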

Off-Nadir Geometric Distortion Complexity

Geometric distortions from off-nadir viewing angles present severe technical challenges that compound with increasing view angles:

- Building Lean Effects: Tall structures appear to lean away from image center, creating perspective distortions that confound standard detection algorithms

- Variable Ground Resolution: Effective resolution degrades with increasing off-nadir angles, creating inconsistent feature scales within single images

- Shadow Elongation: Shadows become elongated and can obscure features, creating occlusion patterns not present in nadir imagery

- Geometric Measurement Errors: Standard measurement tools become inaccurate without complex geometric correction factors
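A first-order estimate of building lean uses the relief displacement approximation d ≈ h · tan(θ). Here h is the object's height and θ is the viewing angle measured from vertical at the ground. The sketch assumes flat terrain and ignores Earth curvature, which makes the ground incidence angle somewhat larger than the satellite's off-nadir angle. The building height and GSD are hypothetical.

```python
import math


def lean_displacement_m(height_m: float, view_angle_deg: float) -> float:
    """Approximate horizontal offset of a roof relative to its base (flat-Earth model)."""
    return height_m * math.tan(math.radians(view_angle_deg))


def lean_displacement_px(height_m: float, view_angle_deg: float, gsd_m: float) -> float:
    """The same offset expressed in pixels at a given ground sample distance."""
    return lean_displacement_m(height_m, view_angle_deg) / gsd_m


# Hypothetical 30 m building imaged at 0.5 m GSD from increasing view angles.
for angle in (0, 15, 30):
    print(angle, round(lean_displacement_px(30.0, angle, 0.5), 1))
```

At 30 degrees the roof is displaced by roughly 17 m, about 35 pixels at this GSD. An axis-aligned label drawn on the roof therefore no longer sits over the building footprint.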

Environmental and Temporal Dynamics: The Moving Target Problem

Geospatial data captures a world in constant flux, where environmental conditions dramatically alter landscape appearance and object characteristics. Climate applications illustrate the problem. Inferring environmental and temporal dynamics from imagery, including long-term climate trends, is exceedingly difficult because the observed data itself keeps changing. This creates fundamental challenges for model generalization and reliability.

Seasonal and Weather-Driven Domain Shifts

Environmental variations create dramatic changes in spectral signatures and visual appearance that render models brittle across conditions. For an AI model analyzing aerial imagery, these seasonal and weather-driven changes create a complex and unreliable visual landscape:

- Seasonal Spectral Variations: The same agricultural field exhibits completely different spectral characteristics across growing seasons, from bare soil to full canopy coverage

- Snow Cover Complications: Snow fundamentally alters both spectral signatures and geometric appearance of features, masking underlying objects and creating false positives

- Weather Impact: Cloud shadows, atmospheric haze, and precipitation create dynamic lighting conditions that affect feature visibility and classification accuracy
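Seasonal spectral variation is easy to see in a standard vegetation index such as NDVI, defined as (NIR − Red) / (NIR + Red). The reflectance values below are illustrative assumptions for one field at two dates, not measurements. They show how the same location can look like a different class to a model depending on the acquisition date.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index from surface reflectances."""
    total = nir + red
    if total == 0:
        raise ValueError("NIR + red reflectance must be non-zero")
    return (nir - red) / total


# Illustrative (hypothetical) reflectances for the same field at two dates.
bare_soil = ndvi(nir=0.30, red=0.25)    # early season
full_canopy = ndvi(nir=0.45, red=0.05)  # peak growing season
print(round(bare_soil, 2), round(full_canopy, 2))
```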

Biome and Geographic Generalization Challenges

Models trained in specific geographic regions or biomes face severe generalization challenges:

- Architectural Variations: Building styles and urban patterns vary dramatically across regions, making object detection models trained on one geographic area unreliable in others

- Vegetation Differences: Forest classification models trained on temperate deciduous forests fail in tropical rainforests or boreal coniferous forests

- Geological Diversity: Terrain characteristics, soil types, and geological formations create background variations that affect feature detection accuracy

Temporal Dimension Modeling Complexity

The temporal dimension in geospatial data presents unique technical challenges that standard computer vision approaches are not designed to address:

- Irregular Time Series: Real-world satellite data suffers from irregular collection schedules due to cloud cover, satellite orbits, and data availability

- Multi-temporal Registration: Aligning images from different collection times requires accounting for seasonal changes, construction activities, and natural disasters

- Temporal Sparsity: Critical events may occur between satellite passes, creating temporal gaps that limit change detection accuracy
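A practical first step with irregular time series is to audit where usable observations are missing. The sketch below flags gaps between consecutive usable acquisitions that exceed a chosen tolerance. The dates and the 10-day tolerance are hypothetical.

```python
from datetime import date, timedelta
from typing import List, Tuple


def find_gaps(acquisitions: List[date], max_gap: timedelta) -> List[Tuple[date, date]]:
    """Return consecutive acquisition pairs separated by more than max_gap."""
    ordered = sorted(set(acquisitions))
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > max_gap]


# Hypothetical cloud-free acquisition dates for one tile.
usable = [date(2024, 3, 1), date(2024, 3, 6), date(2024, 3, 11),
          date(2024, 4, 20), date(2024, 4, 25)]
for start, end in find_gaps(usable, timedelta(days=10)):
    print(f"No usable imagery between {start} and {end} ({(end - start).days} days)")
```

Any change that happened inside such a 40-day window can only be bracketed, not dated. That limits what a change detection model can be expected to learn from these labels.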

The Model Architecture Dilemma: Scale vs. Specialization

The choice between large foundational models and specialized fine-tuned models presents fundamental trade-offs that significantly impact development strategies and operational requirements. This trade-off is central to modern geospatial artificial intelligence, forcing engineers to decide between adapting massive, pretrained deep learning models for general use, or applying specialized deep learning techniques to build models for very specific tasks.

Foundational Model Complexity and Limitations

Computational Barriers:

- Extreme Training Costs: Pre-training can require millions of GPU hours and massive, curated datasets, placing development beyond most organizations' reach

- Memory Requirements: Large models demand substantial memory during training and inference, limiting deployment options

- Inference Latency: Model size creates latency challenges for real-time applications
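A common back-of-the-envelope estimate puts the memory barrier in perspective. For full-precision training with the Adam optimizer, it is about 16 bytes per parameter: fp32 weights and gradients plus two optimizer moment buffers, before counting activations. The sketch encodes that rule of thumb. The 1-billion-parameter size is hypothetical, not a figure for any specific geospatial foundation model. Real usage varies with precision, framework, and sharding.

```python
def training_memory_gb(num_params: float, bytes_per_param: int = 16) -> float:
    """Rough memory for weights, gradients and Adam state, excluding activations.

    Default of 16 bytes/param assumes fp32 weights (4), fp32 gradients (4)
    and two fp32 Adam moment buffers (4 + 4).
    """
    return num_params * bytes_per_param / 1e9


def inference_weights_gb(num_params: float, bytes_per_weight: int = 2) -> float:
    """Memory for the weights alone at inference time (default: 16-bit weights)."""
    return num_params * bytes_per_weight / 1e9


# Hypothetical 1-billion-parameter model.
print(training_memory_gb(1e9), inference_weights_gb(1e9))
```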

Performance Trade-offs:

- Generalization vs. Precision: While offering broad applicability, geospatial foundation models (GeoFMs) may underperform specialized models for specific tasks

- Interpretability Challenges: Model complexity makes it difficult to understand decision processes, problematic for high-stakes applications

- Domain Drift Susceptibility: Large models require continuous monitoring and expensive retraining to maintain performance

Specialist Model Brittleness

Fine-tuned specialist models face their own set of technical limitations:

Generalization Failures:

- Narrow Applicability: Models optimized for specific sensors, regions, or object classes fail to generalize beyond their training domain

- Data Dependence: Each new application requires extensive labeled datasets, creating bottlenecks in model development

- Maintenance Complexity: Managing portfolios of specialist models creates significant operational overhead

Scaling Challenges:

- Model Proliferation: Organizations end up maintaining numerous models, each with separate training data, code, and update cycles

- Resource Fragmentation: Development resources become divided across multiple narrow-use models rather than consolidated capabilities

Advanced Image Processing and Annotation Challenges

The unique characteristics of geospatial imagery create fundamental challenges in image processing and annotation that don’t exist in traditional computer vision applications. Modern geospatial technology and remote sensing systems produce vast and complex geographic data, making the task of extracting accurate information a significant hurdle. These challenges are especially pronounced in high-stakes applications, such as monitoring critical infrastructure, where errors can have serious consequences.

The Computational Scale Problem

Geospatial images often exceed 40,000 pixels on each side, creating computational challenges that force difficult trade-offs between processing efficiency and spatial context preservation:

Memory and Processing Constraints:

- GPU Memory Limitations: Standard GPUs cannot process full-resolution geospatial images directly

- Processing Time Escalation: Full-image processing becomes prohibitively slow for large datasets

- Storage and Transfer Overhead: Large files create bandwidth bottlenecks and storage cost challenges
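The GPU memory limitation follows from the raw array size alone. The sketch below computes the uncompressed size of a 40,000 × 40,000 raster for a few band counts and data types, which are illustrative choices. This is only the input tensor; the intermediate activations of a deep network are typically many times larger.

```python
def raster_bytes(width: int, height: int, bands: int, bytes_per_sample: int) -> int:
    """Uncompressed in-memory size of a raster array."""
    return width * height * bands * bytes_per_sample


# A 40,000 x 40,000 pixel image with illustrative band counts and sample types.
for bands, sample_bytes, label in [(3, 1, "RGB uint8"), (3, 4, "RGB float32"),
                                   (8, 4, "8-band float32")]:
    gb = raster_bytes(40_000, 40_000, bands, sample_bytes) / 1e9
    print(f"{label}: {gb:.1f} GB")
```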

The Chipping Strategy Decision: Breaking large images into smaller, manageable tiles represents a fundamental design choice with clear trade-offs:

- Context vs. Efficiency: Tiling optimizes processing speed and memory usage but requires careful overlap planning to preserve spatial relationships

- Boundary Management: Objects spanning tile boundaries demand sophisticated restitching strategies, with the complexity justified by improved processing efficiency

- Annotation Workflow Optimization: Empty tiles can be filtered programmatically, and intelligent tiling algorithms can focus annotation effort on information-rich regions

- Reconstruction Planning: Reassembling predictions from tiles requires algorithm design decisions that balance accuracy with computational overhead
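The chipping choices above can be sketched in a few functions. They generate overlapping windows, with the last tile shifted flush to the image edge, and map chip-local boxes back to full-image pixels. They also derive each chip's own GDAL-style geotransform so the spatial context travels with it. The 512-pixel tile and 64-pixel overlap are hypothetical defaults. Duplicate detections in overlap zones still need merging, for example with non-maximum suppression, which is not shown.

```python
from typing import List, Sequence, Tuple

Window = Tuple[int, int, int, int]  # (col_off, row_off, width, height)


def _tile_starts(length: int, tile: int, stride: int) -> List[int]:
    """Start offsets along one axis; the last tile is shifted to end at the edge."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile + 1, stride))
    if starts[-1] != length - tile:
        starts.append(length - tile)
    return starts


def tile_windows(width: int, height: int, tile: int = 512, overlap: int = 64) -> List[Window]:
    """Overlapping chip windows covering a width x height image."""
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    return [(col, row, min(tile, width - col), min(tile, height - row))
            for row in _tile_starts(height, tile, stride)
            for col in _tile_starts(width, tile, stride)]


def chip_box_to_image(box: Tuple[float, float, float, float], window: Window):
    """Shift a chip-local (x_min, y_min, x_max, y_max) box into full-image pixels."""
    col_off, row_off = window[0], window[1]
    x0, y0, x1, y1 = box
    return (x0 + col_off, y0 + row_off, x1 + col_off, y1 + row_off)


def chip_geotransform(gt: Sequence[float], window: Window) -> Tuple[float, ...]:
    """GDAL-style geotransform for a chip: same pixel size, shifted origin."""
    col_off, row_off = window[0], window[1]
    return (gt[0] + col_off * gt[1] + row_off * gt[2], gt[1], gt[2],
            gt[3] + col_off * gt[4] + row_off * gt[5], gt[4], gt[5])


print(tile_windows(1000, 300))
```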

Intelligent Scale Management Solutions: We address the scale challenge through dynamic tiling systems that handle massive geospatial files by loading only necessary portions while maintaining full spatial context through interactive navigation tools like mini-maps and coordinate-based positioning. This allows teams to optimize their workflow for either processing speed or spatial awareness based on their specific analytical requirements.

Geospatial Precision Requirements

Geospatial annotation demands maintaining real-world coordinate accuracy throughout the labeling process, creating unique technical requirements:

Coordinate System Integrity:

- Multi-Projection Support: Different datasets may use various coordinate systems requiring complex transformations

- Precision Maintenance: Small errors in coordinate transformations accumulate and can render datasets spatially inaccurate

- Datum Consistency: Ensuring consistent reference datums across multi-source datasets requires careful validation
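One routine projection decision is choosing a single metric coordinate system before measuring or merging sources. A common choice for a local area is the WGS 84 UTM zone containing it, whose EPSG codes are 326NN (north) and 327NN (south). The sketch below derives that code from a longitude and latitude. It deliberately ignores the special zone boundaries around Norway and Svalbard. The reprojection itself would be done with a library such as pyproj or GDAL and is not shown.

```python
import math


def utm_epsg(lon: float, lat: float) -> int:
    """EPSG code of the WGS 84 / UTM zone containing (lon, lat).

    Ignores the irregular zones around Norway and Svalbard.
    """
    if not (-180.0 <= lon <= 180.0 and -80.0 <= lat <= 84.0):
        raise ValueError("outside the UTM coverage handled here")
    zone = min(math.floor((lon + 180.0) / 6.0) + 1, 60)  # lon == 180 maps to zone 60
    return (32600 if lat >= 0 else 32700) + zone


print(utm_epsg(2.35, 48.85))     # Paris
print(utm_epsg(151.21, -33.87))  # Sydney
```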

Mensuration and Real-World Accuracy: Beyond coordinate integrity, geospatial annotation demands precise real-world measurements that standard computer vision annotation cannot provide:

- Real-World Measurement Accuracy: Annotation tools must provide accurate distance and area measurements in real-world units

- Scale Validation: Annotations must maintain correct scale relationships for measurement-dependent applications

- Geometric Validation: Ensuring annotated features conform to realistic geometric constraints
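Real-world measurement of pixel-space annotations can be sketched with the same affine geotransform used for coordinate conversion. Area uses the shoelace formula scaled by the ground area of one pixel. Length transforms the pixel offset through the geotransform's linear part. This assumes the geotransform is expressed in a projected CRS with meter units (for example UTM) and that the image is orthorectified. The example values are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (col, row) in pixel space


def polygon_area_m2(vertices: Sequence[Point], gt: Sequence[float]) -> float:
    """Shoelace area of a pixel-space polygon times the ground area of one pixel."""
    if len(vertices) < 3:
        raise ValueError("a polygon needs at least three vertices")
    twice_area = 0.0
    for (c0, r0), (c1, r1) in zip(vertices, list(vertices[1:]) + [vertices[0]]):
        twice_area += c0 * r1 - c1 * r0
    pixel_area = abs(gt[1] * gt[5] - gt[2] * gt[4])  # square meters per pixel
    return abs(twice_area) / 2.0 * pixel_area


def segment_length_m(p: Point, q: Point, gt: Sequence[float]) -> float:
    """Ground length of a pixel-space segment via the geotransform's linear part."""
    dcol, drow = q[0] - p[0], q[1] - p[1]
    dx = dcol * gt[1] + drow * gt[2]
    dy = dcol * gt[4] + drow * gt[5]
    return math.hypot(dx, dy)


north_up_05m = (500000.0, 0.5, 0.0, 4649776.0, 0.0, -0.5)  # hypothetical
print(polygon_area_m2([(0, 0), (40, 0), (40, 20), (0, 20)], north_up_05m))  # footprint
print(segment_length_m((0, 0), (30, 40), north_up_05m))  # e.g. a wingspan
```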

Balancing Measurement and Training Requirements: We integrate measurement capabilities directly into the labeling workflow. These tools enable annotators to measure objects in real-world coordinates while maintaining pixel-perfect accuracy for model training. For applications requiring precise measurements—such as aircraft identification, cargo assessment, or infrastructure analysis—this capability bridges the gap between geospatial analysis requirements and AI training needs. The dual requirement for both measurement precision and training compatibility creates implementation decisions that teams must navigate based on their specific accuracy thresholds and computational resources.

Multi-Layer Registration Challenges

Advanced geospatial applications require simultaneous viewing and annotation of multiple data layers:

Technical Registration Requirements:

- Sub-Pixel Accuracy: Co-registering images from different sensors or times requires sub-pixel precision

- Temporal Alignment: Aligning data collected at different times while accounting for changes in the scene

- Multi-Spectral Coordination: Registering different spectral bands while preserving radiometric accuracy
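A simple way to check whether co-registration meets a sub-pixel requirement is the root-mean-square error between matched tie points on the two layers. The sketch assumes the tie points have already been identified, manually or by feature matching (not shown). The coordinates and the 1-pixel threshold are hypothetical project settings.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def registration_rmse(reference: Sequence[Point], aligned: Sequence[Point]) -> float:
    """Root-mean-square distance, in pixels, between matched tie points."""
    if not reference or len(reference) != len(aligned):
        raise ValueError("need equally sized, non-empty tie-point lists")
    squared = [(xa - xr) ** 2 + (ya - yr) ** 2
               for (xr, yr), (xa, ya) in zip(reference, aligned)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical tie points on a reference layer and on a co-registered layer.
ref = [(120.0, 80.0), (940.5, 210.25), (500.0, 760.0)]
moved = [(120.2, 80.1), (940.3, 210.45), (500.1, 759.8)]
rmse = registration_rmse(ref, moved)
print(f"RMSE = {rmse:.3f} px, sub-pixel: {rmse < 1.0}")
```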

Strategic Data Fusion Implementation: We support multi-layer workflows through sophisticated image stacking capabilities. Annotators can overlay different sensor types, temporal captures, or spectral bands, adjusting opacity and toggling layers to gain comprehensive understanding of the scene. This multi-layer approach proves particularly valuable for SAR analysis, where combining optical and radar imagery provides orientation context while preserving the unique characteristics of each sensor modality.

Teams must strategically balance the complexity of multi-source integration against their analytical requirements. Different data sources operating at varying spatial and temporal resolutions require preprocessing decisions that optimize either processing efficiency or information richness, with the choice depending on specific application needs and available computational resources.

Quality Assurance: Beyond Standard Computer Vision Metrics

Geospatial QA faces fundamental challenges that extend far beyond traditional computer vision validation. Annotations must be validated for semantic accuracy and geometric precision at the same time, which makes validation frameworks complex. QA processes must also analyze broader spatial patterns to catch problems such as illogical object placements (for example, a vessel labeled in the middle of a farm field). This kind of interpretation often relies on visual representations of geospatial data, such as interactive maps and imagery.

Geospatial-Specific Quality Validation Challenges

Geometric Accuracy Validation:

- Spatial Precision Measurement: Quantifying how precisely annotated geometry aligns with true object boundaries requires sophisticated spatial accuracy metrics

- Multi-Scale Validation: Accuracy requirements vary significantly across different spatial scales and application domains

- Coordinate System Validation: Ensuring annotations maintain accuracy across coordinate system transformations

Spatial Consistency Challenges:

- Topological Validation: Ensuring labels adhere to logical geographic relationships and constraints

- Cross-Layer Consistency: Validating consistency across multiple data layers and annotation types

- Temporal Consistency: Maintaining logical relationships in time-series annotation datasets

Multi-Layered Quality Assurance Complexity

Geospatial QA requires sophisticated frameworks combining multiple validation approaches:

Inter-Annotator Agreement Challenges:

- Subjective Boundary Definition: Natural features often have ambiguous boundaries that create disagreement among annotators

- Expertise Requirements: Geospatial annotation often requires domain expertise that creates annotator skill disparities

- Consensus Threshold Determination: Establishing appropriate agreement thresholds for different feature types and applications

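For categorical labels assigned to the same set of objects or tiles, one common agreement statistic is Cohen's kappa. It discounts the agreement two annotators would reach by chance given their own class frequencies. The sketch is a plain two-annotator implementation with hypothetical class names. Boundary agreement for polygons is better measured with a spatial metric such as IoU.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two annotators labeling the same items."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("need two equally sized, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected by chance from each annotator's class frequencies.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical class throughout; treat as full agreement
    return (observed - expected) / (1.0 - expected)


annotator_1 = ["building", "building", "road", "road", "building"]
annotator_2 = ["building", "road", "road", "road", "building"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))
```

Raw agreement here is 80%, but kappa is only about 0.62 once chance agreement is removed. This gap is why consensus thresholds are usually set on chance-corrected statistics.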

Expert Review Bottlenecks:

- Subject Matter Expert Scarcity: Limited availability of experts capable of authoritative adjudication

- Scalability Constraints: Expert review doesn't scale effectively with large dataset requirements

- Consistency Maintenance: Ensuring consistent expert decisions across large annotation campaigns

Programmatic Validation Limitations:

- Rule Definition Complexity: Encoding complex geospatial business rules into automated validation systems requires extensive domain knowledge

- False Positive Management: Automated systems often flag valid annotations as errors, requiring manual review overhead

- Context Sensitivity: Many validation rules require contextual understanding that automated systems struggle to capture

Quality Metric Integration Challenges

Semantic vs. Spatial Accuracy Trade-offs:

- Metric Weighting: Balancing importance of semantic correctness versus geometric precision across different applications

- Threshold Optimization: Determining appropriate IoU thresholds and spatial tolerance levels for different feature types

- Application-Specific Requirements: Quality requirements vary dramatically across defense, environmental, and commercial applications

Implementing Systematic Quality Assurance

The unique validation requirements of geospatial data necessitate QA frameworks that go far beyond traditional computer vision metrics. Professional geospatial annotation requires systematic quality assurance that addresses both semantic accuracy and spatial precision simultaneously.

Enterprise-Grade QA Workflows: We enable customizable QA workflows that can scale from basic single-annotator processes to enterprise-grade multi-step validation. For high-accuracy applications requiring near-ground-truth quality, teams can implement dual-annotator workflows where two independent annotators label the same imagery. Our automated comparison engines then analyze the results, measuring intersection over union, object count agreement, and classification consistency, with discrepancies automatically flagged and routed to quality control analysts for resolution.
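The kind of comparison described above can be sketched for bounding boxes. The sketch computes pairwise IoU and greedily matches the highest-overlap pairs above a threshold. Unmatched boxes on either side, plus the object count difference, become candidates for QC review. This is an illustrative sketch, not Kili's comparison engine. The 0.5 IoU threshold and the box coordinates are hypothetical.

```python
from typing import Dict, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def compare_annotators(boxes_a: Sequence[Box], boxes_b: Sequence[Box],
                       threshold: float = 0.5) -> Dict[str, object]:
    """Greedily match two annotators' boxes by IoU and report disagreements."""
    candidates = sorted(((iou(a, b), i, j)
                         for i, a in enumerate(boxes_a)
                         for j, b in enumerate(boxes_b)), reverse=True)
    used_a, used_b, matches = set(), set(), []
    for score, i, j in candidates:
        if score < threshold:
            break  # remaining pairs overlap too little to be the same object
        if i in used_a or j in used_b:
            continue
        used_a.add(i)
        used_b.add(j)
        matches.append((i, j, score))
    return {
        "matches": matches,
        "unmatched_a": [i for i in range(len(boxes_a)) if i not in used_a],
        "unmatched_b": [j for j in range(len(boxes_b)) if j not in used_b],
        "count_difference": len(boxes_a) - len(boxes_b),
    }


# Hypothetical labels from two independent annotators on the same chip.
report = compare_annotators([(0, 0, 10, 10), (20, 20, 30, 30)],
                            [(1, 1, 11, 11), (50, 50, 60, 60)])
print(report["matches"], report["unmatched_a"], report["unmatched_b"])
```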

Adaptive Quality Management Strategy: The flexibility to customize QA intensity based on data difficulty enables teams to optimize resource allocation while maintaining quality standards. Straightforward annotation campaigns might use 10% random sampling for quality review, while challenging datasets with complex ontologies might require 100% quality control review. Real-time metrics tracking enables teams to adjust QA parameters dynamically, increasing review levels when error rates climb or reducing oversight when annotators demonstrate consistent accuracy.
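An adaptive review policy like this can be sketched as a mapping from recent error rate to review fraction, followed by random sampling of assets. The 10% baseline and 100% full review mirror the examples above. The 2% and 5% error-rate thresholds and the intermediate 50% tier are hypothetical values that a team would tune per project.

```python
import random
from typing import List, Optional, Sequence


def review_fraction(recent_error_rate: float) -> float:
    """Hypothetical policy: escalate review as the observed error rate rises."""
    if recent_error_rate >= 0.05:
        return 1.0   # full quality control review
    if recent_error_rate >= 0.02:
        return 0.5
    return 0.10      # baseline random sampling


def sample_for_review(asset_ids: Sequence[str], fraction: float,
                      seed: Optional[int] = None) -> List[str]:
    """Randomly pick about `fraction` of the assets (at least one) for QA review."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    if not asset_ids or fraction == 0.0:
        return []
    k = min(len(asset_ids), max(1, round(len(asset_ids) * fraction)))
    return random.Random(seed).sample(list(asset_ids), k)


ids = [f"asset-{i}" for i in range(200)]
print(len(sample_for_review(ids, review_fraction(0.01), seed=42)))
```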

This adaptive approach helps datasets reach accuracy targets such as the 95% level many teams require for reliable AI training, while optimizing resource allocation across large-scale annotation campaigns. Teams can choose their quality assurance intensity based on application requirements, balancing the need for spatial precision against project timelines and budget constraints.

Got questions about quality?

Our team regularly works with companies that require their datasets to achieve 95%+ accuracy. See how Kili Technology can empower your teams to reach the same precision standards.


Conclusion

Building reliable geospatial datasets for AI training requires navigating a complex landscape of technical challenges that span data engineering, computer vision, and geospatial science. These challenges are fundamentally different from traditional computer vision applications due to the spatial precision requirements, format complexity, environmental variability, and scale demands inherent in geospatial data.

The technical obstacles—from the foundational geospace-to-pixel-space translation problem to the nuances of multi-layered quality assurance—underscore that success in GeoAI requires strategic engineering decisions rather than one-size-fits-all solutions. Modern geospatial annotation platforms have evolved to address many fundamental challenges through specialized capabilities—including native coordinate workflow support, intelligent handling of massive file sizes, multi-layer visualization tools, and sophisticated quality assurance frameworks—providing teams with the flexibility to optimize their approach based on specific application requirements.

The interconnected nature of these challenges means that solving one aspect often creates new opportunities for optimization in another. For instance, while automated coordinate conversion tools reduce translation errors, teams can choose to invest in specialized geometric correction algorithms for applications requiring maximum precision. Similarly, dynamic tiling solutions enable teams to prioritize either processing efficiency or spatial context preservation based on their specific analytical needs.

As the field continues evolving with multi-agent AI systems and increasingly sophisticated foundation models, these foundational challenges will remain critical bottlenecks that must be understood and carefully managed. The complexity and precision requirements of real-world geospatial applications demand that machine learning engineers approach these challenges with deep technical understanding and systematic engineering discipline.

The path forward requires recognizing that geospatial AI is inherently a multi-disciplinary frontier where success depends on mastering the unique technical challenges that arise at the intersection of spatial science, computer vision, and machine learning.
