The satellite imagery analysis pipeline has undergone a fundamental transformation. Traditional workflows—characterized by extensive manual annotation and custom model development—are giving way to intelligent systems that leverage foundation models and AI-assisted processing. This evolution represents not just incremental improvement, but a reimagining of how organizations approach earth observation at scale.

This article examines the modern pipeline for satellite imagery projects, exploring how advances in data processing, annotation technology, and model architecture are reshaping the field. We'll use land use classification as a lens through which to understand these broader transformations.

The Paradigm Shift in Satellite Imagery Analysis

The transition from traditional to modern pipelines reflects broader changes in computer vision and machine learning. Where each satellite imagery project once required building specialized models from scratch, today's approaches start from pre-trained foundation models whose learned visual representations transfer across domains.

This shift addresses fundamental challenges that have long constrained satellite imagery projects:

- The exponential growth in data volume from modern satellite constellations

- The scarcity of domain expertise for accurate annotation

- The computational cost of training large models from scratch

- The need for models that generalize across geographic regions

Rethinking Data Processing for Scale

The Foundation of Modern Pipelines

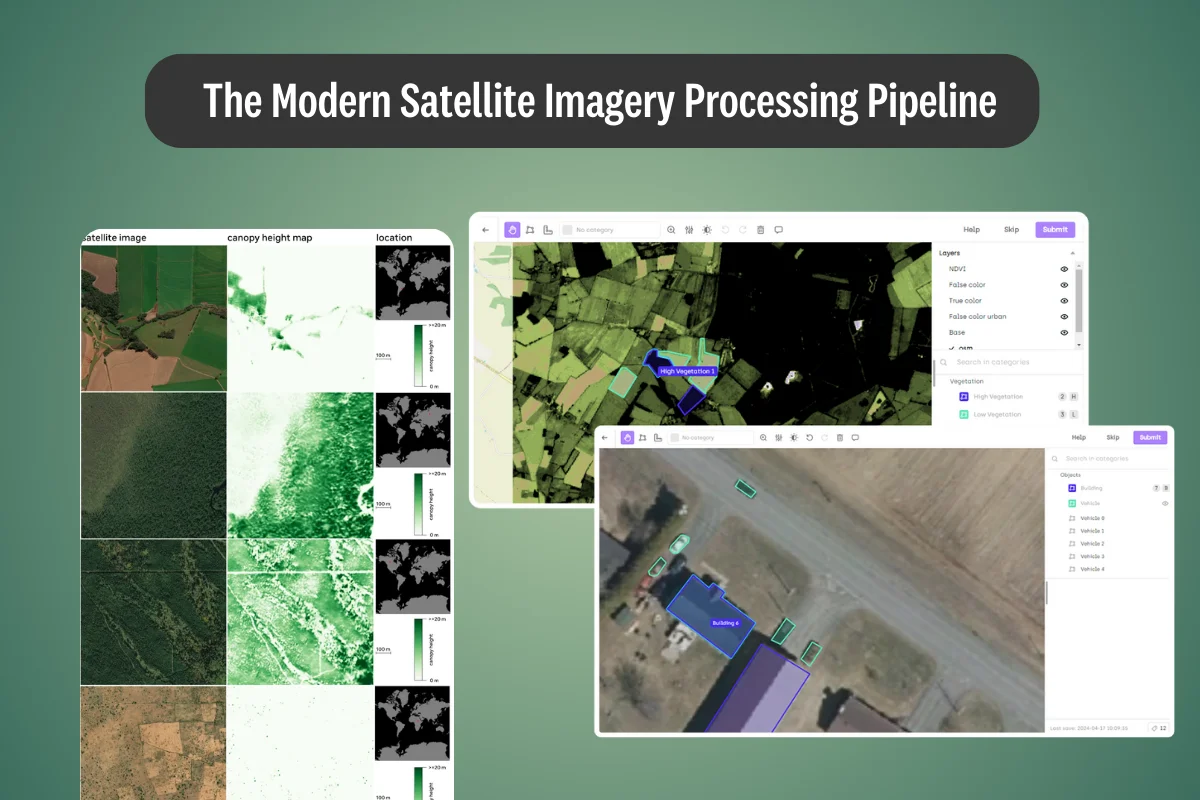

Contemporary data processing goes beyond simple image handling. Modern platforms show how intelligent preprocessing can preserve the rich information in satellite imagery while keeping downstream processing efficient. Native support for geospatial formats (GeoTIFF, JP2, NITF) and automatic optimization for multi-gigabyte files represent a significant evolution from traditional image processing workflows.

The key insight is that satellite imagery is fundamentally different from natural images. Each pixel carries geographic meaning, spectral bands encode information beyond visible light, and temporal sequences reveal dynamic processes. Modern pipelines preserve and leverage these unique characteristics rather than treating satellite images as generic visual data.
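To make "each pixel carries geographic meaning" concrete, here is a minimal sketch of the affine geotransform that most GeoTIFF-based pipelines use to relate pixel positions to map coordinates. It assumes the six-coefficient ordering returned by GDAL's `GetGeoTransform()`, with (0, 0) at the top-left corner of the top-left pixel; the function names and the example transform (10 m pixels in a projected coordinate system) are illustrative.

```python
from typing import Tuple

# GDAL-style geotransform coefficients:
# (x_origin, pixel_width, row_rotation, y_origin, column_rotation, pixel_height)
GeoTransform = Tuple[float, float, float, float, float, float]


def pixel_to_map(gt: GeoTransform, col: float, row: float) -> Tuple[float, float]:
    """Map a pixel position (col, row) to map coordinates (x, y)."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def map_to_pixel(gt: GeoTransform, x: float, y: float) -> Tuple[float, float]:
    """Invert the 2x2 affine part of the transform (rotation terms included)."""
    det = gt[1] * gt[5] - gt[2] * gt[4]
    if det == 0:
        raise ValueError("geotransform is not invertible")
    dx, dy = x - gt[0], y - gt[3]
    col = (dx * gt[5] - dy * gt[2]) / det
    row = (dy * gt[1] - dx * gt[4]) / det
    return col, row


# Illustrative north-up transform: 10 m pixels, negative pixel height
gt = (500000.0, 10.0, 0.0, 4200000.0, 0.0, -10.0)
print(pixel_to_map(gt, 100, 50))               # (501000.0, 4199500.0)
print(map_to_pixel(gt, 501000.0, 4199500.0))   # (100.0, 50.0)
```

Under this convention pixel centers sit at (col + 0.5, row + 0.5), a common source of half-pixel offsets when labels move between tools.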

Architectural Considerations

Effective data processing architectures now incorporate:

- Hierarchical tiling systems that enable smooth navigation across vast imagery while maintaining full resolution access

- Lazy loading strategies that handle datasets with thousands of annotations without exhausting memory

- Spectral band management that preserves multispectral information throughout the pipeline

- Coordinate system preservation ensuring geographic accuracy from ingestion through deployment
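Hierarchical tiling and lazy loading can be sketched together: tile windows for each level of an image pyramid are computed on demand, so nothing is read until a window is actually requested. This is a minimal sketch assuming square tiles and a factor-of-2 downsampling per pyramid level; `iter_tiles` and `num_levels` are hypothetical helpers, and the 10,980 by 10,980 example matches the pixel dimensions of a Sentinel-2 tile at 10 m.

```python
import math
from dataclasses import dataclass
from typing import Iterator


@dataclass(frozen=True)
class TileWindow:
    level: int   # pyramid level; 0 = full resolution
    col: int     # tile column index at this level
    row: int     # tile row index at this level
    x_off: int   # window origin in full-resolution pixels
    y_off: int
    width: int   # window extent in full-resolution pixels (clipped at edges)
    height: int


def num_levels(width: int, height: int, tile: int = 512) -> int:
    """Number of pyramid levels until the whole image fits in one tile."""
    levels = 1
    while max(width, height) > tile * 2 ** (levels - 1):
        levels += 1
    return levels


def iter_tiles(width: int, height: int, tile: int = 512, level: int = 0) -> Iterator[TileWindow]:
    """Lazily yield tile windows for one pyramid level; no pixels are read here."""
    span = tile * 2 ** level  # full-resolution pixels covered by one tile side
    for row in range(math.ceil(height / span)):
        for col in range(math.ceil(width / span)):
            x_off, y_off = col * span, row * span
            yield TileWindow(level, col, row, x_off, y_off,
                             min(span, width - x_off), min(span, height - y_off))


print(num_levels(10980, 10980))                  # 6
print(sum(1 for _ in iter_tiles(10980, 10980)))  # 484 tiles at full resolution
print(next(iter_tiles(10980, 10980, level=5)))   # one tile covering the whole image
```

In a real pipeline each window would be handed to a windowed reader (for example rasterio's `Window(col_off, row_off, width, height)`), reading from the file's overviews for levels above 0.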

Geospatial Annotation with AI Integration

SAM 2: A Case Study in Native AI Integration

The integration of advanced AI models directly into annotation platforms represents a watershed moment for satellite imagery workflows. Our native implementation of SAM 2 (Segment Anything Model 2) exemplifies this transformation, addressing fundamental challenges in computer vision data labeling that have long constrained geospatial projects.

Rather than treating AI assistance as an add-on, we embed these capabilities deeply into the annotation workflow. Our implementation demonstrates the power of this approach through a dual-model strategy—offering both a Rapid Model for large-scale initial annotation and a High-res Model for precision tasks. This architecture reflects an understanding that different project phases require different tools, allowing teams to balance speed and accuracy according to their specific requirements.

Our enhanced toolkit introduces interactive Point and Bounding Box tools that adapt intelligently to different zoom levels, ensuring consistent accuracy whether analyzing broad geographical areas or focusing on specific details. A particularly significant advancement is our seamless handling of both tiled and non-tiled images, eliminating traditional constraints of working with large-scale geospatial data.
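Zoom-aware prompting on tiled imagery comes down largely to coordinate bookkeeping: a click or box drawn on a zoomed-out view has to be mapped into full-resolution pixels, and then into the local frame of the tile that contains it, before it can serve as a prompt. The sketch below illustrates that idea under stated assumptions (a viewport described by its top-left image pixel and a zoom factor, fixed 1,024-pixel tiles). It is not our platform's implementation, and all names are hypothetical.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in image pixels


def screen_to_image(sx: float, sy: float, view_x: float, view_y: float, zoom: float) -> Point:
    """Convert a click on screen to full-resolution image pixels.

    view_x, view_y: image pixel shown at the viewport's top-left corner.
    zoom: screen pixels per image pixel (0.5 means zoomed out 2x).
    """
    return view_x + sx / zoom, view_y + sy / zoom


def image_to_tile(x: float, y: float, tile: int = 1024) -> Tuple[Tuple[int, int], Point]:
    """Return the (col, row) of the tile containing a point and the point's
    coordinates relative to that tile's top-left corner."""
    col, row = int(x // tile), int(y // tile)
    return (col, row), (x - col * tile, y - row * tile)


def clip_box_to_tile(box: Box, col: int, row: int, tile: int = 1024) -> Optional[Box]:
    """Clip an image-space box to one tile and express it in tile-local
    coordinates; returns None when the box does not overlap the tile."""
    tx0, ty0 = col * tile, row * tile
    x0, y0 = max(box[0], tx0), max(box[1], ty0)
    x1, y1 = min(box[2], tx0 + tile), min(box[3], ty0 + tile)
    if x1 <= x0 or y1 <= y0:
        return None
    return (x0 - tx0, y0 - ty0, x1 - tx0, y1 - ty0)


click = screen_to_image(300, 200, view_x=20000, view_y=8000, zoom=0.5)
print(click)                  # (20600.0, 8400.0)
print(image_to_tile(*click))  # ((20, 8), (120.0, 208.0))
```

Prompts that land near a tile border may still need context from neighboring tiles, which is one reason handling tiled and non-tiled images uniformly is harder than it looks.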

This native integration transforms annotation from a purely manual task into a human-AI collaboration. Annotators guide the AI through interactive refinement, leveraging machine intelligence for routine segmentation while applying human judgment to complex decisions. The result is faster annotation and a fundamental change in how labelers interact with satellite imagery.

Foundation Models: The New Starting Point

Understanding the Foundation Model Paradigm

The emergence of foundation models has transformed model development for satellite data. These models, pre-trained on vast datasets without task-specific labels, provide robust visual representations that transfer well to satellite imagery tasks such as change detection and land cover classification.

The key innovation lies in self-supervised learning: rather than requiring millions of labeled examples, foundation models learn visual concepts from the structure of images themselves. This approach is particularly valuable for geospatial data, where comprehensive labeling is expensive and often impractical at scale.

Foundation Models Across the Geospatial Landscape

Source: A screenshot of IBM and NASA's announcement of Prithvi.

Foundation models are demonstrating remarkable versatility across a range of geospatial analysis tasks. NASA and IBM's Prithvi models illustrate this potential through their application to multiple earth observation challenges. Prithvi-EO-1.0, trained on NASA's Harmonized Landsat Sentinel-2 (HLS) data, has been successfully fine-tuned for flood detection, wildfire burn scar mapping, and multi-temporal crop classification. The model achieved state-of-the-art performance in flood mapping while using significantly less training data, demonstrating the efficiency gains possible with foundation model approaches.

Building on this success, Prithvi-EO-2.0 extends these capabilities to global applications. It was pre-trained on 4.2 million global time-series samples and has shown practical impact in real-world deployments: IBM researchers applied the model to map flood extents in Valencia, Spain, following the devastating 2024 floods, and to track deforestation in the Bolivian Amazon by analyzing canopy height changes from 2016 to 2024.

Source: AI2's paper discussing their dataset for training geospatial foundation models.

SatlasPretrain models, developed by the Allen Institute for AI, represent another approach to geospatial foundation modeling. These models have proven effective for global-scale applications, powering the Satlas platform to automatically detect and map wind turbines, solar farm installations, offshore platforms, and tree cover density across diverse geographic regions. The models were trained on data covering 50 times more of the Earth's surface than the largest previous datasets, enabling robust generalization across different environmental conditions.

DINOv2: A computer vision model fine-tuned for geospatial use cases

Among these foundation models, DINOv2 is a particularly interesting example of how pre-trained representations can accelerate satellite imagery projects. Unlike the models above, DINOv2 is first and foremost a general-purpose computer vision model rather than one built specifically for earth observation. We have extensive experience with DINOv2 fine-tuning workflows, having developed comprehensive recipes for adapting the model to specific computer vision tasks through our platform's automation capabilities.

Researchers have successfully applied the model to geological image analysis, where it performed remarkably well in classifying and segmenting rock samples from CT-scan imagery. Its latent representations captured geological patterns effectively even without explicit training in the geological domain, showcasing robust transfer learning across specialized scientific applications.

Recent studies show that this versatility extends to satellite image processing, as the following examples illustrate.

In remote sensing object detection, DINOv2 has shown impressive discrimination abilities for distinguishing similar object types in satellite imagery. Research demonstrates the model's effectiveness in few-shot object detection scenarios, where it successfully identified different aircraft types, vehicle categories, and infrastructure elements with minimal training examples. The model's visual features proved particularly suitable for remote sensing detection tasks, outperforming text-supervised models in scenarios where fine-grained object understanding is crucial.
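One simple way to exploit frozen features with very few labels is nearest-prototype (class-mean) classification, a standard few-shot baseline. It is shown here as an illustration, not as the method used in the research cited above. The sketch assumes each candidate object has already been cropped and embedded (for example with a frozen DINOv2 backbone); the toy 3-D vectors and class names are made up, and a full detector would also need a region-proposal step.

```python
import math
from typing import Dict, List, Sequence, Tuple


def l2_normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def build_prototypes(support: List[Tuple[str, Sequence[float]]]) -> Dict[str, List[float]]:
    """Average the normalized embeddings of each class's few labeled examples."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for label, emb in support:
        e = l2_normalize(emb)
        acc = sums.setdefault(label, [0.0] * len(e))
        for i, x in enumerate(e):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {lbl: l2_normalize([s / counts[lbl] for s in acc]) for lbl, acc in sums.items()}


def classify(emb: Sequence[float], prototypes: Dict[str, List[float]]) -> str:
    """Assign the class whose prototype has the highest cosine similarity."""
    e = l2_normalize(emb)
    return max(prototypes, key=lambda lbl: sum(a * b for a, b in zip(e, prototypes[lbl])))


# Toy 3-D vectors stand in for frozen backbone embeddings of image chips
support = [
    ("airliner", [0.9, 0.1, 0.0]), ("airliner", [0.8, 0.2, 0.1]),
    ("fighter", [0.1, 0.9, 0.2]), ("fighter", [0.0, 0.8, 0.3]),
]
protos = build_prototypes(support)
print(classify([0.85, 0.15, 0.05], protos))  # airliner
```

Because the backbone stays frozen, adding a new object type only requires embedding a handful of examples and recomputing one prototype.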

Visual place recognition represents another successful application domain, where DINOv2's robust feature extraction capabilities enable accurate geographical localization from satellite and aerial imagery. The model's training on diverse visual data allows it to handle complex environmental conditions including lighting variations, seasonal changes, and partial occlusions—challenges that traditionally limit geospatial recognition systems.

Source: Meta's paper using DINOv2 to analyze forest canopy height

Meta's collaboration with the World Resources Institute to map global forest canopy height shows DINOv2's potential for large-scale environmental monitoring. By leveraging DINOv2's pre-trained representations, the team achieved the results documented in their research.

The model was pre-trained on 18 million unlabeled satellite images, learning general visual features without any task-specific annotations. For canopy height estimation specifically, the team fine-tuned it on aerial LiDAR data from sites across the USA. Despite this limited geographic training scope, the model generalized globally, with an average height error of 2.8 meters, and enabled individual tree detection at planetary scale.

This success illustrates the foundation model advantage: massive reduction in labeled data requirements, superior generalization across domains, and the ability to leverage unlabeled data for learning robust representations.

Strategic Approaches to Fine-Tuning

Fine-tuning foundation models for satellite imagery calls for deliberate choices rather than an off-the-shelf transfer learning recipe. Successful approaches typically involve:

Selective Layer Adaptation: Freezing early layers that capture general visual features while adapting later layers to satellite-specific patterns. This preserves the model's broad visual understanding while specializing for earth observation tasks.
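Selective layer adaptation usually reduces to deciding, per parameter, whether it receives gradients. The sketch below builds that decision as a plain name-to-flag mapping, assuming timm-style ViT parameter names (`blocks.<i>.…`, `patch_embed.…`, `cls_token`); backbones that group blocks differently would need a different pattern, and `trainable_mask` is a hypothetical helper.

```python
import re
from typing import Dict, Iterable

# Assumed timm-style ViT naming, e.g. "blocks.3.attn.qkv.weight".
BLOCK_RE = re.compile(r"^blocks\.(\d+)\.")
EMBEDDING_PREFIXES = ("patch_embed.", "cls_token", "pos_embed", "mask_token", "register_tokens")


def trainable_mask(param_names: Iterable[str], num_blocks: int,
                   num_trainable_blocks: int) -> Dict[str, bool]:
    """Map each parameter name to requires_grad.

    Embeddings and the first (num_blocks - num_trainable_blocks) transformer
    blocks stay frozen; later blocks, the final norm and any task head train.
    """
    first_trainable = num_blocks - num_trainable_blocks
    mask = {}
    for name in param_names:
        match = BLOCK_RE.match(name)
        if match:
            mask[name] = int(match.group(1)) >= first_trainable
        elif name.startswith(EMBEDDING_PREFIXES):
            mask[name] = False
        else:
            mask[name] = True  # final norm, task head, adapters, ...
    return mask


names = ["cls_token", "pos_embed", "patch_embed.proj.weight",
         "blocks.0.attn.qkv.weight", "blocks.10.mlp.fc1.weight",
         "blocks.11.norm1.weight", "norm.weight", "head.weight"]
mask = trainable_mask(names, num_blocks=12, num_trainable_blocks=2)
print(sorted(n for n, trainable in mask.items() if trainable))
# ['blocks.10.mlp.fc1.weight', 'blocks.11.norm1.weight', 'head.weight', 'norm.weight']
```

With PyTorch, the mask would be applied via `for name, p in model.named_parameters(): p.requires_grad = mask[name]`, passing only trainable parameters to the optimizer.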

Multi-Scale Training: Satellite imagery spans multiple resolutions and scales. Effective fine-tuning incorporates this variety, ensuring models perform well from individual building detection to landscape-level classification.
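A minimal sketch of scale sampling for multi-scale training, assuming imagery with a known native ground sample distance (GSD) and a fixed model input size. Each draw picks a target GSD and returns the square crop, in native pixels, that a resampling step (not shown) would resize to the model input. The 0.5 m native resolution, 224-pixel input, and list of target GSDs are illustrative values.

```python
import random
from typing import List, Tuple


def sample_crop(native_gsd: float, input_px: int, target_gsds: List[float],
                image_w: int, image_h: int,
                rng: random.Random) -> Tuple[float, Tuple[int, int, int, int]]:
    """Pick a target GSD (metres per pixel) and a square crop (x, y, w, h) in
    native pixels that covers input_px pixels at that GSD once resized."""
    feasible = [g for g in target_gsds
                if round(input_px * g / native_gsd) <= min(image_w, image_h)]
    if not feasible:
        raise ValueError("image too small for every requested scale")
    gsd = rng.choice(feasible)
    size = round(input_px * gsd / native_gsd)  # 224 px at 2 m from 0.5 m data -> 896 px
    x = rng.randrange(0, image_w - size + 1)
    y = rng.randrange(0, image_h - size + 1)
    return gsd, (x, y, size, size)


rng = random.Random(42)
for _ in range(3):
    print(sample_crop(0.5, 224, [0.5, 1.0, 2.0, 4.0], 4096, 4096, rng))
```

Sampling the scale per batch, rather than per epoch, keeps every mini-batch stream exposed to both building-level and landscape-level context.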

Domain-Specific Augmentation: Beyond the geometric and color augmentations used for natural images, satellite imagery benefits from spectral augmentation, temporal variation, and geographic diversity in training samples.
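Spectral augmentation can be as simple as perturbing each band independently. The sketch below applies a random per-band gain and offset and occasionally zeroes a whole band to mimic a missing channel; it assumes reflectance already scaled to [0, 1], represents bands as flat Python lists for self-containment, and uses illustrative jitter ranges. Temporal and geographic variation are not covered here.

```python
import random
from typing import List, Tuple

Band = List[float]  # one spectral band, flattened, reflectance scaled to [0, 1]


def spectral_jitter(bands: List[Band], rng: random.Random,
                    gain: Tuple[float, float] = (0.9, 1.1),
                    offset: Tuple[float, float] = (-0.02, 0.02),
                    drop_prob: float = 0.1) -> List[Band]:
    """Apply an independent random gain and offset to each band, and occasionally
    zero a whole band to mimic a missing or corrupted channel."""
    out = []
    for band in bands:
        if rng.random() < drop_prob:
            out.append([0.0] * len(band))
            continue
        g, o = rng.uniform(*gain), rng.uniform(*offset)
        out.append([min(1.0, max(0.0, v * g + o)) for v in band])
    return out


# Hypothetical 4-band chip (blue, green, red, near-infrared), 3 pixels per band
chip = [[0.05, 0.06, 0.07], [0.08, 0.09, 0.10], [0.06, 0.07, 0.05], [0.30, 0.35, 0.40]]
print(spectral_jitter(chip, random.Random(7)))
```

Because the gain differs per band, ratios such as vegetation indices shift slightly too, which encourages robustness to sensor calibration and atmospheric differences.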

Quality Assurance in the Modern Pipeline

Multi-Dimensional Validation

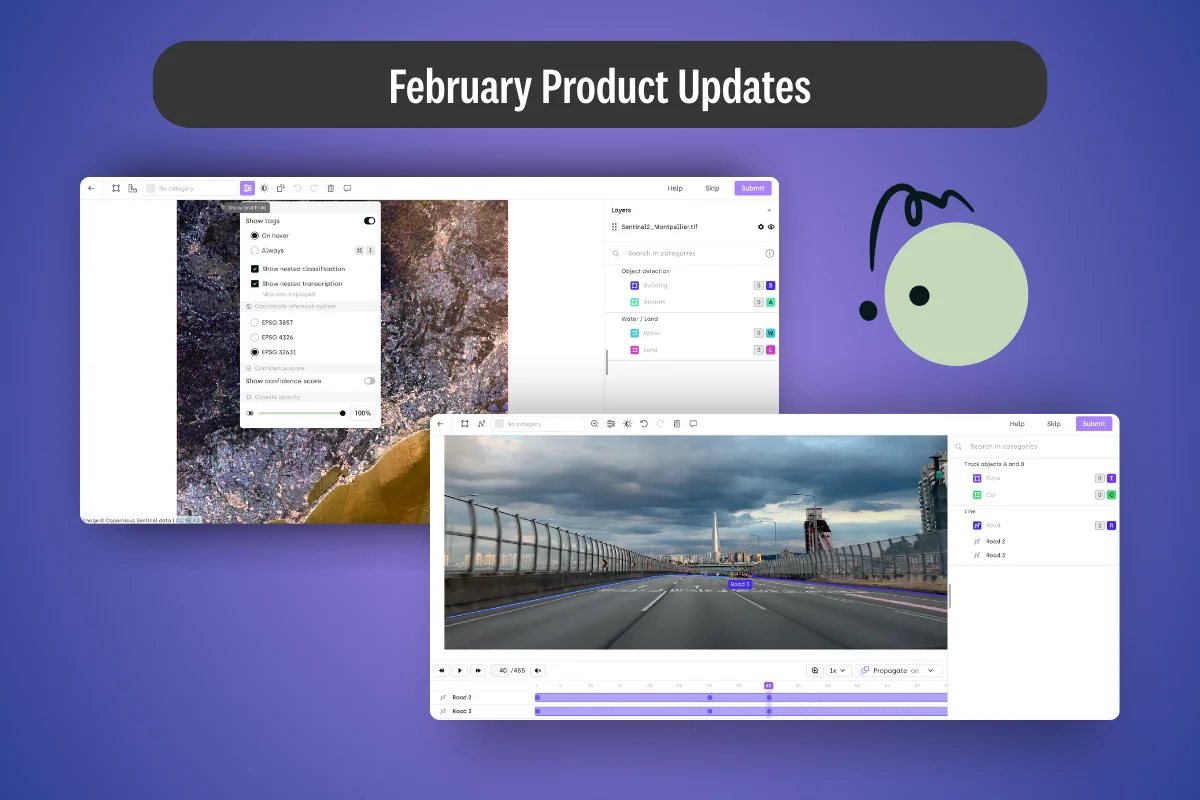

Modern pipelines implement sophisticated validation strategies that go beyond simple accuracy metrics. Geographic cross-validation ensures models generalize across regions, while temporal validation confirms robustness to seasonal variations. This multi-dimensional approach to validation reflects the complex nature of earth observation applications.
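Geographic cross-validation is commonly implemented with spatial blocking: samples are grouped by the grid cell they fall in, and whole cells are assigned to folds, so spatially correlated neighbors never straddle a train/test split. A minimal sketch, assuming projected coordinates in meters; the 10 km block size and fold count are illustrative.

```python
import math
import random
from typing import List, Sequence, Tuple


def spatial_block_folds(coords: Sequence[Tuple[float, float]], block_size: float,
                        n_folds: int, seed: int = 0) -> List[int]:
    """Assign each sample to a fold by the square grid block it falls in."""
    blocks = [(math.floor(x / block_size), math.floor(y / block_size)) for x, y in coords]
    unique = sorted(set(blocks))
    random.Random(seed).shuffle(unique)  # randomize which blocks share a fold
    fold_of = {b: i % n_folds for i, b in enumerate(unique)}
    return [fold_of[b] for b in blocks]


def fold_split(folds: List[int], k: int) -> Tuple[List[int], List[int]]:
    """Training and test sample indices for fold k."""
    train = [i for i, f in enumerate(folds) if f != k]
    test = [i for i, f in enumerate(folds) if f == k]
    return train, test


# 100 samples on a 5 km grid -> 25 blocks of 10 km, spread over 5 folds
coords = [(x * 5000.0 + 10, y * 5000.0 + 10) for x in range(10) for y in range(10)]
folds = spatial_block_folds(coords, block_size=10000.0, n_folds=5)
train, test = fold_split(folds, 0)
print(len(train), len(test))
```

Temporal validation follows the same pattern, with acquisition dates (for example, one season per fold) taking the place of grid blocks.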

The stakes for accuracy in geospatial applications are particularly high, with many mission-critical systems requiring precision rates above 95%. As Enabled Intelligence demonstrates, achieving these standards requires systematic approaches that track diverse quality metrics across object detection accuracy, classification consistency, and spatial precision.

Systematic Review Architectures

Leading organizations have developed structured approaches to quality assurance that operate across multiple validation layers. These systems begin with parallel annotation strategies, where independent teams label identical imagery to surface areas of uncertainty or disagreement before they propagate through the pipeline.

The most effective workflows then progress through graduated review stages. Initial automated validation uses computational metrics to identify discrepancies in boundary alignment and object counts, efficiently flagging potential issues without immediate human intervention. Subsequent expert review stages bring domain specialists to bear on ambiguous cases, applying contextual knowledge to resolve conflicts and establish definitive classifications.
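The automated validation stage can be sketched for bounding boxes: pair the boxes from two independent annotation passes by intersection over union (IoU), then flag count mismatches, unmatched boxes, and poorly aligned pairs for expert review. This uses greedy matching as a simplification of optimal (Hungarian) assignment; the 0.7 threshold is an illustrative assumption, and polygon labels would need polygon IoU instead.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def compare_annotations(team_a: List[Box], team_b: List[Box],
                        iou_threshold: float = 0.7) -> Dict:
    """Greedily pair boxes from two annotation passes and report discrepancies."""
    candidates = sorted(((iou(a, b), i, j) for i, a in enumerate(team_a)
                         for j, b in enumerate(team_b)), reverse=True)
    used_a, used_b, weak = set(), set(), []
    for score, i, j in candidates:
        if score <= 0:
            break  # remaining pairs do not overlap at all
        if i in used_a or j in used_b:
            continue
        used_a.add(i)
        used_b.add(j)
        if score < iou_threshold:
            weak.append((i, j, round(score, 3)))
    report = {
        "count_diff": len(team_a) - len(team_b),
        "unmatched_a": [i for i in range(len(team_a)) if i not in used_a],
        "unmatched_b": [j for j in range(len(team_b)) if j not in used_b],
        "weak_pairs": weak,
    }
    report["needs_review"] = bool(report["unmatched_a"] or report["unmatched_b"] or weak)
    return report


team_a = [(0, 0, 10, 10), (20, 20, 30, 30), (50, 50, 60, 60)]
team_b = [(0, 0, 10, 10), (22, 22, 32, 32)]
print(compare_annotations(team_a, team_b))
```

Only images whose report has `needs_review` set would move on to the expert review stage.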

Final validation typically employs statistical sampling approaches, where representative portions of completed annotations undergo independent verification. This layered approach ensures systematic errors are caught before they compromise dataset integrity, while optimizing the balance between thoroughness and resource efficiency.
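How large a sample to verify can be estimated with the standard formula for a proportion, n0 = z^2 * p * (1 - p) / e^2, followed by a finite population correction. A minimal sketch, assuming simple random sampling; the expected 5% error rate, the 2-percentage-point margin, and z = 1.96 (about 95% confidence) are illustrative choices.

```python
import math
import random
from typing import List, Sequence


def qa_sample_size(population: int, margin: float = 0.02, z: float = 1.96,
                   expected_error: float = 0.05) -> int:
    """Annotations to verify so the estimated error rate lies within
    +/- margin at the confidence level implied by z."""
    n0 = z ** 2 * expected_error * (1 - expected_error) / margin ** 2
    n = n0 / (1 + (n0 - 1) / population)  # finite population correction
    return min(population, math.ceil(n))


def draw_qa_sample(annotation_ids: Sequence[str], n: int, seed: int = 0) -> List[str]:
    """Simple random sample without replacement, reproducible via the seed."""
    return random.Random(seed).sample(list(annotation_ids), n)


ids = [f"ann-{i}" for i in range(50_000)]
n = qa_sample_size(len(ids))
print(n)  # 453
print(draw_qa_sample(ids, n)[:3])
```

Stratifying the sample by region or class is a natural extension when errors are expected to cluster.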

We integrate human expertise throughout this process. While AI systems excel at routine tasks, human judgment remains essential for edge cases, quality control, and ensuring that model outputs align with domain knowledge. Our platform facilitates this through collaborative review workflows that leverage both human and machine intelligence.

Continuous Learning and Adaptation

Production satellite imagery systems must adapt to changing conditions: new sensors, seasonal variations, and evolving land use patterns. Modern pipelines incorporate feedback loops that enable continuous model improvement without full retraining. This adaptive capacity is essential for maintaining performance in dynamic earth observation applications.
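A minimal sketch of such a feedback loop, assuming production predictions are periodically spot-checked by reviewers. The monitor tracks agreement over a sliding window and buffers corrections, signaling an update when agreement drops or enough corrections accumulate. `FeedbackMonitor` and its thresholds are hypothetical, and what an "update" means (re-fitting a task head, an adapter, or a fuller fine-tune) is left open.

```python
from collections import deque
from typing import Hashable, List, Tuple


class FeedbackMonitor:
    """Track reviewer verdicts on model predictions and decide when to
    trigger an incremental model update."""

    def __init__(self, window: int = 200, min_agreement: float = 0.9, batch_size: int = 500):
        self.window = window
        self.min_agreement = min_agreement
        self.batch_size = batch_size
        self.recent = deque(maxlen=window)  # True where the reviewer agreed
        self.corrections: List[Tuple[Hashable, str]] = []

    def record(self, sample_id: Hashable, predicted: str, reviewed: str) -> None:
        agreed = predicted == reviewed
        self.recent.append(agreed)
        if not agreed:
            self.corrections.append((sample_id, reviewed))

    def agreement(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else 1.0

    def should_update(self) -> bool:
        """Update on drift over a full window, or once enough corrections pile up."""
        drifted = len(self.recent) == self.window and self.agreement() < self.min_agreement
        return drifted or len(self.corrections) >= self.batch_size

    def drain(self) -> List[Tuple[Hashable, str]]:
        """Hand accumulated corrections to the training job and reset."""
        batch, self.corrections = self.corrections, []
        self.recent.clear()
        return batch


monitor = FeedbackMonitor(window=10, min_agreement=0.8, batch_size=100)
for i in range(10):
    monitor.record(i, "cropland", "cropland" if i < 7 else "built-up")
print(monitor.agreement(), monitor.should_update())
```

Triggering on either condition covers both gradual drift (new sensors, seasons) and the steady accumulation of useful labels.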

The Transformation in Project Timelines

The cumulative effect of these innovations is a dramatic compression of project timelines. What traditionally required months of effort can now be accomplished in weeks. This acceleration isn't simply about working faster—it's about fundamental changes in how work is organized and executed.

Consider a typical land use classification project. Traditional approaches might involve months of manual annotation, followed by weeks of model training and validation. Modern pipelines compress this through parallel processing: AI-assisted annotation proceeds concurrently with model development, validation happens continuously rather than as a final step, and deployment can begin with partial coverage and expand progressively.

Implications for Earth Observation

Democratization of Satellite Analysis

The modern pipeline makes sophisticated earth observation accessible to a broader range of organizations. Previously, only well-resourced institutions could afford the time and expertise required for satellite imagery projects. Today, the combination of foundation models, AI-assisted annotation, and integrated platforms enables smaller teams to tackle ambitious earth observation challenges.

New Applications and Possibilities

The efficiency gains from modern pipelines enable applications previously considered impractical. Real-time deforestation monitoring, continuous urban growth tracking, and rapid disaster response mapping are now feasible at global scales. The Meta forest mapping project demonstrates this potential: creating a global 1-meter resolution canopy height map would have been inconceivable with traditional methods.

The Evolving Role of Domain Experts

Rather than replacing human expertise, modern pipelines amplify it. Domain experts spend less time on routine annotation and more time on high-value activities: defining classification schemas, validating model outputs, and interpreting results. This shift enables deeper scientific insights and more sophisticated applications of earth observation data.

Future Directions

Multimodal Integration

The integration of multiple data modalities—optical imagery, synthetic aperture radar, elevation models—promises richer understanding of earth systems. Modern pipelines are beginning to incorporate these diverse data sources, moving toward comprehensive earth observation platforms.

Automated Quality Assurance

Advances in anomaly detection and uncertainty quantification will enable more sophisticated automated quality control. Future pipelines may automatically identify areas requiring human review, optimizing the allocation of expert attention.
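Predictive entropy is one simple uncertainty measure that could drive this kind of routing; deep ensembles or Monte Carlo dropout are common alternatives. The sketch assumes a classifier that outputs per-tile class probabilities, normalizes entropy to [0, 1], and returns the tiles above a threshold, most uncertain first. The tile ids, probabilities, and 0.5 threshold are illustrative.

```python
import math
from typing import Dict, List, Sequence


def normalized_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy divided by its maximum, log(K) (needs K >= 2 classes):
    0 means a certain prediction, 1 a uniform one."""
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))


def route_for_review(predictions: Dict[str, Sequence[float]],
                     threshold: float = 0.5) -> List[str]:
    """Return ids whose uncertainty meets the threshold, most uncertain first."""
    scored = [(normalized_entropy(p), tile_id) for tile_id, p in predictions.items()]
    return [tile_id for score, tile_id in sorted(scored, reverse=True) if score >= threshold]


predictions = {
    "tile-001": [0.98, 0.01, 0.01],  # confident
    "tile-002": [0.40, 0.35, 0.25],  # ambiguous
    "tile-003": [0.34, 0.33, 0.33],  # near uniform
}
print(route_for_review(predictions))  # ['tile-003', 'tile-002']
```

Ranking rather than only thresholding lets a review team with fixed capacity work down the list from the most ambiguous tiles.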

Conclusion

The modern satellite imagery pipeline represents more than technological advancement—it's a fundamental rethinking of how we transform earth observation data into actionable insights. By combining intelligent data processing, native AI integration through tools like SAM 2, and the power of foundation models like DINOv2, organizations can now undertake earth observation projects that were previously impossible.

The key insight is that these components work synergistically. Foundation models provide the baseline intelligence, AI-assisted annotation tools make data preparation feasible at scale, and modern training approaches ensure models can be rapidly adapted to specific needs. Together, they create a pipeline that is not just faster, but fundamentally more capable.

As we look forward, the continued evolution of these technologies promises to make global-scale earth observation not just possible, but routine. The implications for environmental monitoring, urban planning, agriculture, and countless other fields are profound. We stand at the threshold of an era where understanding our planet through satellite imagery becomes as accessible as querying a database—a transformation that will reshape how we monitor, understand, and protect Earth's systems.

Start building geospatial models more efficiently

Ready to implement modern satellite imagery pipelines? Explore how our platform, with native SAM 2 integration and support for foundation model workflows, can transform your earth observation capabilities. Learn more about our geospatial annotation solutions.

Or talk to our team directly for a demonstration tailored to your needs.
