Llama 2 is an advanced open-source large language model (LLM) crafted by Meta, the technological innovator. This second-generation model is a dynamic tool for constructing cutting-edge chatbots akin to ChatGPT or Google Bard. Through extensive training on an immense corpus of data, Llama 2 has honed the ability to produce coherent and exceptionally natural-sounding text outputs.

Llama 2 stands as the evolutionary successor to its predecessor, Llama 1, which made its debut earlier in the year. Llama 1 was known for its exclusivity, being "closely guarded" and accessible only upon request.

This newly unveiled Llama 2 model marks a transformative shift, as it is made available to both research and commercial spheres, a joint effort between Meta and Microsoft. The applications crafted using Llama 2 will soon extend their reach beyond Windows PCs, seamlessly integrating with phones and laptops fueled by Qualcomm’s Snapdragon SoCs.

Noteworthy advancements distinguish Llama 2, with its training encompassing a remarkable 40% more data and a doubled context length compared to the original model. This leap translates into an unparalleled language model, delivering responses that emulate human-like precision and depth. Llama 2's achievements are further underscored by its top-ranking performance on the OpenLLM Leaderboard, cementing its position as a frontrunner in the realm of language generation technology.

.webp)

Understanding Llama 2

Quality is All You Need

In pursuing enhancing Llama 2, the paper's "Quality is All You Need" section underscores the paramount importance of data quality. Recognizing the limitations of third-party SFT data for guiding dialogue-style instruction, the focus shifted to meticulously curating a select set of high-quality examples. By prioritizing their vendor-based annotations over third-party sources, Llama 2s performance significantly improved.

This approach aligns with a similar study by Zhou et al. (2023), emphasizing that a concise, clean instruction-tuning dataset can yield exceptional results. Llama 2's success was evident through its attainment of remarkable quality with tens of thousands of SFT annotations, reaching a high standard with 27,540 annotations.

Furthermore, the paper highlights the influence of annotation platforms and vendors on model performance, underscoring the ongoing need for thorough data checks. The team rigorously validated data quality by comparing human annotations with model-generated outputs, revealing the model's competitive prowess in generating high-quality text.

Ultimately, Llama 2's journey showcases data quality's pivotal role in achieving language model excellence, leading to an improved understanding of dialogue-style instructions and heightened performance.

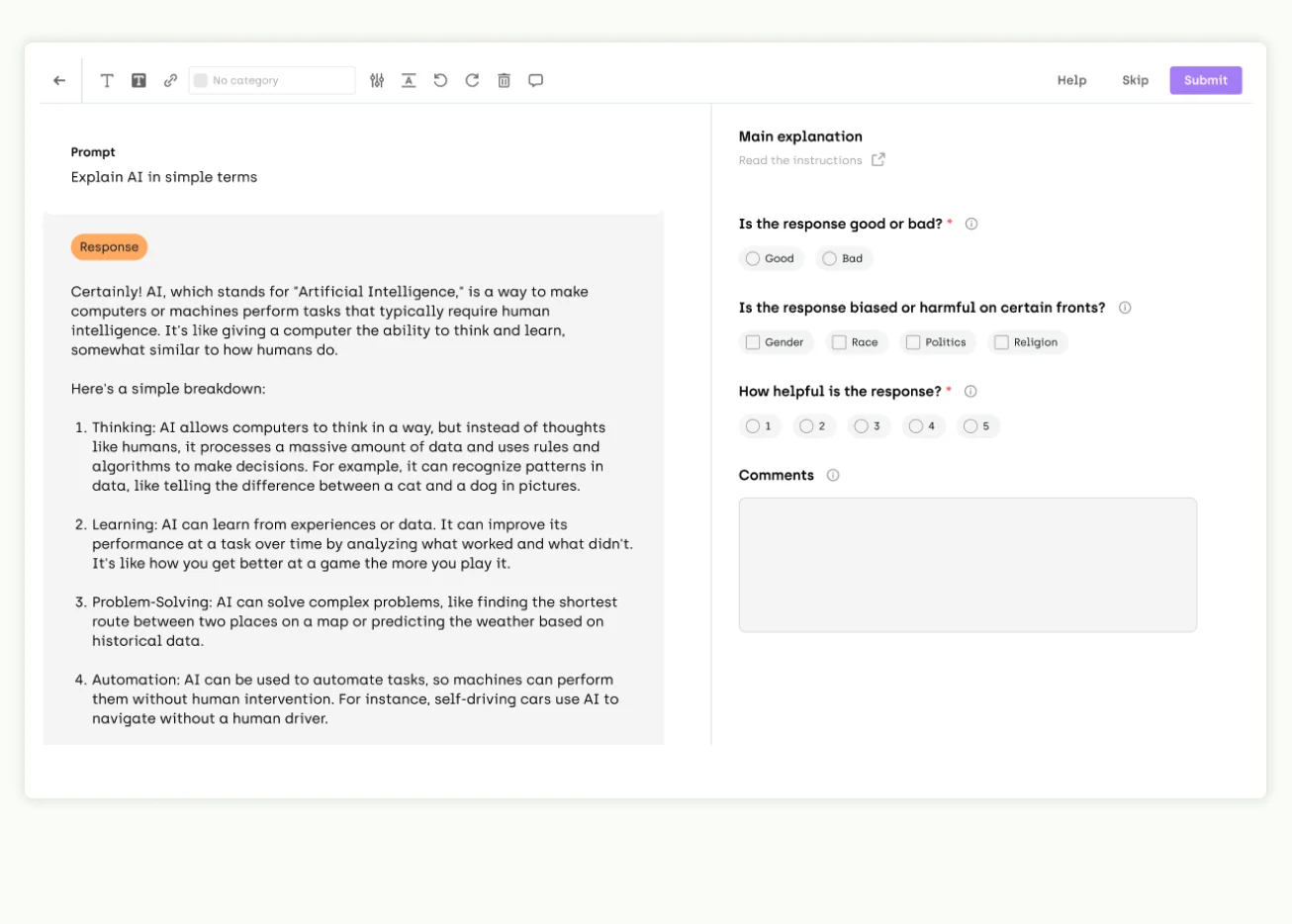

In addition, Llama 2 employs Reinforcement Learning from Human Feedback (RLHF) to fine-tune its behavior by human preferences and instructions. This iterative process enhances the model's alignment with desired outcomes.

During RLHF, human annotators rank two model-generated outputs based on their preferences, yielding a dataset of empirical human choices. This data is then harnessed to train a reward model, which learns the intricate patterns of human preferences. As a result, the reward model becomes adept at autonomously making preference-based decisions, refining Llama 2's text generation to match human expectations better. This iterative approach enriches the model's capacity to produce language that resonates more closely with human sensibilities.

.webp)

Using Llama LLM for Data Labeling Tasks

Llama presents a groundbreaking opportunity for pre-annotations and automated data labeling, revolutionizing data annotation workflows. Llama's advanced language understanding empowers it to swiftly propose initial annotations, offering labels or tags for diverse data types. This preliminary annotation phase significantly expedites subsequent human labeling efforts, enhancing overall efficiency.

Moreover, Llama's remarkable comprehension of context and semantics positions it as a potent tool for automating data labeling tasks. After meticulous training, Llama internalizes labeling criteria and mimics human judgment, autonomously assigning labels to unlabeled data. This automation not only accelerates the labeling process but also optimizes resource allocation.

Fine-tuning Llama for specific domains is imperative for precision, while human oversight remains vital to address complexities and ensure accuracy. The integration of Llama for pre-annotations and automated labeling presents industries with an unprecedented advantage, streamlining processes and fostering innovation through the synergy of AI and human expertise.

Leveraging Kili's platform at this stage can significantly enhance procedural expediency, as it furnishes a user interface (UI) tailored for the meticulous fine-tuning of the model on a limited set of labeled data. After this refinement phase, the platform seamlessly generates pre-annotations for the entirety of the dataset. This strategic utilization of Kili's platform streamlines and accelerates the overall workflow, presenting an efficacious means to optimize data annotation processes.

The simplest way to build high-quality LLM fine-tuning datasets

Our professional workforce is ready to start your data labeling project in 48 hours. We guarantee high-quality datasets delivered fast.

Llama and other Large Language Models with Kili Technology

The confluence of Large Language Models (LLMs) like Llama and Kili Technology brings forth a potent synergy, yielding remarkable advancements in data labeling tasks and creating high-quality datasets. This collaboration harnesses the strengths of cutting-edge AI capabilities and innovative data annotation tools, culminating in a transformative impact across industries.

With their profound language understanding, LLMs play a pivotal role in generating pre-annotations and automating data labeling processes. LLMs accelerate the labeling workflow by swiftly proposing initial annotations and autonomously assigning labels to unlabeled data, augmenting operational efficiency and resource optimization.

In parallel, Kili Technology provides a sophisticated platform that empowers human annotators to fine-tune LLMs on a targeted subset of labeled data. This iterative process refines the model's responses, ensuring alignment with specific domain contexts and nuances. Subsequently, Kili's platform seamlessly generates pre-annotations for the complete dataset, significantly reducing the manual effort required. For example:

- Text Classification

- LLMs and Kili's platform facilitate rapid and accurate text classification. Pre-annotations generated by LLMs expedite the process, while Kili empowers human annotators to fine-tune and validate these suggestions. This synergy ensures precise labeling and accelerates data preparation, culminating in a high-quality dataset conducive to robust text classification models.

- Sentiment Analysis

- In sentiment analysis, LLMs' contextual awareness can pre-annotate text with sentiment labels. Kili's platform aids in validating and refining these annotations through human expertise. The result is a sentiment-labeled dataset of exceptional quality, enabling sentiment analysis models to capture nuances and intricacies more accurately.

- Named Entity Recognition (NER)

- LLMs excel at recognizing entities generating pre-annotations. Kili's platform empowers human annotators to enhance and validate entity annotations, leading to a meticulously curated dataset. This symbiotic approach ensures precise NER model training, accommodating diverse entity types and variations.

- Question Answering

- LLMs' language comprehension generates initial question-answer pairs, further refined and validated by Kili's tools. This collaboration results in a dataset characterized by well-structured, accurate QA pairs. The synergy enhances model training, enabling question-answering systems to provide detailed, relevant responses.

- Data Augmentation

- In data augmentation, LLMs automatically generate diverse paraphrased versions of text, while Kili's platform ensures the authenticity and quality of these variations. The fusion creates an augmented dataset enriched with varied perspectives, enhancing model generalization and performance.

Across these contexts, the LLM-Kili alliance redefines data labeling. It optimizes resources, accelerates workflows, and fosters unparalleled dataset quality, creating more precise, robust, and reliable AI models across diverse applications.

Use Kili Technology's SDK to combine LLM-powered automations with high-quality human reviews

Kili Technology uses a powerful and handy SDK so you can use LLMs to automate pre-annotations and upload them to the Kili Platform with ease.

Our machine learning experts have developed several recipes on GitHub using different LLMs to give you a headstart in combining the power of LLMs with Kili Technology. From text classification, to counterfactual data augmentation, and even fine-tuning LLMs for your domain-specific needs, Kili Technology provides you with the perfect foundation to put those LLMs to work.

Additionally, Kili Technology supports Open AI's GPT natively as a powerful co-pilot tool to generate pre-annotations with a single click on our UI.

How to fine-tune Llama 2 for your domain-specific needs

Now let's get back to Llama. Fine-tuning Llama 2 for domain-specific needs involves adapting the pre-trained model to perform tasks like sentiment analysis, text classification, or question-answering. This optimization process capitalizes on the Llama 2's pre-existing language knowledge, aiming to apply it effectively to the task at hand.

The process unfolds in these steps:

- Initialization: Begin by initializing Llama 2 with its pre-trained weights.

- Task-Specific Customization: Add a task-specific component to Llama 2, tailoring it to your specific objective.

- Dataset Training: Train Llama 2 on a task-specific dataset, fine-tuning the task-specific component and the core model.

- Validation: Evaluate the fine-tuned model's performance on a validation dataset.

- Iteration: Repeat steps 3 and 4 iteratively until the model reaches desired performance levels.

This approach capitalizes on the efficacy of fine-tuning, enabling Llama 2 to excel across diverse tasks by leveraging its foundational language understanding. This method has demonstrated impressive performance enhancement with minimal additional training, thus ensuring a versatile and high-performing model. For an in-depth tutorial, please refer to our comprehensive guide at this link.

Build high-quality LLM fine-tuning datasets

The best AI agents are built on the best datasets. See how our tool can help you build the best dataset to fine-tune an LLM.

Conclusion

In the dynamic landscape of data annotation and model optimization, the synergy between Large Language Models (LLMs) and Kili Technology, exemplified by the innovative Llama 2, emerges as a transformative force. This collaborative partnership redefines traditional data labeling approaches across diverse contexts, catalyzing the creation of high-quality datasets that underpin advanced AI applications.

The convergence of LLMs and Kili's platform introduces a streamlined workflow, wherein the fine-tuning of Llama 2 for domain-specific needs is a testament to this symbiotic process. Guided by meticulous steps, this approach empowers industries to expedite data annotation, enhance precision, and ultimately unleash the potential of their datasets. The result is a harmonious amalgamation of Llama 2's AI capabilities and human expertise, ushering in an era of data-driven innovation.

From text classification and sentiment analysis to named entity recognition and question-answering, the LLM-Kili-Llama 2 alliance optimizes resources, accelerates workflows, and elevates dataset quality. As industries navigate the evolving demands of the AI landscape, this strategic collaboration paves the way for refined models, informed decisions, and pioneering advancements. The journey undertaken in this discourse underscores the significance of seamless human-AI collaboration, charting a course toward a future empowered by data excellence, Llama 2's prowess, and AI ingenuity.

.png)

_logo%201.svg)

.webp)