When ChatGPT was launched, the public saw the future of AI and large language models (LLMs). At a glance, ChatGPT looks like a regular chatbot, except that it converses in ways remarkably similar to how a human does. ChatGPT impresses machine learning engineers and the non-technical community alike by responding coherently and sensibly to questions and statements.

So, what's behind ChatGPT's success?

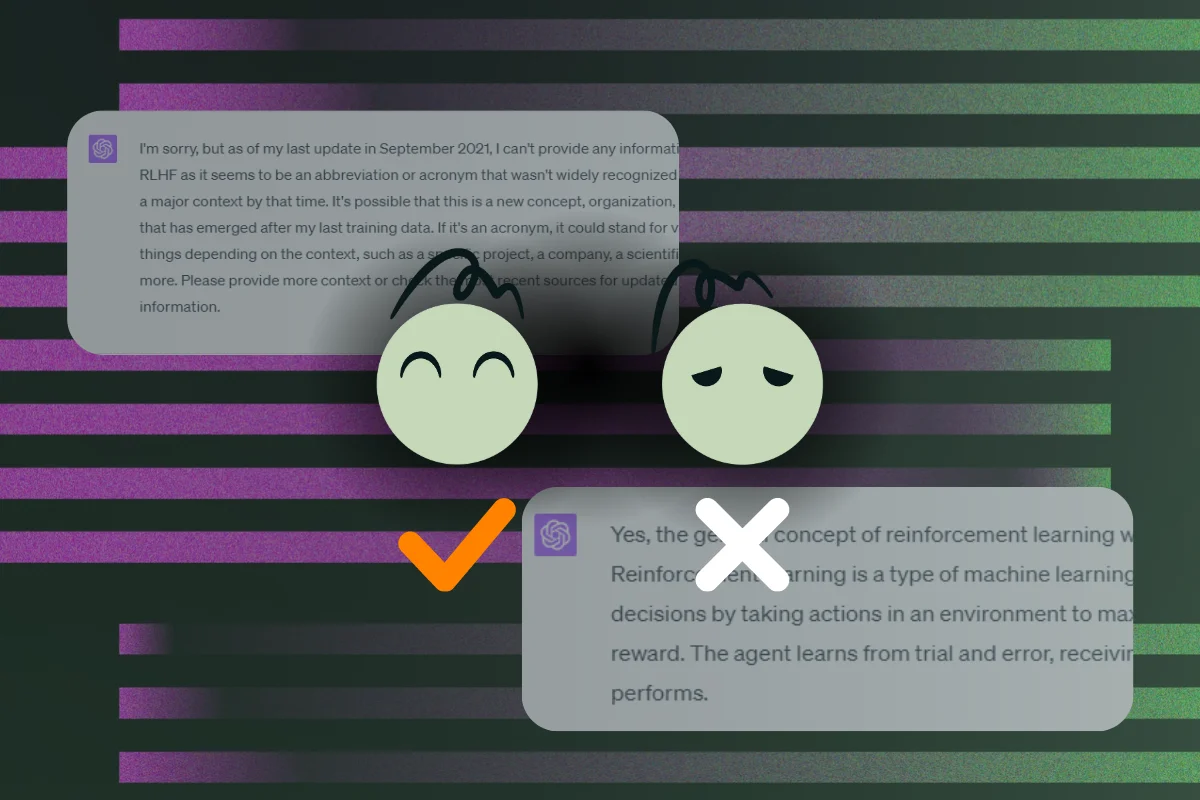

Much of the answer lies in reinforcement learning from human feedback, or RLHF. When developing ChatGPT, OpenAI applied RLHF to the GPT model to produce the responses users want. Without it, ChatGPT might not be able to answer more complex questions or adapt to human preferences the way it does today.

In this article, we’ll explain how RLHF works, its importance in fine-tuning large language models, and the challenges machine learning teams should know when applying the technique.

Understanding RLHF and Its Process

Reinforcement learning from human feedback (RLHF) is an approach to training and fine-tuning a large language model so that it follows human instructions correctly. With RLHF, the LLM can understand the user’s intention even if it is not described explicitly. RLHF enables the model to interpret the instruction correctly and learn from previous conversations.

Why RLHF matters for LLMs

To understand RLHF better, it’s essential to be aware of the fundamental characteristics of a large language model. Large language models are designed to predict the next token or word to complete a sentence. For example, you provide the phrase “The fox jumped off the tree...” to a GPT model, and it completes the sentence with “and landed on its feet.”
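To make next-word prediction concrete, here is a minimal sketch that uses a toy bigram counter in place of a real neural network. The corpus and the function names `train_bigram` and `complete` are assumptions made for illustration. An actual LLM predicts sub-word tokens with a far richer model, but the completion loop has the same shape.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def complete(follows, prompt, max_new_words=5):
    """Greedily append the most frequent next word until none is known."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Tiny illustrative corpus (an assumption, not real training data).
corpus = [
    "the fox jumped off the tree and landed on its feet",
    "the cat jumped off the wall and landed on its feet",
]
model = train_bigram(corpus)
print(complete(model, "The fox jumped off the tree"))
# the fox jumped off the tree and landed on its feet
```

The model only continues text. Nothing in it represents the idea of an instruction, which is the gap RLHF is meant to close.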

However, the LLM is more helpful if it can understand simple instructions like “Create a short story about a fox and a tree.” A model that has only been pre-trained struggles to interpret the purpose of such a statement. As a result, the model might provide ambiguous responses, such as describing ways to write a creative story instead of telling the story.

RLHF enables an LLM to expand its capabilities beyond being auto-completion software. It involves creating a reward system, augmented by human feedback, to teach the foundational model which response is more aligned with human preferences. Simply put, RLHF steers an LLM toward the responses that humans judge to be better.

RLHF vs. Traditional Reinforcement Learning

Traditional reinforcement learning takes place inside a closed environment. The pre-trained language model interacts with a specific environment to optimize its policy through a reward system. Here, the model acts as a reinforcement learning (RL) agent, attempting to maximize its reward through trial and error.
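The trial-and-error loop described above can be sketched with a small multi-armed bandit. This is a generic illustration rather than how any particular LLM is trained. The reward values, the epsilon-greedy strategy, and the name `run_bandit` are all assumptions chosen to keep the example short.

```python
import random

def run_bandit(true_rewards, steps=500, epsilon=0.1, seed=0):
    """Epsilon-greedy agent that learns action values from noisy rewards."""
    rng = random.Random(seed)
    n = len(true_rewards)
    counts = [0] * n
    estimates = [0.0] * n
    for step in range(steps):
        if step < n:
            action = step                       # try every action once
        elif rng.random() < epsilon:
            action = rng.randrange(n)           # explore a random action
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit
        # The environment answers with a noisy reward for the chosen action.
        reward = true_rewards[action] + rng.uniform(-0.1, 0.1)
        counts[action] += 1
        # Incremental running average of the rewards seen for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates

estimates = run_bandit([0.2, 0.5, 0.9])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_action)  # 2
```

The reward here comes from a fixed environment. In RLHF, the reward signal comes from a model trained on human preferences instead.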

Reinforcement learning from human feedback enhances traditional reinforcement learning methods by incorporating human feedback into the reward model. With RLHF, the RL agent speeds up its overall training process by applying additional feedback from domain experts. It combines AI-generated feedback with guidance and demonstrations from humans. As such, the RL agent can perform consistently and relevantly in different real-life environments.

How RLHF works

RLHF is an advanced machine learning technique that augments the self-supervised learning that large models go through. It is not viable as a standalone model learning method because of the potentially costly involvement of human trainers. Instead, AI companies use RLHF to fine-tune a pre-trained model.

Here’s how it works.

Step 1 - Start with a pre-trained model.

First, you identify and choose a pre-trained model. For example, ChatGPT was developed from an existing GPT model. Such models have undergone self-supervised learning and can predict and form sentences.

Step 2 - Supervised fine-tuning

Then, fine-tune the pre-trained model to enhance its capabilities further. At this stage, human annotators prepare sets of prompts and results to train the model to recognize specific patterns and align its predictive response. For example, annotators guide the model toward a desired output with the following training data.

Prompt: Write a simple explanation about artificial intelligence.

Response: Artificial intelligence is a science that …
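One way to picture this training data is as a single sequence with a mask that marks which tokens the model is trained to predict. The sketch below assumes whitespace tokenization and a helper named `build_sft_example`, both hypothetical. Masking the prompt tokens is a common convention, not something this article's sources prescribe.

```python
def build_sft_example(prompt, response):
    """Join a prompt and its reference response into one training sequence.

    Returns the tokens plus a mask that is 1 for response tokens (the model
    is trained to predict these) and 0 for prompt tokens (context only).
    """
    prompt_tokens = f"Prompt: {prompt}".split()
    response_tokens = f"Response: {response}".split()
    tokens = prompt_tokens + response_tokens
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask

tokens, loss_mask = build_sft_example(
    "Write a simple explanation about artificial intelligence.",
    "Artificial intelligence is a science that ...",  # truncated as in the article
)
for token, flag in zip(tokens, loss_mask):
    print(flag, token)
```

Real pipelines use the model's own tokenizer and chat template, but the pairing of context tokens with target tokens is the same idea.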

Step 3 - Create a reward model

A reward model is a language model whose job is to score the output of the language model being trained. The idea is for the reward model to evaluate the foundational model’s output and return a scalar reward signal. Then, the main LLM uses the reward signal to optimize its parameters.

To train the reward model, human annotators first create comparison data from one or several LLMs. They generate several responses for each prompt and rank them according to their preference. Note that this process is subjective and is influenced by how humans perceive the results generated by the language model.
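A ranking over several responses is often expanded into pairwise comparisons before training. The sketch below shows one way to do that. The record layout with `prompt`, `chosen`, and `rejected` keys is an assumed convention, and `ranking_to_pairs` is a hypothetical helper.

```python
from itertools import combinations

def ranking_to_pairs(prompt, ranked_responses):
    """Expand a best-to-worst ranking into (chosen, rejected) comparisons."""
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        for better, worse in combinations(ranked_responses, 2)
    ]

# An annotator's ranking, best first (illustrative data).
ranked = [
    "Dear Hiring Manager, I am writing to request an interview.",
    "You should send an email to the hiring manager.",
    "- Call to follow up.",
]
pairs = ranking_to_pairs("Write an email requesting an interview.", ranked)
print(len(pairs))  # 3
```

Ranking K responses yields K * (K - 1) / 2 comparisons, so a single annotation pass produces several training examples.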

With the ranked datasets, the reward model learns to produce a scalar signal that represents the relevance of the generated response based on human preference. After sufficient training, the reward model can automatically rank the RL agent’s output without human intervention.
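The following sketch shows how a reward model can learn a scalar score from comparisons. It uses a pairwise logistic loss, a common choice for preference data, on a two-feature linear scorer. The features, the learning rate, and all function names are assumptions for illustration. A real reward model is a neural network that reads the full prompt and response.

```python
import math

def features(response):
    """Hypothetical hand-made features standing in for a neural network."""
    words = response.lower().split()
    return [
        1.0 if "dear" in words else 0.0,                   # email greeting
        1.0 if response.strip().startswith("-") else 0.0,  # list item
    ]

def reward(weights, response):
    """Scalar score: weighted sum of the response's features."""
    return sum(w * x for w, x in zip(weights, features(response)))

def pairwise_loss(weights, chosen, rejected):
    """-log(sigmoid(r_chosen - r_rejected)): small when chosen scores higher."""
    margin = reward(weights, chosen) - reward(weights, rejected)
    return math.log(1.0 + math.exp(-margin))

def train(pairs, steps=200, lr=0.5):
    """Plain gradient descent on the pairwise loss."""
    weights = [0.0, 0.0]
    for _ in range(steps):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # Derivative of the loss with respect to the margin.
            grad_scale = -1.0 / (1.0 + math.exp(margin))
            fc, fr = features(chosen), features(rejected)
            for i in range(len(weights)):
                weights[i] -= lr * grad_scale * (fc[i] - fr[i])
    return weights

chosen = "Dear Hiring Manager, I am writing to request an interview."
rejected = "- Call to follow up."
weights = train([(chosen, rejected)])
print(reward(weights, chosen) > reward(weights, rejected))  # True
```

Once trained, `reward` can score new responses on its own, which is what lets the later RL stage run without a human in every iteration.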

Step 4 - Train the RL policy with the reward model

Once the reward model is ready, we create a feedback loop to train and fine-tune the RL policy. The RL policy is a copy of the original LLM that takes the reward signal and adjusts its behavior. At the same time, it also sends its output to the reward model for evaluation.

Based on the reward score, the RL policy will generate responses that it deems preferable, according to the feedback from the human-trained reward system. This process goes on iteratively until the reinforcement learning agent achieves desirable performance.
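The feedback loop can be sketched on a deliberately tiny problem: a policy that chooses among three fixed candidate responses, updated with the exact policy gradient of the expected reward. Everything here is a simplifying assumption, including the candidates, the stand-in `reward_model`, and the learning rate. Production systems sample generated text and typically use an algorithm such as PPO.

```python
import math

def softmax(logits):
    """Turn raw preferences into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reward_model(response):
    """Stand-in scorer (assumption): prefers replies that look like an email."""
    return 1.0 if response.startswith("Dear") else 0.0

candidates = [
    "- Call to follow up.",
    "Dear Hiring Manager, I would like to request an interview.",
    "Use a calculator to get an accurate answer.",
]

logits = [0.0, 0.0, 0.0]   # the policy starts with no preference
learning_rate = 1.0

for step in range(100):
    probs = softmax(logits)
    rewards = [reward_model(c) for c in candidates]
    expected_reward = sum(p * r for p, r in zip(probs, rewards))
    # Exact gradient of the expected reward for a softmax policy:
    # d E[r] / d logit_i = p_i * (r_i - E[r])
    for i in range(len(logits)):
        logits[i] += learning_rate * probs[i] * (rewards[i] - expected_reward)

final_probs = softmax(logits)
print(max(range(len(candidates)), key=lambda i: final_probs[i]))  # 1
```

Each pass raises the probability of responses that score above the current average and lowers the rest, which is the iterative adjustment described above.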

RLHF in Action and Its Impact

Reinforcement learning from human feedback has far-reaching implications for natural language processing AI systems. It turns large language models that operate indiscriminately and aimlessly into purposeful, intelligent, and safer applications.

Case studies: Writing an email, solving math problems, generating code

Let’s see how an RLHF model compares against its non-RLHF counterpart in typical applications.

Email writing

A non-RLHF model has difficulty creating an email from a simple prompt. For example, you prompt the model with the instruction, “Write an email requesting an interview.”

Instead of writing an email, a non-RLHF model might mistake the prompt as the start of a to-do list and complete it with the following text.

- Call to follow up.

- Print out your resume.

- Confirm the interview details.

- Be on time.

Meanwhile, a fine-tuned RLHF model knows that the user expects an email they could send to the employer. It produces output like the following.

Dear [Hiring Manager's Name],

I hope this email finds you well. I am writing to express my keen interest in the [Position/Job Title] at [Company Name], as advertised on [Source of Job Posting]. After carefully reviewing the job description and requirements, I am confident that my skills and qualifications align well with the position. I believe I would be a valuable addition to your team.

Can you generate the correct response from a non-RLHF model? Probably so. But you’ll need to use complex prompts that non-technical users struggle to create. The additional complexity thus defeats the purpose of using a conversational AI model, which is meant to simplify everyday tasks.

Mathematical problems

Large language models are primarily engineered to perform linguistic tasks. But that doesn’t mean they are incapable of solving mathematical problems. The difference lies in whether an LLM is adequately trained, particularly with RLHF, for arithmetic use cases.

By default, a foundational LLM would perceive a mathematical question as a linguistic prompt.

For example, if you prompt a non-RLHF model with “What is 5 + 5?”, it will attempt to complete the phrase as a storyteller. Instead of solving the equation, it may respond with “Use a calculator to get an accurate answer.”

However, an RLHF model correctly understands the question as a request for a mathematical solution. It replies with a straightforward “10” instead of adding more linguistic context. To do that, the LLM needs input from mathematical experts during the RLHF stage.

Code generation

Large language models trained on large amounts of source code are capable of writing code. However, depending on how they are trained, LLMs might not return the output a programmer would expect.

For example, prompting a non-RLHF model with “Write a simple Java code that adds two integers” could return the following response.

“First, install a Java programming environment on your computer. Then, launch the code editor.”

Or, the model might switch into the storytelling mode, describing how coding is challenging for new learners, like:

“This might work:

public static int addIntegers(int num1, int num2) { return num1 + num2; }

But it’s better to test it out for errors.”

On the other hand, a well-trained RLHF model will provide a complete example of the requested code. It will also explain how the code works and the expected output when you run it.

How RLHF improves the performance of LLM

Large language models are neural networks capable of performing advanced natural language processing tasks. Unlike its predecessors, an LLM has a far larger number of parameters, which elevates its performance. Neural network parameters, such as the weights and biases in the hidden layers, enable the model to produce a more coherent and accurate response.

An LLM trains its parameters with supervised or self-supervised learning. The model adjusts its parameters with the aim of producing human-like outputs. However, LLMs often fall short in this respect. Despite having a large number of parameters, they behave inconsistently when given specific instructions.

As in the above examples, LLMs may not interpret a prompt’s intent perfectly unless it is expressed explicitly. This deviates from natural human language, where we use nuances or phrases that imply certain meanings. In simpler words, LLMs cannot be repurposed for other use cases unless they can behave predictably, safely, and consistently.

With the introduction of RLHF, LLM performance improved dramatically. OpenAI documented this when developing InstructGPT, the predecessor of ChatGPT. In human evaluations, outputs from an RLHF-trained model with 1.3 billion parameters were preferred over those from a foundational model with 175 billion parameters.

OpenAI’s early works with RLHF underscore the importance of human involvement when fine-tuning large language models. RLHF allows the model to better adapt to the wide distribution of linguistic data. Also, human input proves valuable in providing better signal quality and contextual relevance to the model.

As such, the model can provide better responses despite training with smaller datasets. While not perfect, an RLHF-trained model demonstrates notable improvement in these areas.

- The model becomes better at following instructions even without complex prompts.

- There is less toxicity and dangerous behavior when the model is fine-tuned with RLHF.

- Hallucination, a phenomenon where the model fabricates and produces incorrect facts, is also reduced in an RLHF-trained model.

- The model is more adaptable to use cases not extensively exposed during training.

How RLHF shifts LLMs from autocompletion to conversational understanding

The emergence of large language models is an essential milestone for developments in linguistic AI systems. They are deep-learning models trained on voluminous texts from various sources. By themselves, LLMs are capable of forming coherent and grammatically correct sentences from human input.

However, their use has largely been confined to the data science community. At best, LLMs help enable auto-completion features, such as Gmail’s Smart Compose. It generates phrases based on the words the user has typed, which the user can then insert into the email.

Still, LLMs have much to offer to the consumer space, provided they can bridge the gap in understanding human conversation. Unlike structured prompts, human conversation varies in style, nuances, cultural influences, and intent. These are elements that a pre-trained LLM like GPT would only comprehend with further fine-tuning.

Reinforcement learning from human feedback breaks the stereotypical autocompletion image of LLMs and unlocks possibilities for new applications. It gives birth to technologies like Conversational AI, where chatbots evolve to become more than a basic question-answer application.

Today, companies use RLHF to enable various downstream capabilities of pre-trained LLMs. We share several examples below.

- Ecommerce virtual assistant that recommends specific products based on queries like “Show me trendy winter wear for men”.

- Healthcare LLM systems, such as BioGPT-JSL, allow clinicians to summarize diagnoses and enquire about medical conditions by asking simple health-related questions.

- Financial institutions fine-tune LLMs to recommend relevant products to customers and identify insights from financial data. For example, BloombergGPT is trained on domain-specific financial data, making it one of the best-performing LLMs for the finance industry.

- In education, trained large language models allow learners to personalize their learning and get prompt assessments. Such AI models also reduce teachers’ burden by generating high-quality questions for classroom education.

The role of RLHF in the development of models like OpenAI’s ChatGPT and Anthropic’s Claude

ChatGPT grabbed the limelight as millions signed up for this seemingly human AI chatbot within days of its launch. But ChatGPT isn’t the only chatbot with such revolutionary capabilities. Anthropic’s Claude and DeepMind’s Sparrow are language models with capabilities similar to, if not better than, ChatGPT’s.

When tailoring these models for public use cases, OpenAI, Anthropic, and Google tried to solve two common problems plaguing pre-trained models. Specifically, the companies tried to make safer and more purposeful models. Even with supervised fine-tuning, these models fall short of meeting the criteria for public use.

For example, given a prompt like ‘How does meditation work?’, the model might attempt to complete it by providing more context, with ‘...for beginners’, instead of answering the question. The output is grammatically sound but not aligned with the prompt’s context. In natural conversation, the ideal response is an informative description of the meditation practice itself.

There is, however, a more worrying concern with pre-trained LLMs. Without human guidelines, they cannot discern between ethically correct and dangerous behavior. For example, the model might mislead users with made-up facts or use inappropriate, violent, or discriminatory language.

RLHF allows OpenAI and its competitors to resolve these challenging issues. In RLHF, the language model is presented with ranked data from multiple models. This helps the AI gradually build a contextual understanding and develop the proper reward functions. More importantly, human trainers impose specific guidelines that prevent such models from resorting to indiscriminate behaviors.

Anthropic took an interesting approach to further reduce the possibility of Claude generating harmful responses. It uses red-teaming, a process where human reviewers create adversarial prompts to elicit harmful behaviors and probe the model for weaknesses in its ethical compliance measures. Anthropic also developed Constitutional AI, a separate technique in which the model critiques and revises its own responses against a written set of principles. Together, these steps aim to create a safer AI environment.

Challenges, Limitations, and Future of RLHF

RLHF allows LLMs to break through from their rigid linguistic capabilities to solutions impacting industries. Yet, RLHF is not perfect, and there are various hurdles that machine learning engineers must overcome in the near future.

The complexity and cost of gathering human preference data

RLHF brings humans into the loop of LLM training, along with their subjectivity and differing values. Often, RLHF involves outsourcing the rating to a group of human reviewers. When doing so, it’s important to ensure diversity in the group of reviewers or risk producing biased evaluations.

The entire procedure of generating the training data for the reward function is potentially costly because of heavy human involvement. Companies may pay expensive fees but still face bottlenecks when scaling the RLHF process. Human annotators may work at varying efficiency, and there is no assurance that the outcome meets the desired objectivity and quality.
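One practical check on annotation quality is to measure how often two annotators agree beyond what chance would produce. The sketch below computes Cohen's kappa for two annotators who each picked the better of two responses, A or B, on the same eight prompts. The labels are made-up illustrative data and `cohens_kappa` is a hypothetical helper.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("both annotators must label the same non-empty set")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0
    return (observed - expected) / (1.0 - expected)

annotator_1 = ["A", "A", "B", "B", "A", "B", "A", "A"]
annotator_2 = ["A", "B", "B", "B", "A", "B", "A", "B"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 3))  # 0.529
```

A value near 1 means the annotators agree almost always, while a value near 0 means their agreement is no better than chance. Low scores can signal unclear guidelines or genuinely subjective prompts.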

The challenge of aligning models with complex human values

RLHF intends to align models with human preference, but it’s easier said than done. On paper, collecting feedback from humans and integrating it into the training loop appears to solve the problem. In reality, human raters make mistakes and are subject to their individual beliefs, biases, and environments.

For example, OpenAI spent a considerable sum to fine-tune ChatGPT’s foundational model with RLHF, but the chatbot still occasionally suffers from bias and hallucination. This underscores the need to enroll trained and qualified human raters, but doing so would further inflate the cost of RLHF. Even then, generating training data that fully aligns with values across all demographics is impossible.

The limitations of current RLHF systems

Existing RLHF implementations could be better, both in design and in principle. It’s important to note that humans don’t train the RL agent directly. Instead, the reward model serves as a proxy trained with human input. The two interact in ways that steer the RLHF system toward maximizing the reward, which is not always the same as the goal humans had in mind. An RLHF model may also exhibit incentivized deception, generating responses that humans are likely to approve of but that are not necessarily grounded in facts.

Furthering the potential of RLHF in improving the performance of LLMs

RLHF has taken LLMs to new heights but could do much more. This, of course, depends on the efforts to improve existing RLHF systems. At the time of writing, data scientists and researchers are studying ways to address known process limitations. For example, researchers from Princeton University demonstrated an algorithm that needs less human feedback to align the training policy.

Meanwhile, researchers from New York University attempted to address the inconsistencies among human raters in RLHF. They recommended an approach called Training Language Models with Language Feedback. Instead of training the language model with rated prompt-response pairs, annotators provide descriptive explanations of what the output should be. Then, this feedback is used to refine the model further.

Unexplored design options in RLHF

The onus now lies on AI companies and ML teams to advance existing RLHF capabilities. RLHF is still in its infancy, and there is room for improvement in how it is implemented. Proximal policy optimization (PPO) is the common policy gradient method used in the reinforcement learning process, but it struggles with training instability and inefficiency. Therefore, researchers are experimenting with different ways to optimize the algorithm.

For example, researchers from Shanghai University proposed a mixed distributed proximal policy optimization (MDPPO) algorithm, allowing multiple policies to train simultaneously. Meanwhile, OpenAI introduced PPO2, an enhanced version of its predecessor capable of running on GPUs. These are some examples that highlight the possibilities and efforts the ML community dedicates to building a better RLHF model.
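For readers unfamiliar with PPO, its core idea is a clipped objective that limits how far a single update can move the policy. The sketch below evaluates that objective for one sample. The function name and the default `clip_range` of 0.2 are assumptions for illustration, and real implementations average this quantity over batches of tokens.

```python
def ppo_clipped_objective(ratio, advantage, clip_range=0.2):
    """Clipped surrogate objective for a single sample.

    ratio:     new_policy_prob / old_policy_prob for the action taken
    advantage: how much better the action was than expected
    """
    clipped_ratio = max(1.0 - clip_range, min(ratio, 1.0 + clip_range))
    # Taking the minimum removes the incentive to push the ratio
    # outside the [1 - clip_range, 1 + clip_range] band.
    return min(ratio * advantage, clipped_ratio * advantage)

# A good action whose probability rose by 50%: the gain is capped at 1.2x.
print(ppo_clipped_objective(ratio=1.5, advantage=1.0))
# A bad action whose probability was halved: the objective stays at -0.8.
print(ppo_clipped_objective(ratio=0.5, advantage=-1.0))
```

The clipping is what keeps each update small, and tuning it is one of the levers researchers adjust when they try to make training more stable.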

Data Labeling, an essential part of RLHF

RLHF will continue to evolve in different directions, but it will keep relying on human feedback. Collecting human feedback and creating training data to train the reinforcement learning policy requires an organized data labeling workflow. Whether the model needs rated prompts, descriptive feedback, or specific guidelines to eradicate harmful behavior, AI companies still rely on human annotators to prepare the necessary datasets.

Rather than quantity, newer RLHF models will emphasize quality training data. In this respect, data labeling tools are essential to collect, clean, and annotate data efficiently. Companies are also seeking ways to process and prepare larger volumes of data as AI use cases grow in complexity.

Kili Technology is a data labeling platform with helpful features to meet modern RLHF training needs. ML teams use Kili Technology software to collaborate with labelers and reviewers to streamline the labeling of various textual sources. Kili Technology offers several features that help overcome the scalability and cost issues of managing the labeling workforce in RLHF.

- A customized interface allows the project leader to assign specific tasks and guidelines to labelers and reviewers.

- Issue-tracker allows labeling teams to identify and resolve issues promptly.

- Analytics for assessing labeling efficiency from relevant key metrics.

- Intuitive navigation enables labelers and reviewers to inspect important datasets efficiently.

- ML-assisted pre-labeling to improve NER and text classification task performance by up to 30%.

Conclusion

Reinforcement learning from human feedback (RLHF) has opened up a new frontier in machine learning, specifically downstream LLM applications. It helps LLMs to follow human instructions, behave more predictably, and provide a safer AI environment. We’ve shown examples of how RLHF impacts performance in various AI systems. Also, we’ve highlighted the limitations of existing RLHF models and avenues of improvement, including streamlining data labeling workflow.

If you're looking to enhance your LLM projects with high-quality RLHF training data, Kili Technology's expert team is here to help. Visit our LLM Building platform to learn how our solutions can streamline your data processes and boost efficiency. Contact us today to get started.

Resources

https://bdtechtalks.com/2023/01/16/what-is-rlhf/

https://huggingface.co/blog/rlhf

https://www.analyticsvidhya.com/blog/2023/05/reinforcement-learning-from-human-feedback/

https://appen.com/blog/the-5-steps-of-reinforcement-learning-with-human-feedback/

https://openai.com/research/learning-from-human-preferences

https://openai.com/research/instruction-following

https://seerbi.uk/reinforcement-learning-in-traditional-industries

https://www.linkedin.com/pulse/reinforcement-learning-from-human-feedback-rlhf-train-shankar-kanap/

https://scale.com/blog/chatgpt-vs-claude

https://huyenchip.com/2023/05/02/rlhf.html

https://www.lesswrong.com/posts/d6DvuCKH5bSoT62DB/compendium-of-problems-with-rlhf
