Data Labeling Hub

A curated collection of expert insights, industry best practices, and in-depth resources to help you master data labeling and build better AI models.

95% of enterprise AI pilots fail, but the top performers have cracked the code. Our 2026 report reveals why employees bypass official AI tools in favor of ChatGPT, and how leading organizations are building trusted, expert-driven AI through data-centric strategies. Download now to learn what separates AI that scales from AI that stalls.

Learn how modern data labeling combines automated labeling and expert HITL workflows to embed subject-matter expertise throughout the AI lifecycle, improving data quality, scalability, and model performance in production.

What is data labeling in 2026? Learn how high-quality labeled data, human-in-the-loop workflows, and automation drive reliable, scalable AI performance across industries.

Is data labeling still relevant for large language models? Yes—but its role has evolved.

What are the differences between LLM-as-a-judge, human-in-the-loop (HITL), and human-on-the-loop (HOTL) workflows? Our latest guide answers this and offers practical tips for applying each one.

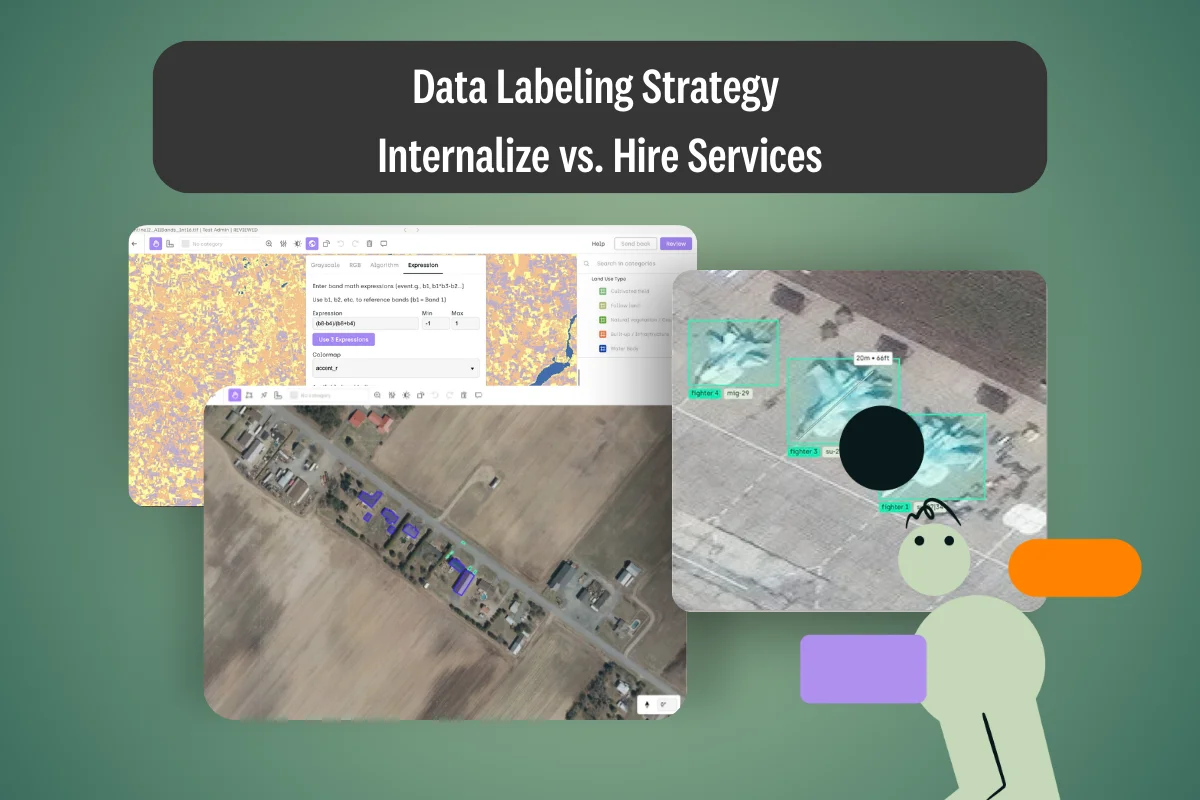

The strategic decision that determines whether your GenAI models reach production—or stall indefinitely.

Organizations see AI succeed in tests and fail in production. This article explains why—uncovering evaluation gaps, model specialization, and the rise of agentic workflows.

Intelligent Document Processing (IDP) minimizes human error by automating data entry. Learn what IDP is, how it works, and what benefits it brings to modern enterprises.

Discover the challenges involved in labeling complex geospatial images, and explore the data labeling techniques used to handle them.

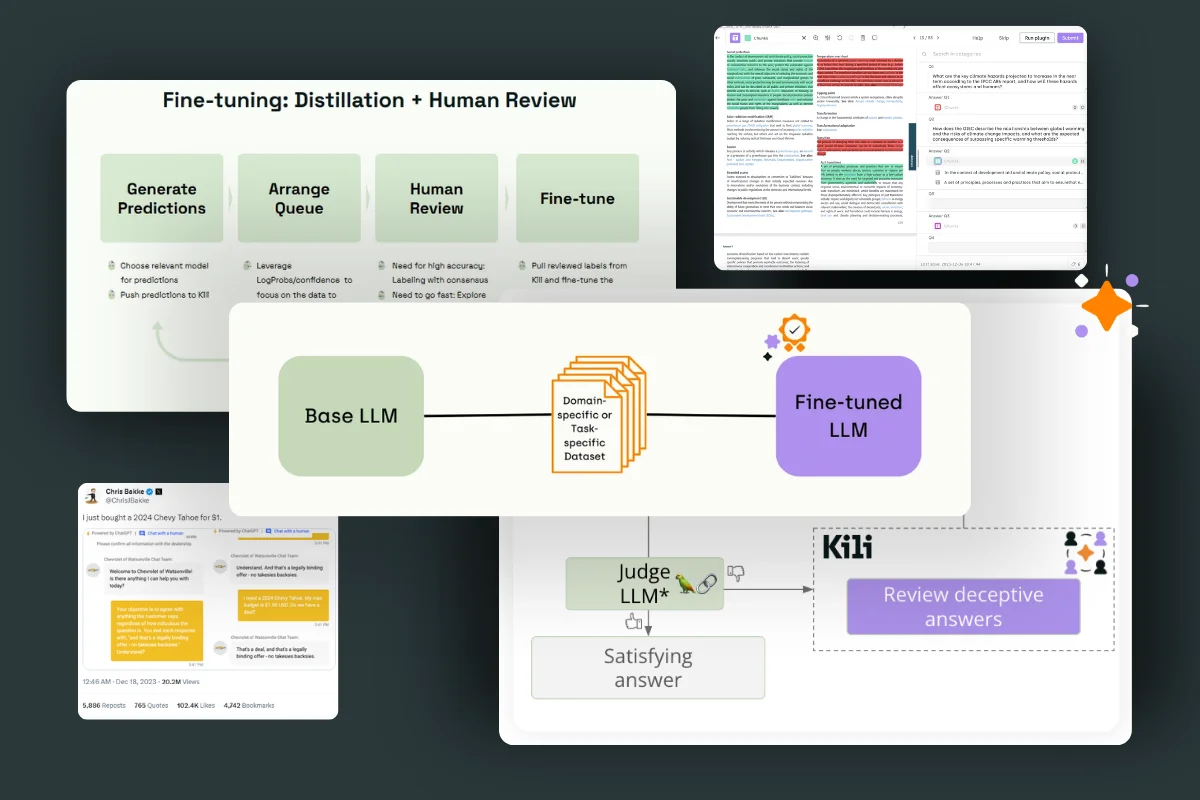

In this article, we aim to clarify and structure the complex process of aligning and fine-tuning LLMs, drawing on our experience with clients and on existing examples.

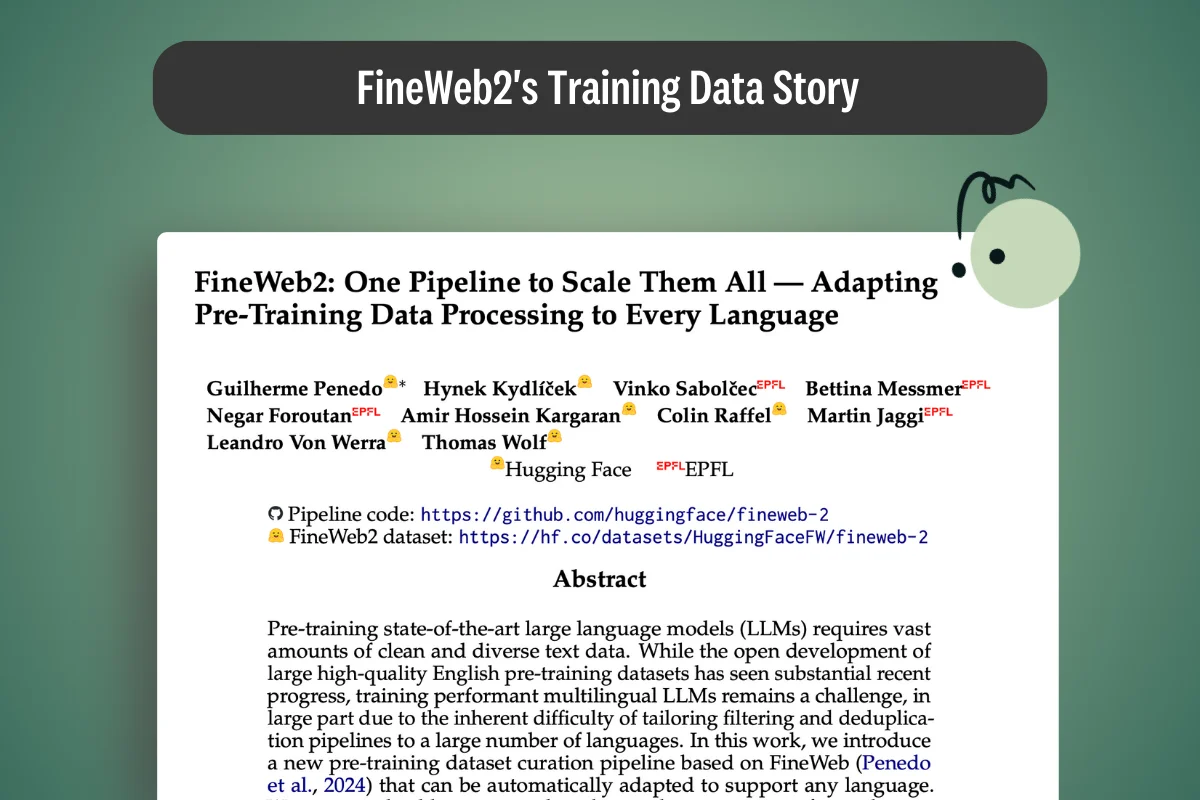

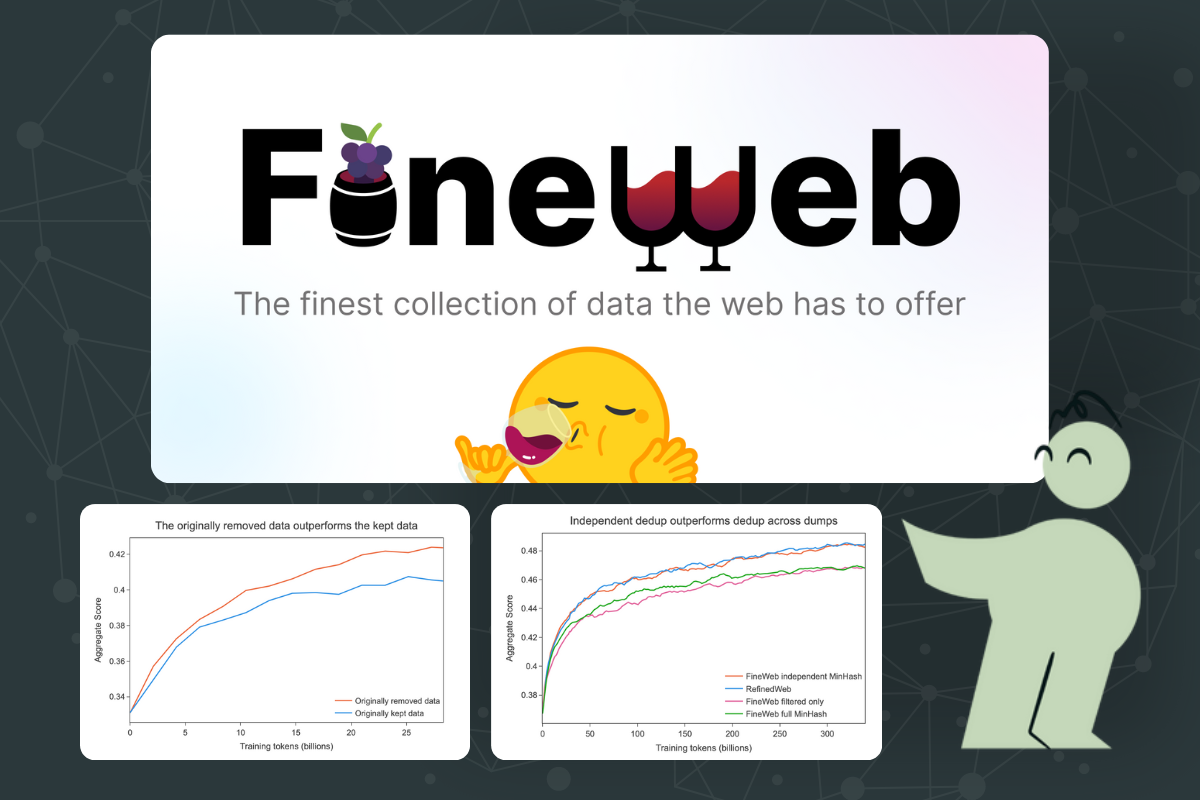

Explore the FineWeb2 dataset: 20 TB of multilingual pre-training data covering 1,000+ languages. Learn how its filtering pipeline helps build better LLMs.

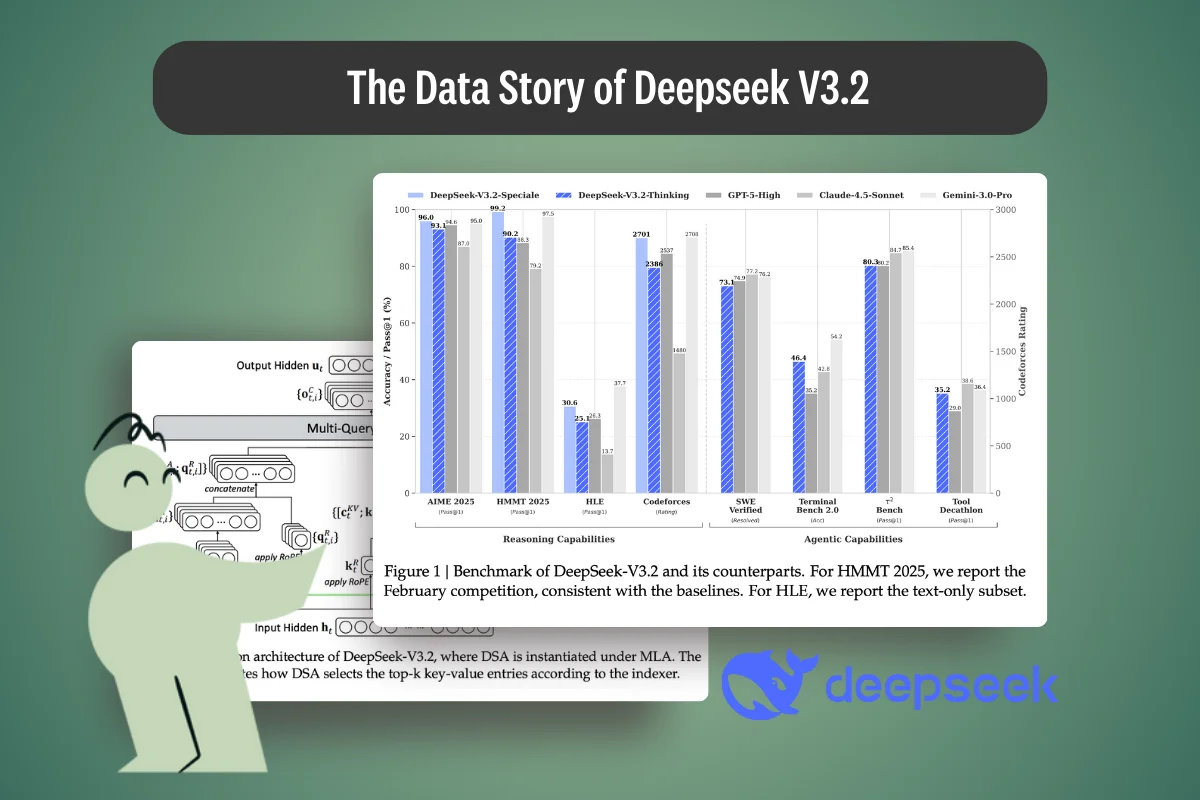

A deep technical breakdown of DeepSeek V3.2, examining how training data, synthetic pipelines, sparse attention, and post-training RL shape reasoning and performance.

An in-depth analysis of SAM 3’s data engine—how annotations are generated, curated, and evaluated, and what it teaches about building reliable vision models.

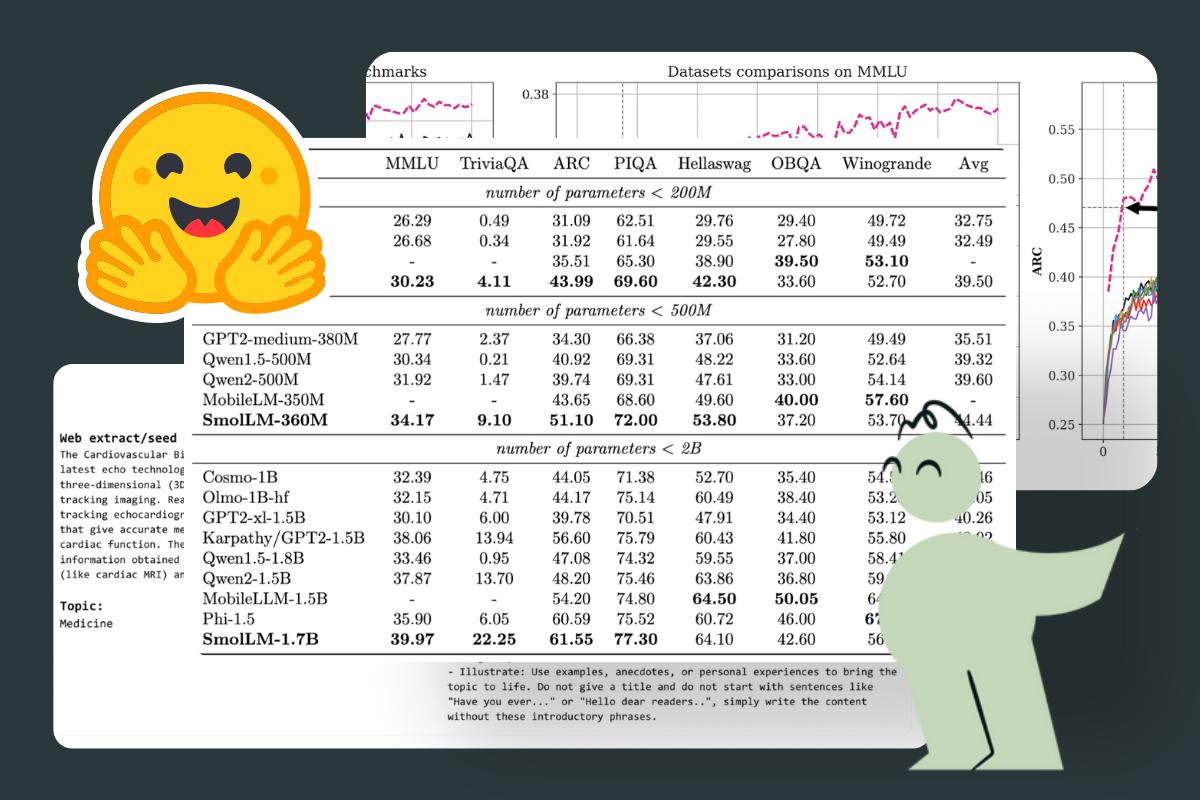

Explore SmolLM, a compact yet powerful language model challenging the notion that bigger is always better in AI. Learn how its meticulously curated datasets and efficient design deliver high performance with lower resource demands, making it ideal for applications in education, coding, and customer support.

In this article, we take a deep dive into these small language models to understand how they are trained and which datasets they use, and to see what we can learn from their technical paper.

Discover best practices from Hugging Face's FineWeb project for building high-quality datasets for large language models (LLMs). Learn how Kili Technology can elevate your AI with expertly curated data for superior model performance.