Artificial intelligence (AI), specifically its subfield of deep learning, is poised to reshape the insurance industry in the coming years. 65% of insurance companies plan to invest $10 million or more into AI technologies in the next three years. Whether they are expanding health insurance coverage or disbursing regular unemployment insurance benefits, insurers licensed in their respective regions can use AI to improve customer satisfaction.

However, insurance AI models will only be as effective as the datasets they are trained on. This underscores the importance of annotation best practices and tools to enable high-performing AI models. Throughout the years, insurance providers have used Kili to annotate datasets, enabling AI systems capable of automating risk assessment and claim processing, leading to better customer experience.

In this article, we’ll look at why high-quality datasets matter, the challenges insurers face when adopting AI, and ways to develop reliable AI systems.

Why Quality Datasets are Crucial in Insurance AI

Insurance companies use deep learning models to build AI systems that learn from massive datasets. However, the efficacy of these models is directly related to the quality of the datasets they are trained on. Poorly compiled datasets can yield biased or inaccurate results, affecting critical operations like underwriting and claims processing.

A dataset’s quality is characterized by its volume, diversity, presence of noise, and labeling accuracy. High-quality datasets are pivotal to training consistent, accurate, and reliable AI models in insurance use cases.

We share several proven benefits that high-quality datasets offer when training insurance AI systems.

Improving customer experience

Customers expect timely responses throughout their entire journey when interacting with insurers. Whether signing up for a new policy, submitting initial claims, or following up with a premium payment, consumers prefer insurance companies that consistently meet their expectations. In that respect, using high-quality datasets to train AI models helps.

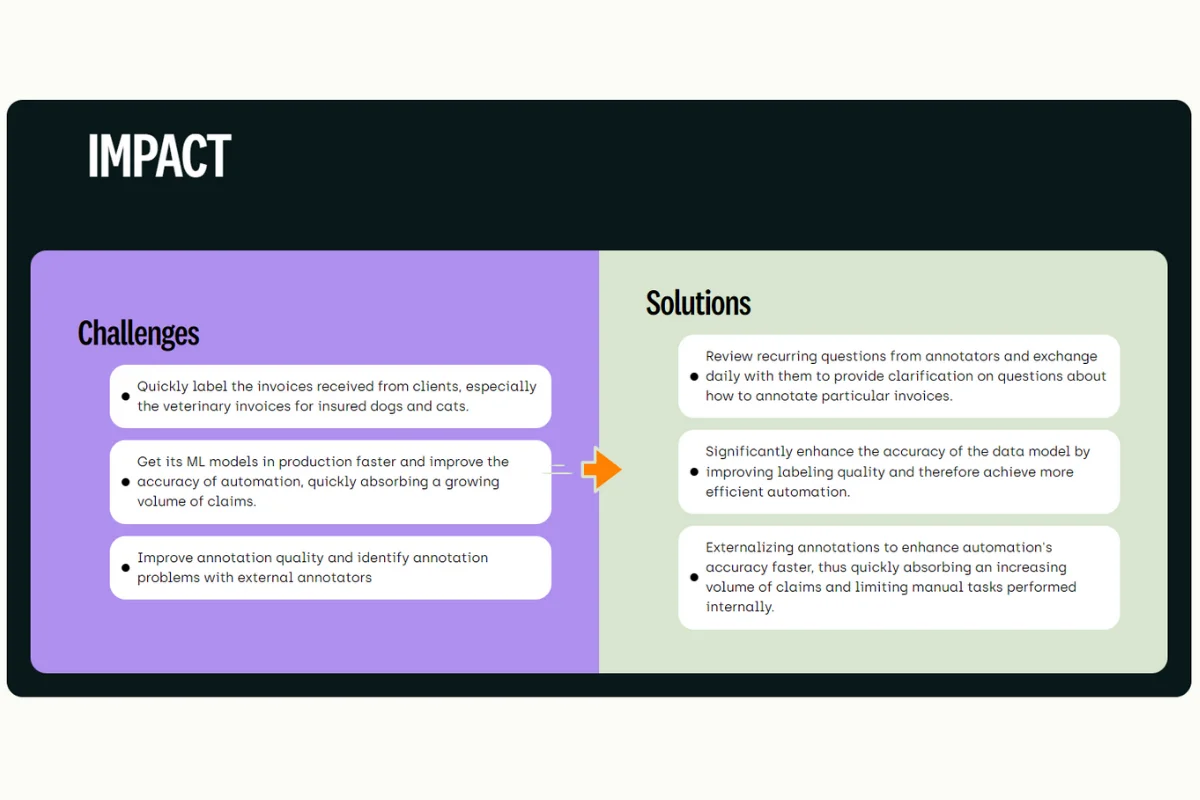

For example, Luko integrated an AI system into their claim processing workflow, hoping to accelerate the process. To their dismay, the AI system only achieved up to 40% accuracy when processing invoices insured consumers submitted. After switching to Kili for their labeling efforts, their model experienced an impressive improvement, charting up to 70% accuracy in various tasks.

Streamlining insurance operations

Insurance companies manage different workflows with varying complexity, including underwriting, risk assessment, claim processing, marketing, and more. AI systems can optimize these workflows by injecting efficiency, consistency, and accuracy. Using a clean and well-annotated insurance dataset is vital to unlocking AI’s potential in the above applications.

For example, a global insurance provider we worked with understood the benefits that AI offers. Initially, they trained the AI with publicly available datasets but couldn’t achieve the desired performance. Public datasets may contain monthly counts of billing rates, dental plans, health insurance coverage, and other aggregated information from various demographics. Yet, they were not tailored to our client’s requirements, resulting in subpar model performance.

Eventually, our client used Kili to rebuild their AI-automated claim processing system, producing clean labeled data with feedback from human experts. The insurer saw a remarkable improvement in claims processing time, cutting processes that usually took weeks down to 15 minutes.

Driving economic gains

As for-profit organizations, insurers strive to balance exceptional service delivery with healthy profit margins and revenue growth. This means reducing inefficiencies, human errors, and redundant processes that impact their financial bottom line. With high-quality datasets, insurers can train AI models to drive them towards positive economic outcomes.

For example, a global auto insurer faced challenges assessing car damage and responding to customer queries because it relied on manual processes. They used Kili to annotate high-quality datasets to train a computer vision model for analyzing damage from submitted photos. The effort resulted in up to 17% savings in unforeseen repair costs and reduced unnecessary follow-up calls by 32%.

Accelerating AI innovations

Attentiveness, expertise, and communication are crucial to producing high-quality datasets. On top of that, using a suitable data labeling platform accelerates AI development, enabling insurers to optimize and automate their workflow faster. For example, insurance companies use Kili to help labelers, reviewers, and insurance experts collaborate seamlessly when annotating datasets.

Navigating the Challenges in AI Adoption

Insurance providers are well aware of the benefits that deep learning AI offers. Yet, many remain hesitant to implement AI solutions because of unaddressed concerns.

Data quality and availability

Training, or fine-tuning, a deep learning model for insurance use cases requires access to vast and diverse datasets. Unfortunately, data in this domain is scarce, low-quality, and often incomplete. Despite their best efforts, insurers may struggle to curate the sufficiently large training samples they need.

A recent study suggested an alternative: using smaller but high-quality training samples. Researchers found that fine-tuning a pre-trained model with carefully curated datasets leads to remarkable results.

Lower data quality can double the amount of data a model needs. Inconsistent data labeling can cause a 10% reduction in label accuracy, which in turn results in a 2-5% decrease in overall model accuracy. To recover that performance, you would need to annotate twice as much data.

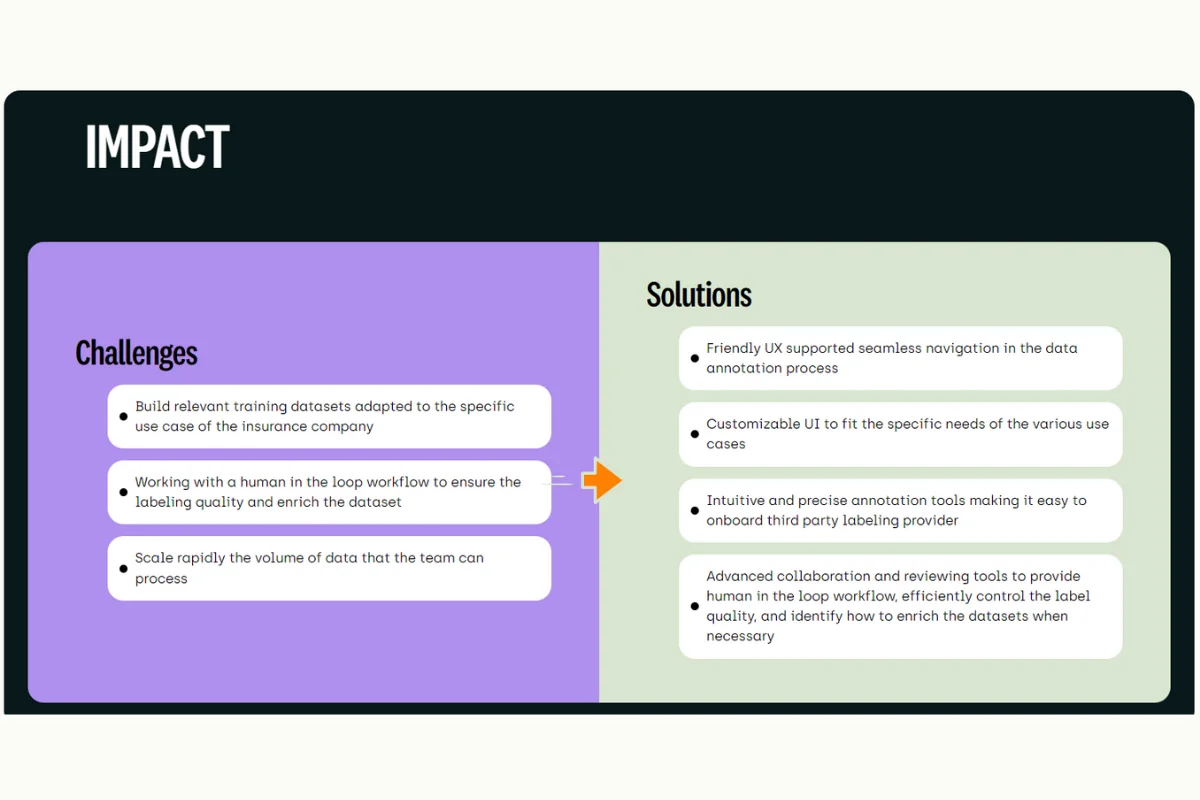

ML team collaboration

Data annotation is critical to preparing high-quality datasets. It involves tight collaboration amongst project managers, labelers, reviewers, domain experts, and machine learning engineers to ensure datasets are labeled according to business requirements. Given the task’s complexity, human errors and miscommunication might occur, resulting in delays, reworks, or subpar model performance.

ML teams must use platforms with flexible, collaborative interfaces to resolve these issues and enable an effective end-to-end annotation pipeline.

Document processing complexity

Developing insurance AI systems requires training deep learning models to detect, extract, and process textual and visual elements from documents. Human labelers would classify specific parts of curated documents with tags to train the AI models. Such practices, when undertaken manually, are tedious and prone to errors.

Therefore, insurers use a robust data labeling tool to simplify complex workflows and enable document annotation at scale.

Regulatory compliance

Deep learning models train and feed on massive datasets for risk assessment, policy underwriting, and other insurance-related tasks. Security negligence might subject the AI system to data breaches and non-compliance with regulations like HIPAA and GDPR. Ensure your data labeling tool complies with these standards to maintain consumer trust.

Build a Solid Quality-Focused Data Strategy

Deep learning algorithms hold tremendous potential to redefine the insurance space positively. The key lies in mitigating the challenges we describe. Here’s how:

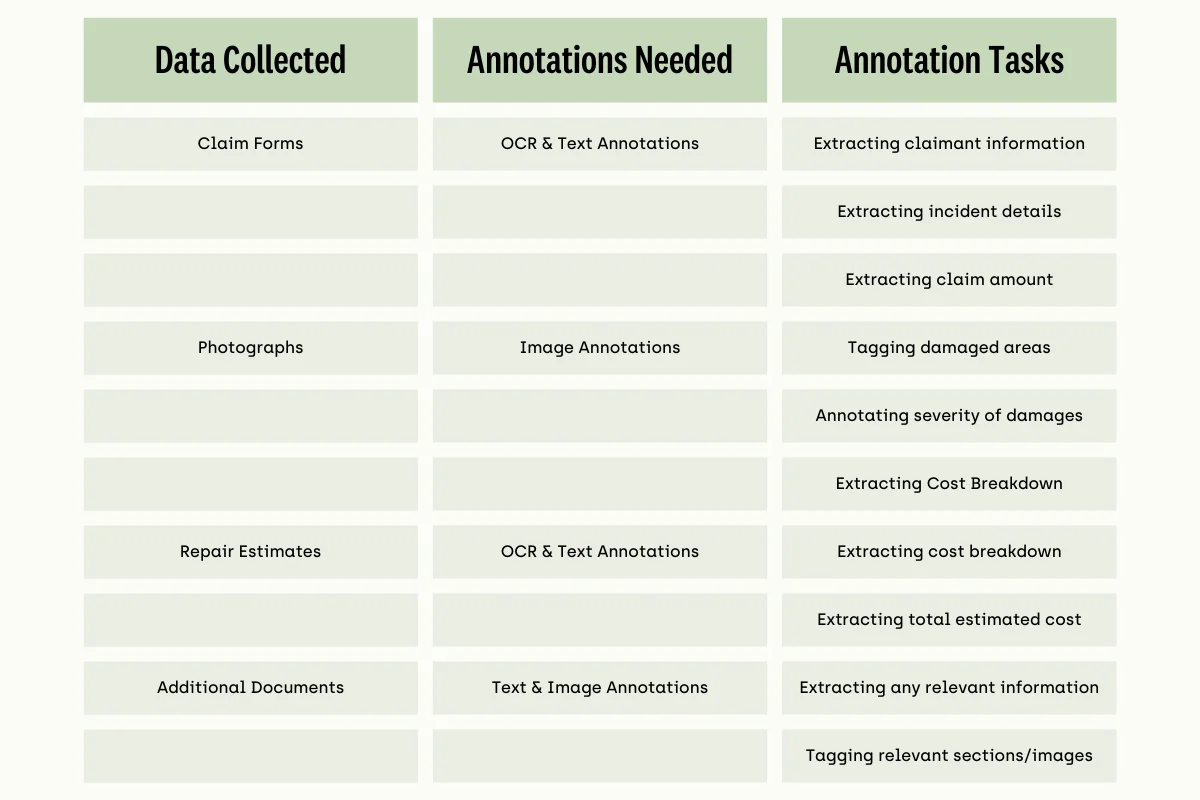

- Define the project clearly. Understand your objectives, as they set the foundation for data collection, annotation types, and resource allocation. For example, suppose you have to develop an AI model to automate claim processing. If that is the case, your requirements may look like this:

- Determine your stakeholders, collaborators, their roles, and their needs. This step ensures the developed AI solution aligns with all relevant parties' needs and constraints. Stakeholders may encompass a broad range of individuals and groups, such as policyholders, claims adjusters, underwriters, and insurers.

Collaborators could include data labelers, machine learning engineers, and data scientists. Understanding their roles and needs will help align the AI solution with the business goals, improve collaboration, and ensure the solution is functional and user-friendly.

- Focusing on data diversity is crucial to avoid bias and cover as many scenarios as possible. For instance, in an insurance claim processing model, diverse data can include claims from various regions, demographics, and types of insurance (e.g., auto, home, health). By ensuring a broad spectrum of data, the model can better understand different types of claims and make more accurate assessments. This can include data on different types of accidents, various damages, and claims from both urban and rural areas, among others, to ensure the model is well-rounded and robust against diverse real-world inputs.

Data augmentation techniques like image transformations can enrich the dataset, covering more damage types or incident conditions. Furthermore, synthetic data can be generated to represent scenarios that are underrepresented in the collected data, ensuring a balanced dataset, which, in turn, aids in training a more robust, unbiased AI model.
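To make the idea of image transformations concrete, here is a minimal sketch in plain Python. It assumes a grayscale photo stored as a nested list of pixel values; the function names (`flip_horizontal`, `rotate_90_clockwise`, `adjust_brightness`, `augment`) are hypothetical and chosen for illustration only. A real pipeline would more likely rely on an image library, and the right transformations depend on the damage types you need to cover.

```python
from typing import List

# Assumption: a grayscale image is a row-major grid of pixel values in 0-255.
Image = List[List[int]]


def flip_horizontal(image: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def rotate_90_clockwise(image: Image) -> Image:
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def adjust_brightness(image: Image, delta: int) -> Image:
    """Shift every pixel by delta, clamped to the 0-255 range."""
    return [[max(0, min(255, pixel + delta)) for pixel in row] for row in image]


def augment(image: Image) -> List[Image]:
    """Return the original image plus three transformed variants."""
    return [
        image,
        flip_horizontal(image),
        rotate_90_clockwise(image),
        adjust_brightness(image, 40),
    ]


# Hypothetical 2x2 "photo" used only to show the call pattern.
photo = [[10, 20], [30, 40]]
variants = augment(photo)
```

Each variant keeps the label of the original photo, so one annotated damage image yields several training examples. Transformations that would change the correct label should be left out.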

- Have strict and automated quality workflows. Scaling your data labeling project will grow challenging over time. Ensure you’re working with a tool incorporating automated quality workflows to help build your datasets quickly without compromising quality. For example:


- Having smooth and continuous feedback between data labelers and reviewers can ensure issues are handled immediately.

- Automated randomized review queueing can eliminate human bias when assigning assets to be reviewed.

- Use programmatic QA, where your tool can flag common issues immediately, saving time for your labelers and reviewers.
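As a rough illustration of randomized review queueing, the sketch below draws a random share of labeled assets for review so that no person decides which assets get checked. The function name `build_review_queue` and its parameters are hypothetical, and this is not a description of how any particular labeling tool implements the feature.

```python
import random
from typing import List, Optional


def build_review_queue(
    asset_ids: List[str],
    review_fraction: float,
    seed: Optional[int] = None,
) -> List[str]:
    """Pick a random subset of labeled assets to send to reviewers.

    review_fraction is the share of assets to review, between 0 and 1.
    seed is optional and only makes the draw reproducible for audits.
    """
    if not 0.0 <= review_fraction <= 1.0:
        raise ValueError("review_fraction must be between 0 and 1")
    rng = random.Random(seed)
    sample_size = round(len(asset_ids) * review_fraction)
    # sample() draws without replacement, so no asset is queued twice.
    return rng.sample(asset_ids, sample_size)


# Hypothetical claim identifiers, used only to show the call pattern.
labeled_assets = [f"claim-{i}" for i in range(10)]
review_queue = build_review_queue(labeled_assets, review_fraction=0.2, seed=7)
```

Because every asset has the same chance of being drawn, reviewers cannot favor or avoid particular labelers or claim types when choosing what to check.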

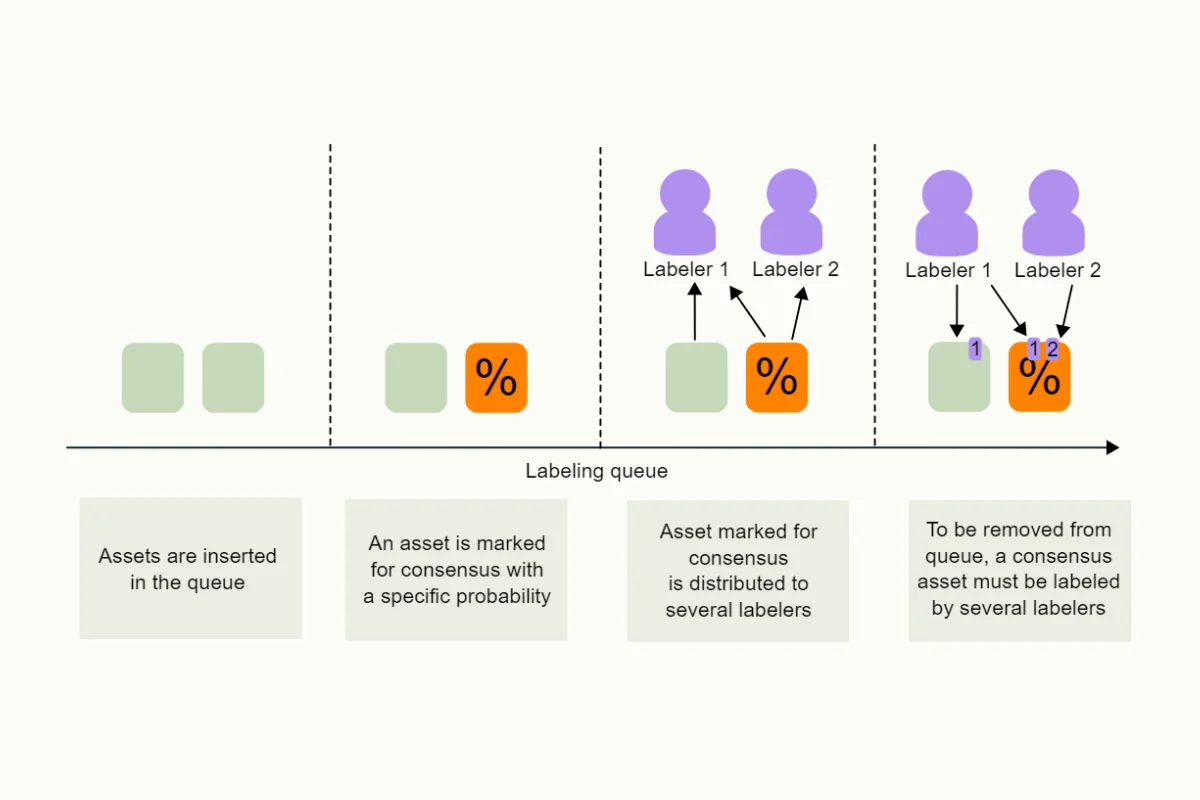

An overview of how Consensus works

Using quality metrics such as Consensus and Honeypot is vital to having more control over the quality of your labeled data. These metrics provide insights into annotator agreement and help identify potentially mislabeled data. By leveraging these metrics, project managers can better understand the quality and reliability of annotations, make informed decisions to improve the annotation process, and ultimately enhance the accuracy and performance of the machine learning models trained on this data.
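The sketch below shows one simple way such metrics could be computed. It makes two assumptions: consensus is measured as the share of annotators who agree with the most common label for an asset, and honeypot as the share of gold-standard assets an annotator labeled correctly. The function names `consensus_score` and `honeypot_score` are hypothetical, and real tools may use different formulas.

```python
from collections import Counter
from typing import Dict, List


def consensus_score(labels: List[str]) -> float:
    """Share of annotators who chose the most common label for one asset."""
    if not labels:
        raise ValueError("at least one label is required")
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def honeypot_score(
    annotator_labels: Dict[str, str],
    gold_labels: Dict[str, str],
) -> float:
    """Share of gold-standard assets that the annotator labeled correctly."""
    if not gold_labels:
        raise ValueError("at least one gold label is required")
    correct = sum(
        1
        for asset_id, gold in gold_labels.items()
        if annotator_labels.get(asset_id) == gold
    )
    return correct / len(gold_labels)


# Hypothetical labels, used only to show the call pattern.
agreement = consensus_score(["fraud", "fraud", "valid"])
accuracy = honeypot_score(
    annotator_labels={"claim-1": "fraud", "claim-2": "fraud"},
    gold_labels={"claim-1": "fraud", "claim-2": "valid"},
)
```

A low consensus score on an asset suggests the claim is ambiguous or the guidelines are unclear, while a low honeypot score points to an annotator who may need more training.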

- Iterate on your data quality again and again. In a typical insurance case study where an ML model is deployed to automate claims processing, the model's performance hinges on the quality of the training dataset. After annotation, continuous monitoring and analysis of model outputs are paramount as the model transitions from testing to production.

This embodies a data-centric approach to AI: the emphasis is placed on refining the data to enhance model accuracy in identifying valid claims rather than tweaking the algorithm. Over time, this iterative quality refinement aids in maintaining a high level of accuracy in claims processing, adjusting to new types of claims and evolving scenarios.

Consider a case study revolving around fraud detection in insurance claims using machine learning. In this scenario, it's essential to iterate at every project stage, akin to lean and agile methodologies. For example:

- Number of Assets to Annotate: Adjust based on emerging fraud tactics.

- Percentage of Consensus: Tweak to achieve better clarity on ambiguous claims.

- Dataset Composition: Revise to cover a broader spectrum of fraud cases.

- Guidelines to Workforce: Update to ensure sharper identification of fraudulent indicators.

- Dataset Enrichment: Incorporate new real-world parameters, such as economic downturns that might cause a spike in fraudulent claims. This ensures your ML model stays adept at identifying fraudulent activities amidst evolving real-world dynamics.

Learn More

Data-Centric AI is a system that focuses on data instead of code. It is the practice of systematically engineering the data used to build AI systems, combining the valuable elements of both code and data. Learn about DCAI's best practices through our eBook.

Should you outsource your data labeling project?

Building high-quality labeled datasets can be daunting, especially if your workforce lacks the capacity. A Google study states, "Paradoxically, data is the most under-valued and de-glamorised aspect of AI." However tempting it is to outsource a massive data labeling project, some potential risks need to be weighed first.

- Loss of Control: One of the main drawbacks of outsourcing is losing control over the data labeling process. The insurance company may not have the same level of oversight or influence on the quality and speed of work as it would with an in-house team.

- Security Risks: Outsourcing data labeling can pose security risks, especially when dealing with sensitive information. Insurance companies handle personal and confidential data that could be exposed to third-party vendors during labeling.

- Communication Barriers: There can be communication barriers or misunderstandings between the insurance company and the outsourcing service provider. This can lead to errors in data labeling, impacting the performance of machine-learning models.

Therefore, if you cannot label data in-house, choosing a data labeling service provider with experience handling sensitive data across industries is essential. Reviewing insurance case studies, along with the provider's security and compliance policies and practices, can help you decide which data labeling tool to go with.

Conclusion

Never compromise dataset quality when developing AI systems for insurance use cases. Customers deserve the responsiveness, integrity, and value-added protection that insurers promise and should uphold in their day-to-day service. As AI systems become more prominent in this strictly regulated industry, preparing clean, well-annotated, high-quality datasets is essential.

