High-quality labeled data is the bedrock of successful machine learning models. It enables them to learn underlying patterns relevant to the problem the model is designed to solve and generate accurate predictions.

In computer vision (CV), image annotation techniques enable AI practitioners to curate high-quality vision datasets. They are critical in determining prediction quality for several CV tasks, such as object recognition, image classification, semantic segmentation, etc. Together, these tasks enable some of the most advanced CV applications, like autonomous cars and medical imaging.

For instance, computer vision models can recognize anomalies in X-rays, MRI, or CT scans and quickly notify healthcare professionals to help them create an effective treatment and recovery plan for the patient. In self-driving cars, object detection models can recognize pedestrians, vehicles, traffic signs, etc., for efficient navigation.

CV models are also helpful in e-commerce, allowing online shoppers to query items and retrieve relevant products to streamline their online shopping journey. More sophisticated use cases include augmented and virtual reality (AR and VR) in e-commerce apps, which give customers a more immersive experience by letting them visualize products in different scenarios.

All such CV applications need high-quality data, which, in turn, requires a robust image annotation process that lets data scientists and computer vision experts train models more effectively. However, maintaining high data quality is challenging due to increasing data volumes and complexity.

One solution is to develop an efficient data curation workflow that ensures quality remains intact throughout the model development lifecycle. However, given the large scale of the data ecosystem, manual data curation is not feasible.

Researchers are actively developing techniques that can automate data curation workflows for CV tasks. For instance, Meta's DINOv2 is a collection of self-supervised visual foundation models for extracting global visual features. Its authors built an automated pipeline to curate a diverse image dataset, and the models trained on that dataset produce all-purpose visual features. The research underscores the importance of controlling data quality and diversity for efficient feature extraction.

Likewise, research shows that better data quality considerably improves, and builds trust in, deep learning models that perform safety-critical tasks, such as medical diagnosis, where a slight error can lead to severe consequences.

Importance of Data Quality in Image Annotation

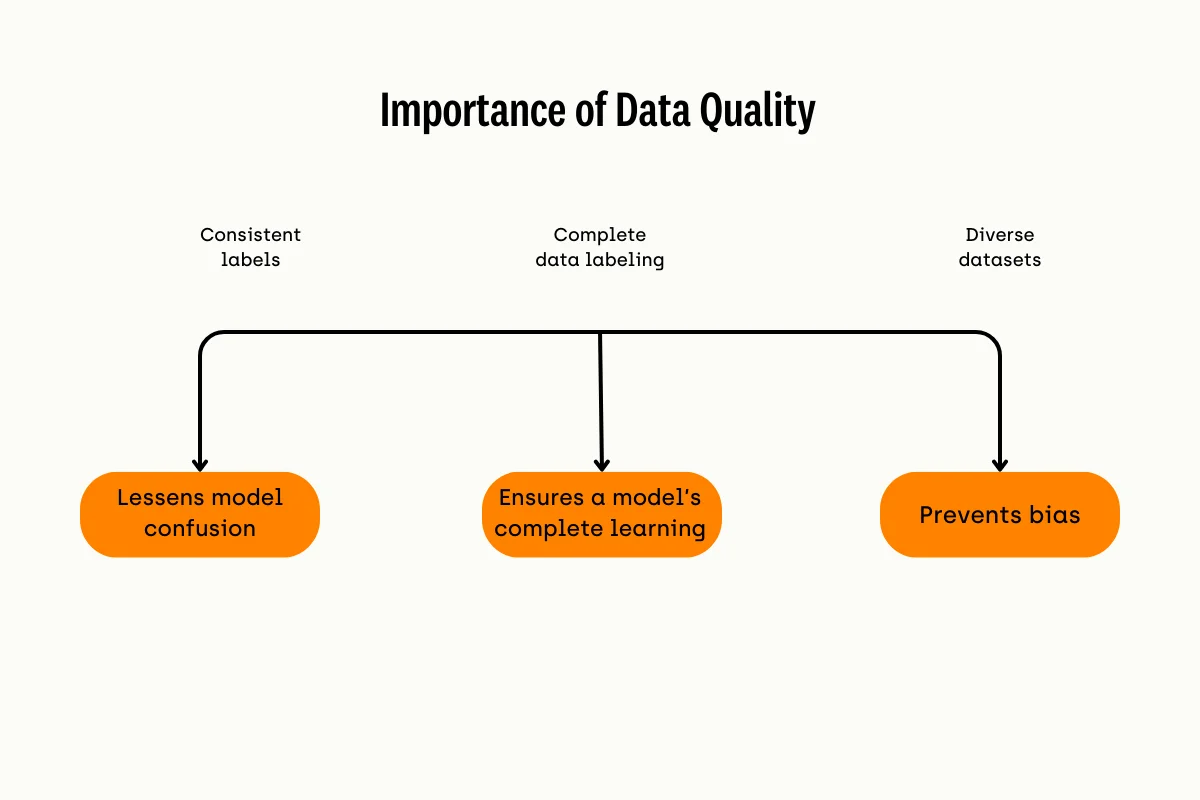

Image annotation is critical to data quality and requires experts to build consistent, complete, and diverse training data for effective model training. The following explains how these three factors contribute to data quality and make ML models more reliable.

Data quality factors

Consistency and Model Confusion

It's often challenging for experts to annotate images with the correct caption or description, as multiple ways exist to describe or identify an image.

For example, an annotator could reasonably label an image of a baby cat as "cat," "feline," or "kitten," since all three have similar interpretations. The trouble starts when different images receive different terms: when a model encounters inconsistent annotations, it struggles to generalize from the training data to unseen data. If an object is labeled as a "dog" in some images and a "canine" in others, the model may treat them as two classes, leading to confusion and decreased performance.

As such, it's crucial to have consistent labels across all images for an efficient annotation process. For example, instead of labeling some cat images as "cat" and others as "kitten," it's better to label all of them as either "cat" or "kitten." Inconsistent image annotations can cause misclassification and decrease a model's overall accuracy.
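One way to enforce a shared vocabulary is to normalize raw labels before training. The following is a minimal sketch rather than a prescribed method: the `CANONICAL_LABELS` mapping and the `normalize_label` helper are hypothetical names, and a real project would take the mapping from its own annotation guidelines.

```python
from collections import Counter

# Hypothetical synonym map (an assumption for illustration only).
# Each raw term points to the single class name the team agreed on.
CANONICAL_LABELS = {
    "cat": "cat",
    "kitten": "cat",
    "feline": "cat",
    "dog": "dog",
    "canine": "dog",
}


def normalize_label(raw_label):
    """Map a raw annotator label to its canonical class name."""
    key = raw_label.strip().lower()
    if key not in CANONICAL_LABELS:
        # Fail loudly so unknown terms get reviewed instead of becoming new classes.
        raise KeyError(f"Label {raw_label!r} is not in the agreed vocabulary")
    return CANONICAL_LABELS[key]


raw_labels = ["Cat", "kitten", "canine", "dog ", "feline"]
clean_labels = [normalize_label(label) for label in raw_labels]
class_counts = Counter(clean_labels)
print(class_counts)
```

Raising an error on unknown terms, instead of passing them through, is a design choice that surfaces vocabulary drift early; a team could equally route such labels to a review queue.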

Completeness and Incomplete Learning

Completeness in this context refers to the thoroughness of annotations. Missing labels or partially annotated images can result in incomplete learning, where the model fails to capture the full range of variability in the data.

Several image annotation tasks require annotators to label multiple objects or instances within a single image. In such cases, ensuring completeness is vital as partially labeled data can prevent models from learning the underlying features or context-level information, causing the model to perform poorly.

For instance, annotating images for training an object detection model for self-driving cars requires annotators to label all relevant objects, such as pedestrians, trees, vehicles, bicycles, etc., for accurate predictions.
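Part of a completeness check can be automated. The sketch below assumes annotations are stored as dictionaries with `image_id`, `label`, and `bbox` keys, which is a hypothetical layout chosen for illustration; it flags images with no annotations and annotations with empty fields.

```python
# Assumed record layout (hypothetical): one dict per annotated object.
REQUIRED_FIELDS = ("image_id", "label", "bbox")


def audit_completeness(image_ids, annotations):
    """Return (images without any annotation, annotations with empty fields)."""
    annotated_ids = {a.get("image_id") for a in annotations}
    unlabeled_images = sorted(set(image_ids) - annotated_ids)
    partial_annotations = [
        a for a in annotations
        if any(a.get(field) in (None, "", []) for field in REQUIRED_FIELDS)
    ]
    return unlabeled_images, partial_annotations


image_ids = ["img_001", "img_002", "img_003"]
annotations = [
    {"image_id": "img_001", "label": "pedestrian", "bbox": [10, 20, 50, 120]},
    {"image_id": "img_001", "label": "vehicle", "bbox": [200, 80, 380, 170]},
    {"image_id": "img_002", "label": "", "bbox": [5, 5, 40, 40]},  # label missing
]

unlabeled, partial = audit_completeness(image_ids, annotations)
print("Images with no annotations:", unlabeled)
print("Annotations with empty fields:", len(partial))
```

A check like this only catches structural gaps. It cannot tell that a pedestrian visible in an image was never labeled, so human review is still needed for that case.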

Bias and Diversity of Data

While consistency and completeness are essential for high-quality training datasets, they're only part of the story. One pressing issue that most AI models can face is biased predictions.

For example, a facial recognition model may misclassify people with darker skin tones more often than people with lighter skin tones. Such unfair results often stem from a lack of diverse image data.

Recent research in medical imaging is a case in point where AI models for diagnosing head and neck cancer performed poorly due to class imbalance and lack of data fairness.

Similarly, another study demonstrated how medical image computing (MIC) systems show a strong bias by underdiagnosing black patients due to a lack of representation of the target population and diversity in training data.
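A simple first check for representation gaps is to measure each group's share of the dataset. The sketch below is illustrative only: the group names, the `expected_groups` list, and the 10% threshold are all assumptions, and an appropriate threshold depends on the target population.

```python
from collections import Counter


def group_shares(group_labels, expected_groups):
    """Return each expected group's share of the samples (0.0 if absent)."""
    if not group_labels:
        raise ValueError("group_labels must not be empty")
    counts = Counter(group_labels)
    total = len(group_labels)
    return {group: counts[group] / total for group in expected_groups}


def underrepresented(shares, min_share):
    """List the groups whose share falls below min_share."""
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical metadata: one group tag per image.
sample_groups = ["group_a"] * 70 + ["group_b"] * 25 + ["group_c"] * 5
expected_groups = ["group_a", "group_b", "group_c", "group_d"]

shares = group_shares(sample_groups, expected_groups)
flagged = underrepresented(shares, min_share=0.10)
print(flagged)
```

Listing the expected groups explicitly means a group that is missing entirely shows up with a share of zero, rather than being silently skipped.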

Annotation Process

Let's discuss how a typical organization can begin the image annotation process by considering all the abovementioned factors.

The following lists a standard framework businesses can use to build an effective annotation plan.

Preliminary Steps

The first step is to determine the best tool for the job. It should offer top-notch data quality, consistency, and a user-friendly interface for seamless collaboration. It should also provide versatile labeling tools that cater to different data types, allowing for comprehensive data annotation. Scalability is also essential so that the platform can handle growing datasets effectively. Additionally, the tool should prioritize data security and provide a secure environment for sensitive information.

Next, organizations must develop a training plan suited to the tool to ensure annotators have the necessary skills to use the tool efficiently. Businesses can choose solutions that provide sufficient training materials and customer support.

Annotation Guidelines

Data science teams can develop detailed annotation guidelines with templates to ensure consistency and accuracy across data labels. For instance, a company can issue directives to maintain an image's aspect ratio when drawing bounding boxes and suggest the optimal labeling technique by use case.

The template should include clear visual examples of correct and incorrect annotations that annotators can refer to while labeling objects. In addition, the guidelines should mention common labeling pitfalls and mitigation strategies to prevent labeling blunders and help annotators deal with issues such as occlusion, blurred images, and hidden objects.

Quality Checks

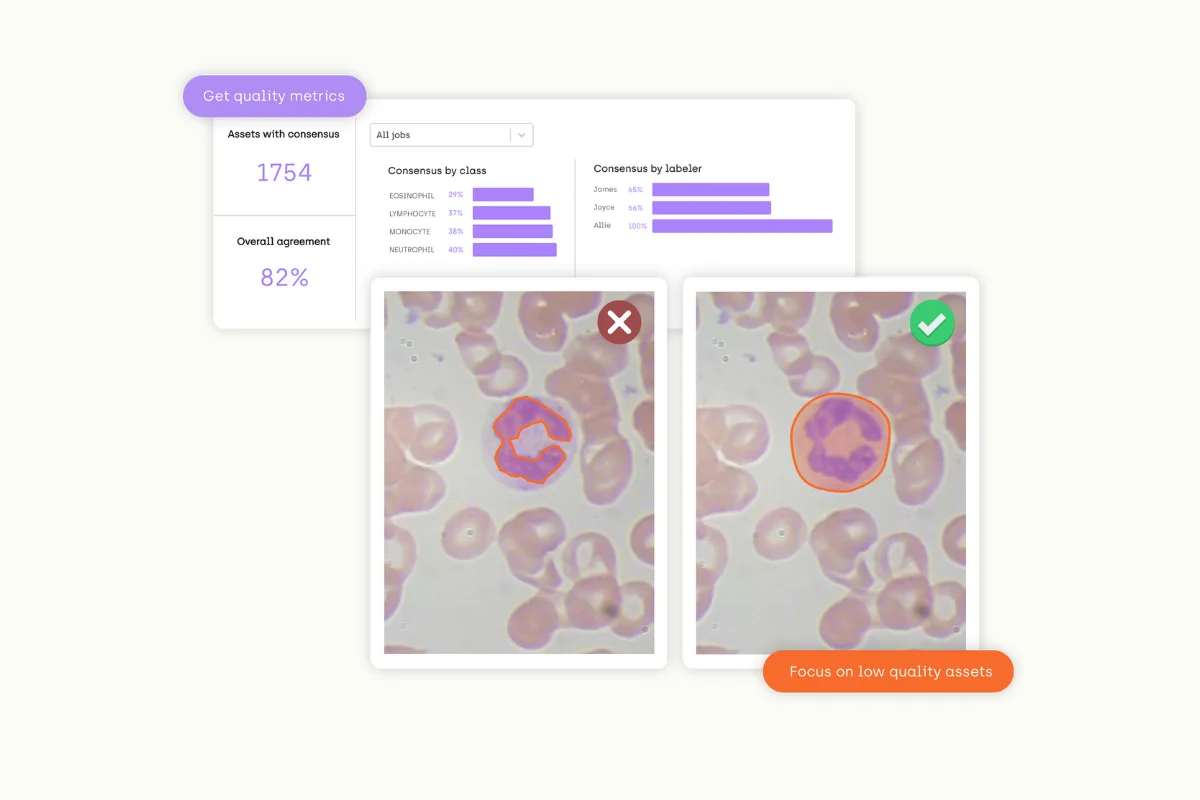

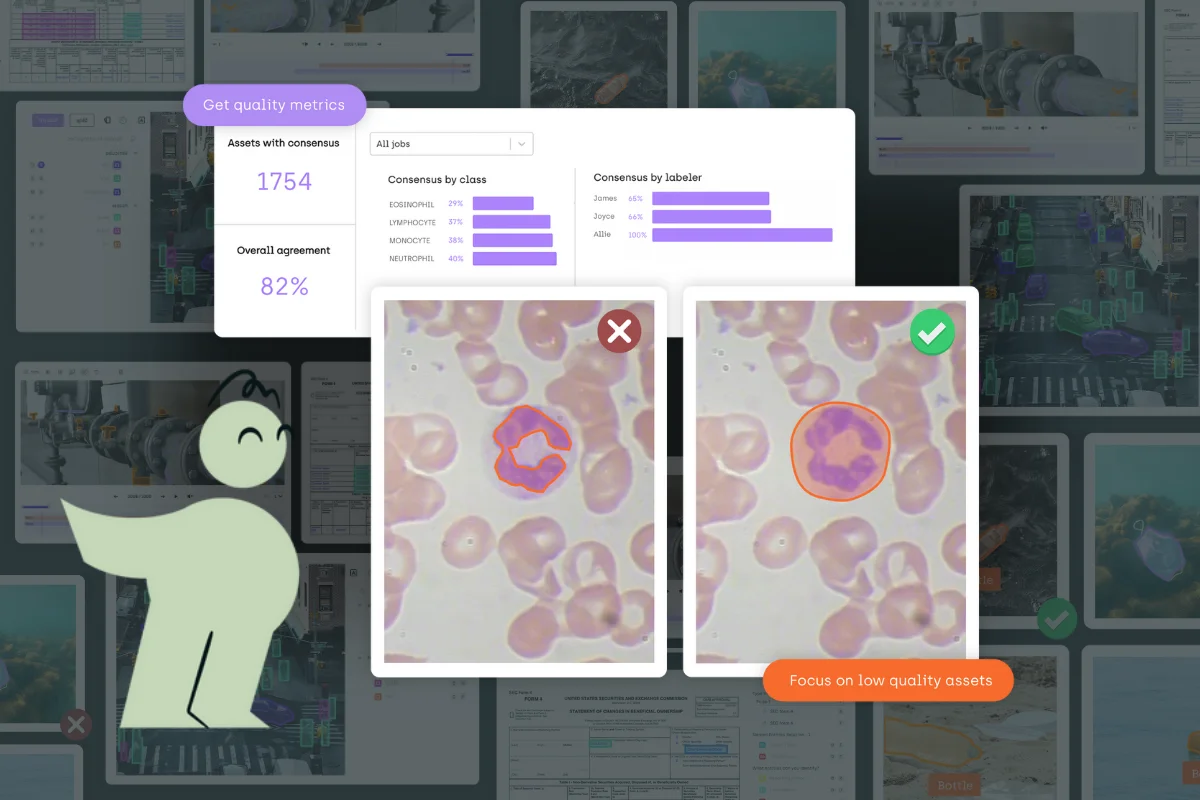

Businesses must include robust quality checks to ensure data labeling meets the expected quality standards. A practical method is to regularly select a small random subset of data points for review and check annotation consistency across samples.
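Drawing the review subset can be as simple as a seeded random sample. The following sketch uses Python's standard `random` module; the `sample_for_review` helper and the 5% review fraction are assumptions for illustration.

```python
import random


def sample_for_review(annotation_ids, review_fraction=0.05, seed=None):
    """Pick a random subset of annotation ids for manual review."""
    if not 0 < review_fraction <= 1:
        raise ValueError("review_fraction must be in (0, 1]")
    ids = list(annotation_ids)
    if not ids:
        return []
    # Always review at least one item, even for very small batches.
    sample_size = max(1, round(len(ids) * review_fraction))
    rng = random.Random(seed)  # a fixed seed makes the selection reproducible
    return rng.sample(ids, sample_size)


batch = [f"ann_{i:04d}" for i in range(200)]
review_batch = sample_for_review(batch, review_fraction=0.05, seed=42)
print(len(review_batch), "annotations selected for review")
```

Fixing the seed lets a second reviewer reproduce the same subset, while changing it for each review cycle avoids checking the same items every time.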

It's also a best practice to establish consensus-based labeling where annotators discuss the correct labels and descriptions for different images and agree on a standard labeling vocabulary with the help of domain experts.

Additionally, organizations can use a honeypot dataset - a small number of data samples with ground truth labels - to track annotation performance. Lastly, annotation tools with automated checks can help notify annotators of potential issues. For instance, a tool can detect a bounding box that doesn't align with a particular object's region.
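Both the honeypot check and the bounding-box alignment check can be expressed with intersection over union (IoU), a standard overlap measure for boxes. The sketch below assumes boxes are `(x_min, y_min, x_max, y_max)` tuples and uses a 0.5 IoU threshold; the data layout, the `honeypot_accuracy` helper, and the threshold are hypothetical choices, not part of any specific tool.

```python
def iou(box_a, box_b):
    """Intersection over union for boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def honeypot_accuracy(submitted, ground_truth, iou_threshold=0.5):
    """Fraction of honeypot items with a matching label and enough box overlap."""
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    passed = 0
    for item_id, truth in ground_truth.items():
        answer = submitted.get(item_id)
        if answer is None:
            continue  # a skipped honeypot item counts as a miss
        same_label = answer["label"] == truth["label"]
        if same_label and iou(answer["bbox"], truth["bbox"]) >= iou_threshold:
            passed += 1
    return passed / len(ground_truth)


ground_truth = {
    "hp_1": {"label": "car", "bbox": (0, 0, 10, 10)},
    "hp_2": {"label": "pedestrian", "bbox": (20, 20, 30, 40)},
}
submitted = {
    "hp_1": {"label": "car", "bbox": (1, 1, 10, 10)},           # close match
    "hp_2": {"label": "pedestrian", "bbox": (40, 40, 50, 60)},  # box misplaced
}
score = honeypot_accuracy(submitted, ground_truth)
print(f"Honeypot accuracy: {score:.2f}")
```

The same `iou` function could back an automated warning when a newly drawn box overlaps poorly with a model-suggested region.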

Feedback Loop

Clear communication channels with a feedback system are necessary to facilitate collaboration across annotators and domain experts. This mechanism encourages continuous improvement as experts provide valuable feedback to optimize the annotation workflow and allow annotators to present their concerns regarding annotation procedures and guidelines. Such channels can include online surveys, chat groups, discussion panels, etc., with a governance system for conflict resolution.

Metrics for Quality and Performance Assessment

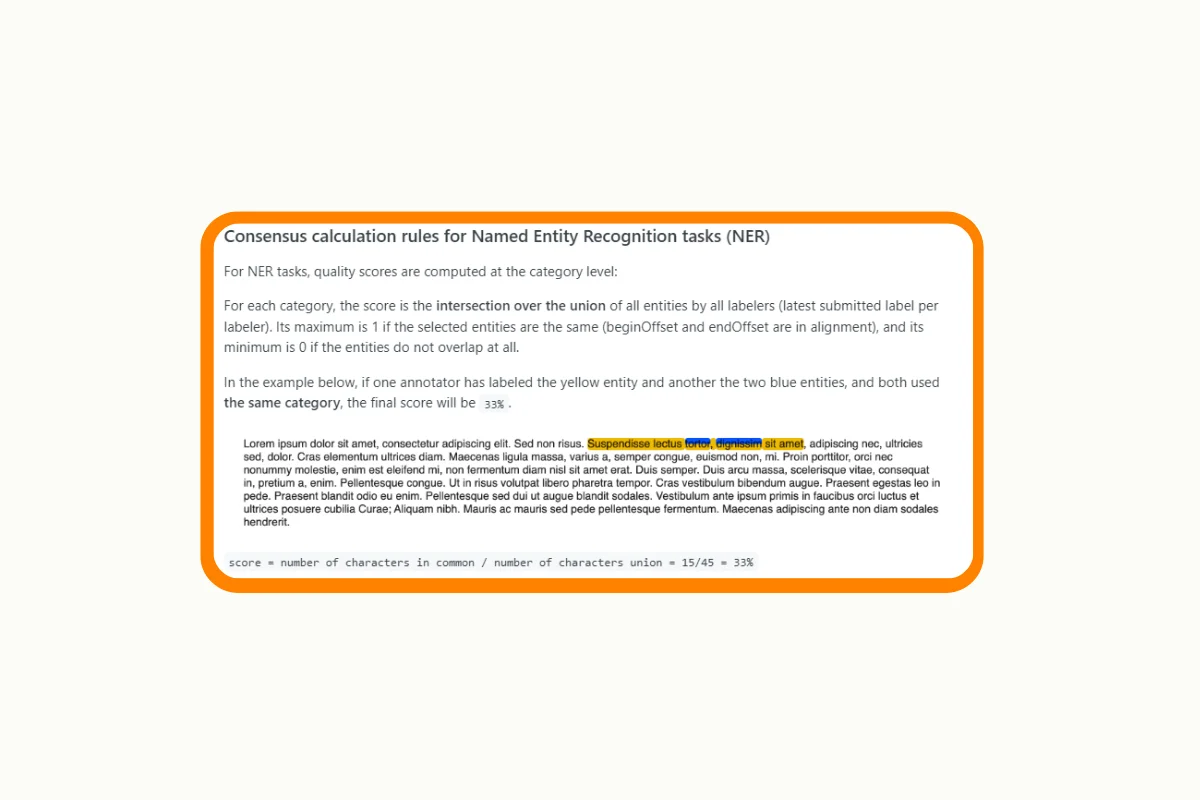

While quality checks and feedback channels help establish the desired quality standards, businesses must accompany them with reliable assessment metrics to see whether the system works according to expectations.

Metrics that compute inter-annotator agreement, such as Cohen's kappa for two annotators or Fleiss' kappa for larger groups, can help measure consensus among annotators and track consistency across data labels. Measuring annotation speed is also critical for meeting deadlines. Organizations can develop custom metrics such as completed annotations per day, average time per annotation, average completion rates, etc.
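As an illustration of an agreement metric, the sketch below computes Cohen's kappa for two annotators who labeled the same items, along with a simple speed metric. Kappa compares the observed agreement with the agreement expected by chance. The sample labels and durations are made up for the example.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty item list")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same class independently.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical class throughout
    return (observed - expected) / (1 - expected)


def average_seconds_per_annotation(durations_seconds):
    """Mean time spent per annotation."""
    return sum(durations_seconds) / len(durations_seconds)


annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "dog"]
annotator_b = ["cat", "cat", "dog", "cat", "cat", "dog", "dog", "dog"]
kappa = cohens_kappa(annotator_a, annotator_b)
avg_time = average_seconds_per_annotation([30, 45, 60, 45])
print(f"kappa={kappa:.2f}, average seconds per annotation={avg_time:.1f}")
```

In this example the annotators agree on 6 of 8 items (0.75) while chance agreement is 0.5, so kappa is noticeably lower than the raw agreement rate.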

Planning and Strategy

Optimizing data quality requires planning and strategizing for image annotation projects to ensure efficient results and a quick return on investment (ROI). Below is a comprehensive list of best practices organizations must follow to undertake complex computer vision projects and achieve stellar performance.

Define Objectives Clearly

Well-defined objectives aligning with an organization's overall strategy, mission, and vision can help convince the executive management to invest in image annotation tools and frameworks that streamline the annotation process.

Such objectives can include establishing annotation goals based on the type of computer vision model the company wishes to build. For instance, is the model for image classification, object detection, instance segmentation, or another task?

It also involves determining the criteria for annotating images. For instance, some CV tasks require a bounding box annotation, while others may need key point annotation, pose estimation, polygons, polylines, etc. As several types of image annotation exist, deciding the most suitable option early on can save significant costs.

Understand the End Goal and Stakeholder Involvement

Image annotation requires an understanding of the ultimate goals. In particular, businesses must consider how a specific annotation strategy will help the end users achieve their objectives.

Identifying the needs and expectations of the target audience can be the first step in establishing the end goal of any annotation workflow. Next, it's crucial to map out all the use cases for the computer vision model to develop a suitable annotation strategy. For instance, a company may require manual annotation from healthcare professionals if the task is to diagnose complex medical images.

Lastly, involving all the relevant stakeholders, such as data scientists, annotation experts, project managers, etc., is advisable to avoid future conflicts and encourage cross-team collaboration for more significant insights and clarification of requirements. It will also help businesses build and collect the right image dataset for training computer vision models.

Budget and Time Constraints in an Image Annotation Project

Image annotation can be long and tedious depending on the computer vision task a business wants to achieve. It requires a strong commitment from higher management to ensure sufficient funding is available to manage the entire project along the development lifecycle and provide insurance against unexpected incidents.

As such, organizations should consider the following guidelines for building an effective annotation strategy.

Assessing Project Scope

A comprehensive feasibility assessment is necessary to ensure companies have the appropriate infrastructure and resources to support their image annotation efforts.

The following is a list of factors they must assess before undertaking an annotation project.

- Tool and Team Capabilities: Since fully manual annotation of complex datasets is impractical, companies mostly use an annotation tool to automate data labeling. This means the company must find a suitable tool that supports the relevant image annotation techniques. It must also determine whether data teams have sufficient skills or require additional training to understand and review the tool's output and prevent data labeling errors.

- Data Volume: The company must estimate the amount of raw data it expects to process and whether the image annotation tool can support such volumes. Organizations must also consider upgrading their data stack to store and process large image data volumes and see if their budget allows for cloud-based storage platforms and image retrieval systems for better searchability.

- Complexity: Assessing the project's complexity is crucial for understanding resource requirements. Labeling images for complex CV tasks, such as image segmentation, can require more sophisticated solutions that support several labeling techniques for detecting objects and complex shapes.

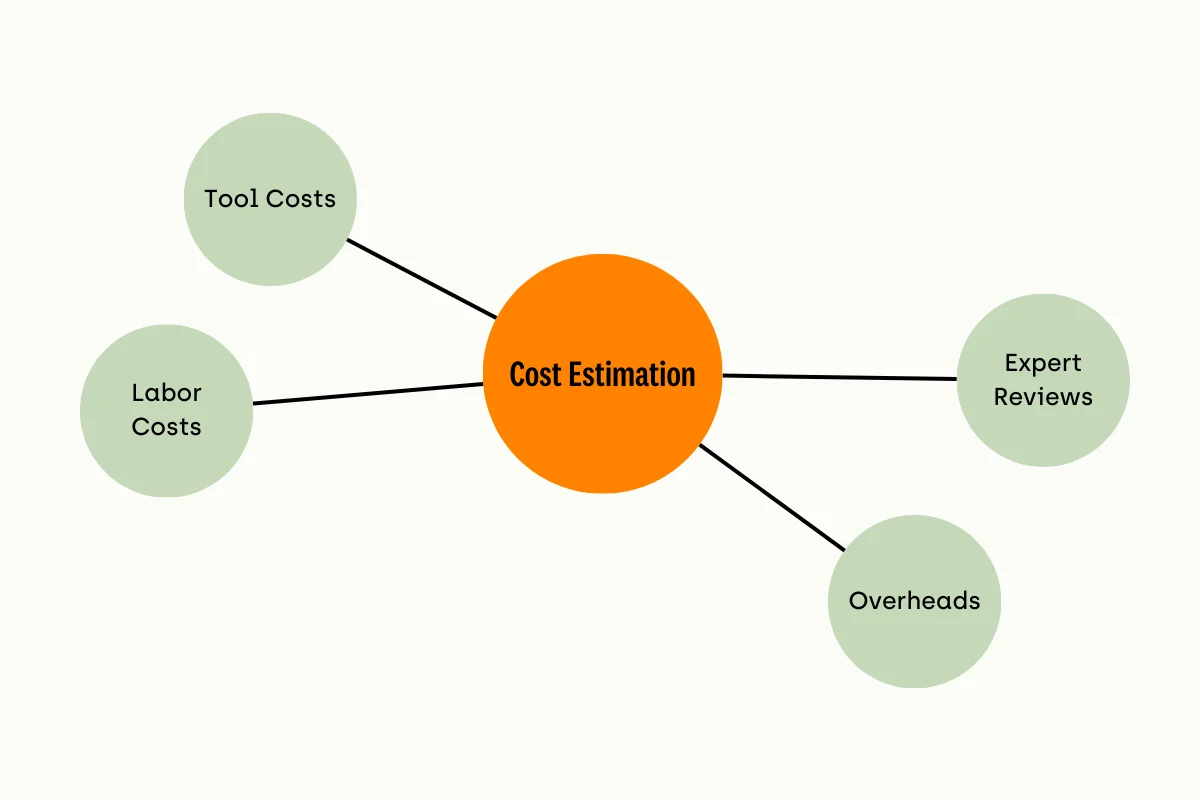

Cost Estimation

Cost estimation factors for an image annotation project

- Tool Costs: This includes the licensing or subscription fees for the computer vision annotation tool the organization plans to purchase, as well as installation and configuration costs. Alternatively, an organization can build a custom in-house tool if it has the required resources. Businesses must assess the costs and benefits of each option and decide accordingly.

- Labor Costs: Companies may require data labelers to enhance annotation speed and accuracy, especially if the tool cannot handle specific edge cases. Also, intelligent labeling methods such as active learning need human input for effective data labeling.

- Expert Review: Complex annotation tasks, such as medical imaging, require domain experts to review label quality. The cost of hiring such experts should be a factor in estimating annotation costs.

- Overheads: Image annotation work is long and tedious, and planning for every contingency is infeasible. Businesses must include a buffer for unexpected expenditures like infrastructure upgrades, cloud migration, specialized training, etc.
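The four cost factors above can be combined into a rough estimate. The following sketch is one possible way to do so, not a standard formula: the function name, its parameters, and every figure in the example are hypothetical placeholders to be replaced with real quotes and pilot measurements.

```python
def estimate_annotation_cost(
    num_images,
    tool_cost,
    labeler_hourly_rate,
    labeler_seconds_per_image,
    expert_hourly_rate,
    expert_review_fraction,
    expert_seconds_per_image,
    contingency_rate,
):
    """Return a cost breakdown: tool, labor, expert review, contingency, total."""
    labor_hours = num_images * labeler_seconds_per_image / 3600
    labor_cost = labor_hours * labeler_hourly_rate

    reviewed_images = num_images * expert_review_fraction
    expert_hours = reviewed_images * expert_seconds_per_image / 3600
    expert_cost = expert_hours * expert_hourly_rate

    subtotal = tool_cost + labor_cost + expert_cost
    contingency = subtotal * contingency_rate  # buffer for unexpected overheads
    return {
        "tool": tool_cost,
        "labor": labor_cost,
        "expert_review": expert_cost,
        "contingency": contingency,
        "total": subtotal + contingency,
    }


# All numbers below are invented for illustration.
cost = estimate_annotation_cost(
    num_images=10_000,
    tool_cost=2_000,
    labeler_hourly_rate=15,
    labeler_seconds_per_image=36,
    expert_hourly_rate=100,
    expert_review_fraction=0.10,
    expert_seconds_per_image=72,
    contingency_rate=0.10,
)
for item, amount in cost.items():
    print(f"{item}: {amount:,.2f}")
```

Keeping the contingency as a percentage of the subtotal makes the buffer scale with the project instead of being a fixed amount.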

Time Estimation

Estimating completion timelines is essential for optimal resource allocation. The following are a few best practices for assessing the project's duration.

Time estimation factors for an image annotation project

- Pilot Phase: Conducting a small-scale pilot project to judge the time required for the entire image annotation process to finish can help strategize workflows more effectively.

- Scaling: The data gathered from the pilot phase can help managers estimate the timelines more accurately for scaling up the project.

- Milestones: Project managers can break down the project requirements into smaller tasks to better manage deadlines and objectives.
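The pilot, scaling, and milestone steps can be sketched as a simple linear extrapolation. This assumes the pilot's pace holds at full scale, which is a simplification; the function names and all example figures are hypothetical.

```python
import math


def estimate_working_days(
    pilot_images, pilot_hours, total_images, num_annotators, productive_hours_per_day
):
    """Extrapolate the pilot's pace to the full dataset, rounded up to whole days."""
    total_hours = pilot_hours * total_images / pilot_images
    daily_capacity = num_annotators * productive_hours_per_day
    return math.ceil(total_hours / daily_capacity)


def milestone_targets(total_images, num_milestones):
    """Cumulative image counts to reach at each evenly spaced milestone."""
    return [
        round(total_images * step / num_milestones)
        for step in range(1, num_milestones + 1)
    ]


# Hypothetical pilot: 500 images took 10 annotator-hours in total.
days = estimate_working_days(
    pilot_images=500,
    pilot_hours=10,
    total_images=50_000,
    num_annotators=5,
    productive_hours_per_day=6,
)
targets = milestone_targets(50_000, num_milestones=4)
print(f"Estimated working days: {days}")
print(f"Milestone targets: {targets}")
```

In practice, throughput often changes as annotators gain experience or hit harder images, so the estimate is worth recomputing at each milestone.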

Quality vs. Quantity

Organizations must avoid establishing goals based on the number of annotated images a tool can produce within a specified timeline. Instead, they can consider the factors below to help balance the quality and quantity of image annotation.

- Tool Accuracy: A reliable image annotation tool provides several accuracy metrics that can help businesses assess the quality of annotated data. For speed, organizations must see whether the tool supports features such as automatic bounding boxes, image segmentation, object detection, etc., to reduce manual workload.

- Review Cycles: Regular reviews can help mitigate labeling errors and prevent issues later in the modeling lifecycle. Experts can utilize an annotation tool's built-in review features or a custom validation process to ensure the labeled data meets the required quality standards. Also, domain experts can help considerably during the review phase by detecting anomalies and providing valuable insights to speed up the annotation workflow. A formal feedback process must be in place to ensure labeling teams address all issues and optimize quality where applicable.

Resource Allocation

The entire purpose of planning is to provide project managers with relevant data for optimal resource allocation. Assigning team members the proper responsibilities and facilitating the workforce by providing the tools they need for labeling data is critical to the project's success. In particular, managers must address allocation issues in light of the following factors.

- Human Resources: Data labelers and reviewers are the two most significant groups in the annotation process. As such, businesses must determine how many annotators are needed to annotate the images on time and how many reviewers can efficiently validate all of the labeled image data. Assessing the annotation tool's automation features can help with these estimations.

- Training: Project managers must allocate sufficient time for training the annotation team so they understand the different types of image annotation the tool supports and learn to use its features effectively for domain-specific tasks. The training process must also inform them of the required quality standards and project timelines to ensure alignment with the company's objectives.
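Headcount for both groups can be approximated from the workload and the deadline. The sketch below is a rough planning aid under stated assumptions: the `required_headcount` helper, the `auto_label_fraction` parameter (the share of images the tool labels without manual work), and all example figures are hypothetical.

```python
import math


def required_headcount(
    num_images,
    seconds_per_image,
    working_days,
    productive_hours_per_day,
    auto_label_fraction=0.0,
):
    """People needed to process the manual share of images before the deadline."""
    manual_images = num_images * (1 - auto_label_fraction)
    total_hours = manual_images * seconds_per_image / 3600
    hours_per_person = working_days * productive_hours_per_day
    return math.ceil(total_hours / hours_per_person)


# Annotators: 50,000 images, 30% assumed to be pre-labeled by the tool.
annotators = required_headcount(
    num_images=50_000,
    seconds_per_image=72,
    working_days=40,
    productive_hours_per_day=6,
    auto_label_fraction=0.30,
)

# Reviewers: assumed to spot-check 10,000 images at 18 seconds each.
reviewers = required_headcount(
    num_images=10_000,
    seconds_per_image=18,
    working_days=40,
    productive_hours_per_day=6,
)
print(f"Annotators needed: {annotators}, reviewers needed: {reviewers}")
```

The estimate excludes training time, so the time set aside for onboarding should be subtracted from `working_days` before running it.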

Monitoring and Adjustments

The last component of an effective budgeting strategy involves developing a monitoring framework to track progress and resolve issues proactively along the project's lifecycle. Below are the factors that every monitoring system must have.

- Key Performance Indicators (KPIs): Managers must establish realistic KPIs for measuring quality and annotation team performance. They must also set standards for annotation tools to see whether their investments are paying off.

- Audits: Regular audits should be a part of the system to identify issues early on and implement relevant fixes before things go off track.

- Adjustments: The data gathered from audits and KPIs must inform future strategies and adjustments to avoid costly decisions.
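A lightweight way to connect KPIs, audits, and adjustments is to compare measured values against targets and list the breaches. The KPI names and target values in this sketch are hypothetical examples, not recommended thresholds.

```python
# Hypothetical targets: ("min", x) means the value should be at least x,
# ("max", x) means the value should be at most x.
KPI_TARGETS = {
    "honeypot_accuracy": ("min", 0.95),
    "avg_seconds_per_annotation": ("max", 60.0),
    "inter_annotator_kappa": ("min", 0.80),
}


def kpi_breaches(measured, targets):
    """Return (kpi_name, reason) pairs for KPIs that are missing or off target."""
    breaches = []
    for name, (direction, target) in targets.items():
        value = measured.get(name)
        if value is None:
            breaches.append((name, "missing"))
        elif direction == "min" and value < target:
            breaches.append((name, "below target"))
        elif direction == "max" and value > target:
            breaches.append((name, "above target"))
    return breaches


# Example audit results for one review cycle (made-up values).
measured = {"honeypot_accuracy": 0.91, "avg_seconds_per_annotation": 48.0}
breaches = kpi_breaches(measured, KPI_TARGETS)
for name, reason in breaches:
    print(f"{name}: {reason}")
```

Treating a missing measurement as a breach keeps a KPI from dropping out of the audit unnoticed.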

Conclusion

Image annotation in machine learning can be daunting at first. However, with correct planning and strategy, businesses can optimize their annotation workflow to ensure a quick return on investment.

An effective strategy establishes annotation objectives and end goals, addresses budgetary constraints, and identifies the relevant annotation techniques for the project.

Companies can use the above-mentioned annotation framework to streamline their data labeling efforts and allow continuous improvement along the annotation lifecycle.
