For the purpose of this blog, we will assume that the structure of the labeling task (ontology) has already been constructed and validated. This step, strongly dependent on the AI use case goal, is critical in the process and has to be treated beforehand (see Kili blog article for insights on classification topics if required).

In this guide, we will provide:

- Macro tips and potential pitfalls to avoid when building this labeling guide.

- Example of a detailed plan for such a guide.

- Some specificities to consider depending on the type of AI Use Case you are dealing with (computer vision or NLP).

Pro-tips - DOs & DON’Ts

Data Labeling Guidelines: DOs

#1 - Provide a reason behind why labeling is needed and tie it to business value

Labelers annotate better when they feel they have a stake in the outcome and a reason that labeling should be done. This is especially important when leveraging internal teams whose primary role is outside of labeling data but possess specific knowledge about the task at hand (SMEs). For SMEs, it is not the goal of your overall project to replace their jobs but rather make them more effective in their jobs and make their lives easier by reducing tedious work with AI/ML.

Example

“The data annotation is a critical exercise while solving any kind of automation problem. It is the correctly annotated data that enables any kind of machine learning to detect generalizations within the data. Data annotation is often done by teams of people, and it is a fundamental part of any machine learning project and ensures it functions accurately. It provides that initial setup for teaching a program what it needs to learn and how to distinguish meticulously between various inputs to come up with accurate outputs.

This guide explains the individual elements of annotated documents and the special cases that should be considered while annotating the purchase order documents. It lists the stages of annotating purchase orders which start from anonymization of the documents and end with annotating various types of fields in the document.”

#2 - Break jobs up into simpler tasks if possible

Images

Need to identify where objects are and measure their heights? Split it up into a bounding box job, then pass the cropped image to a line-object detection job in a different project (easy using Kili API). You could even use different labeling teams for each so that they can specialize.

Text

Need to classify sentiment in an employee satisfaction survey and understand what text leads to that classification? Break the jobs up into overall sentiment and then name entity recognition jobs.

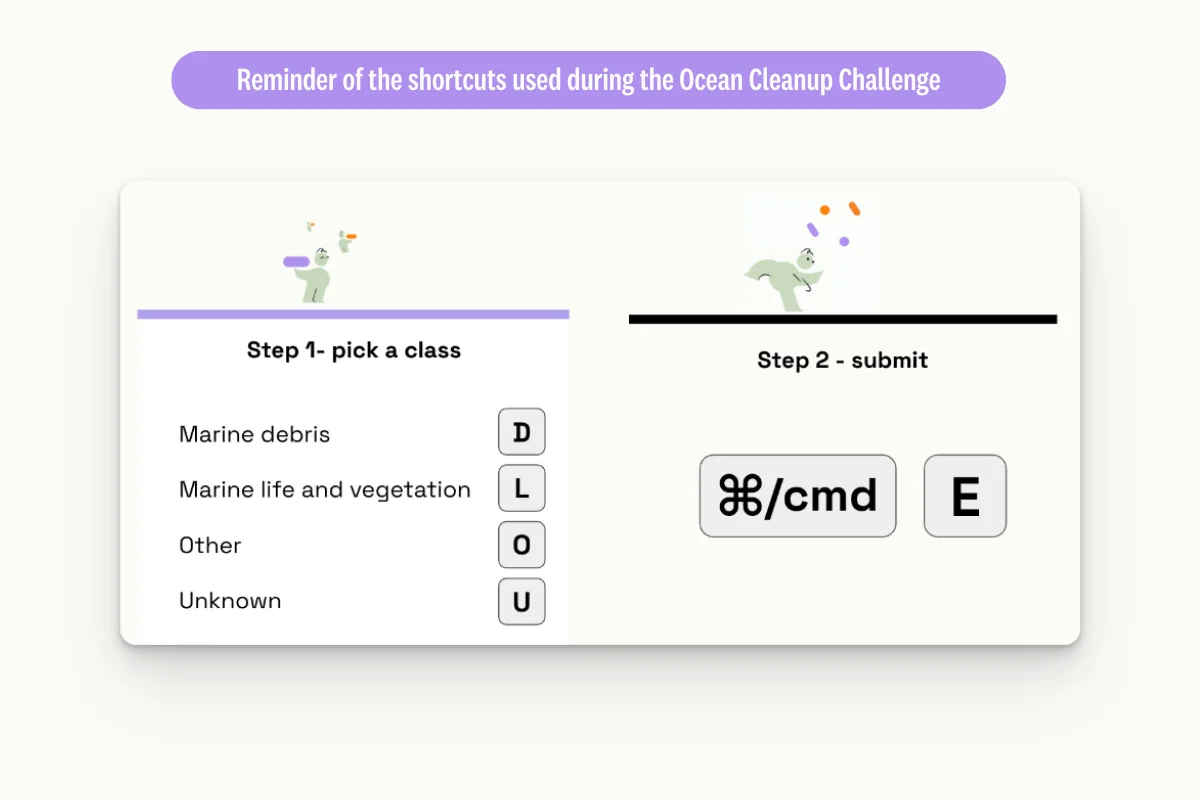

#3 - Provide your best tips & tricks in the guidelines

Labeling expertise acquired with experience on a very specific topic or tool relies on many small details that may seem obvious after some labeling time. These tiny details must be detailed on the guideline so as to ensure their transmission to the team.

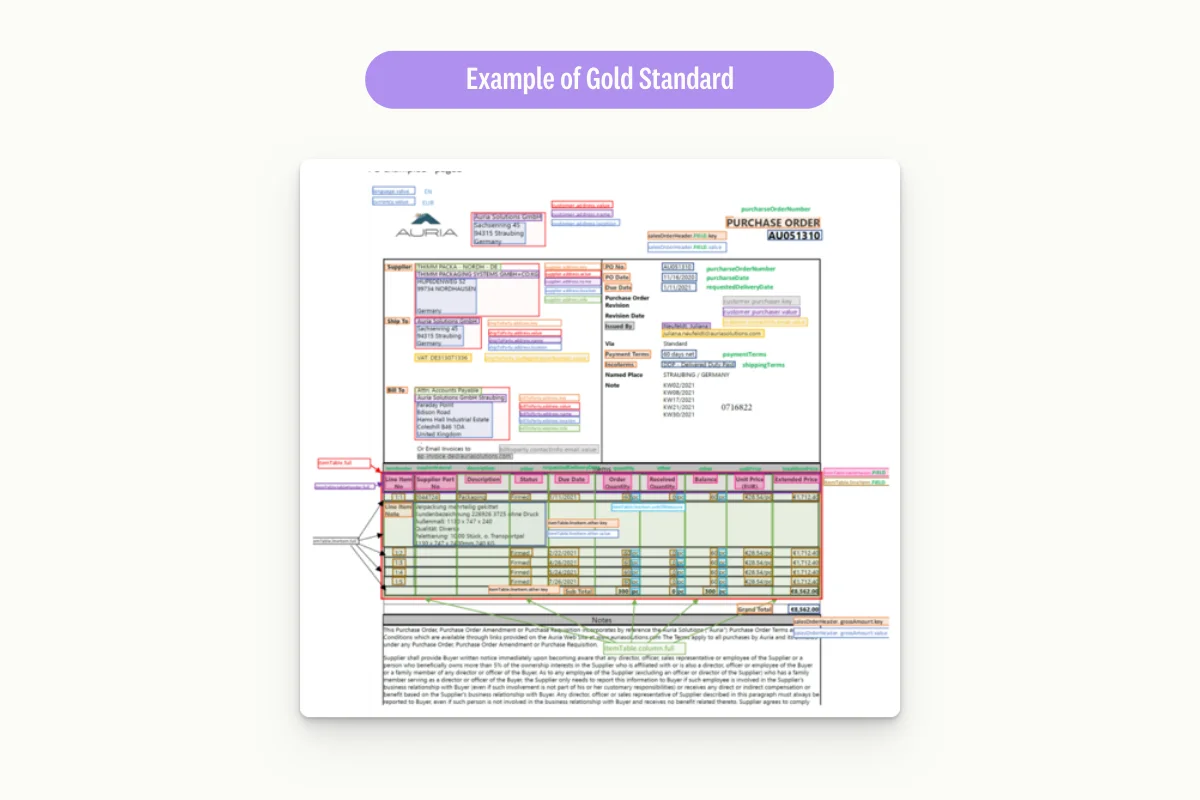

#4 - Give examples of the “gold standard”

This example(s) enables to project the labeler in the finally completed job, which is particularly relevant if you are achieving a complex job (various classes, various object types, etc.).

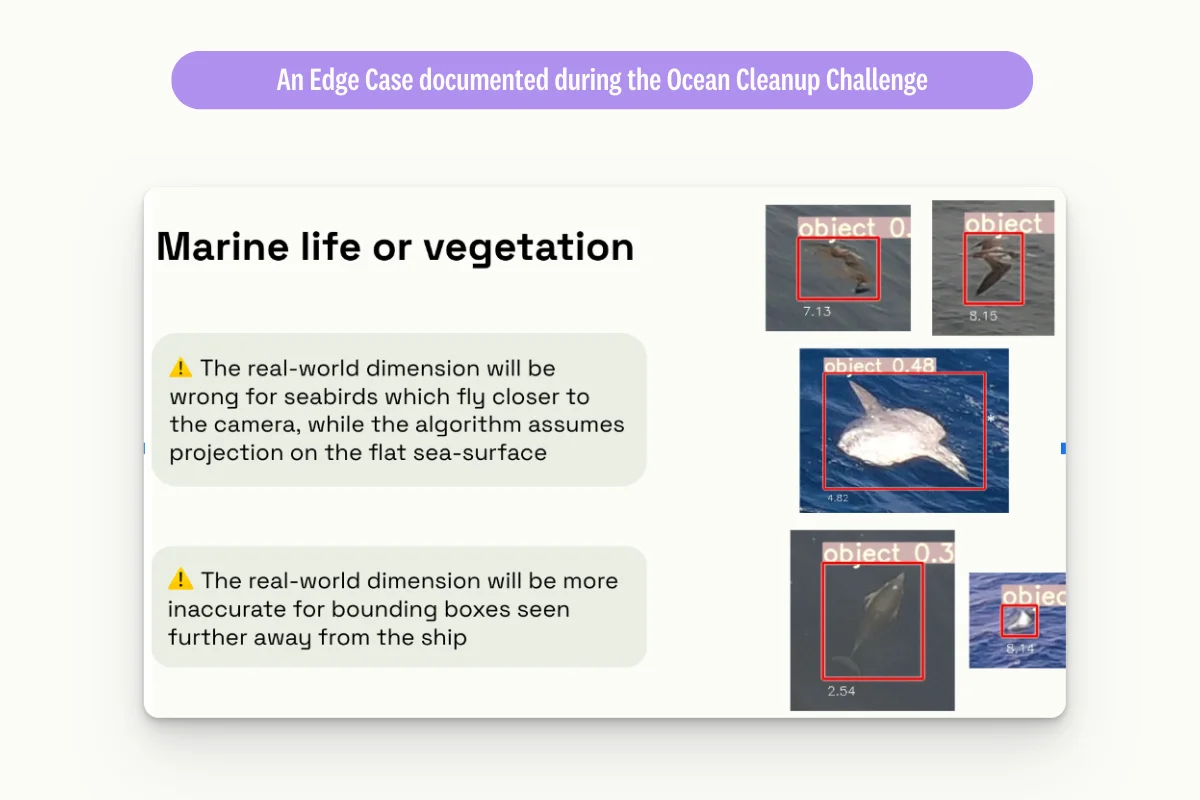

#5 - Provide examples of known tricky edge cases and/or common errors

This helps show labelers when to ask for help or reduces the first-pass error rate. These examples can be displayed in the class-specific rules section of the annotation guide.

#6 - Provide instructions for each class

Not providing instructions for a “basic” class sets up labelers for failure. Something obvious to the guide writer or ML engineer may not be obvious to a labeler.

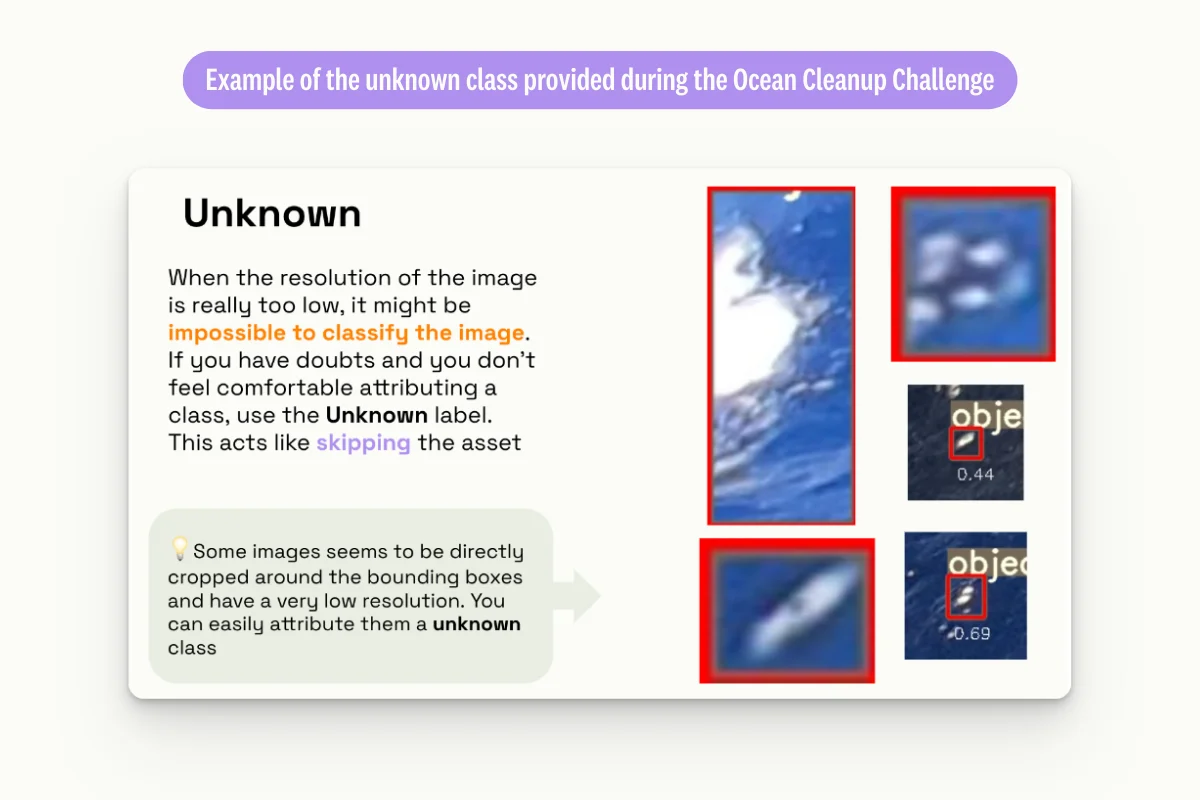

#7 - Provide a class for unknown/uncertain

This can help quickly identify where problems exist in the guidelines or ontology without the need for responding to each instance. Often patterns will arise which can help iteratively improve the annotation guideline document or the project ontology.

#8 - Allow for version control of guidelines

Labeling guidelines document must evolve during the project lifecycle. So, just as for datasets, it is important to version control your guidelines and have an approval process for updating them.

This continuous update of labeling guidelines will be key to progressively incorporating the knowledge discovered during the project into your guide.

After the update, the new guidelines link can be updated at the labeling project level for a seamless experience for the end users.

#9 - Let labelers know how they will be evaluated

This also focuses MLOps on understanding what they seek in annotated data. Setting the rules before starting will save headaches when reviewing the annotated data later.

Data Labeling Guidelines: DON’Ts

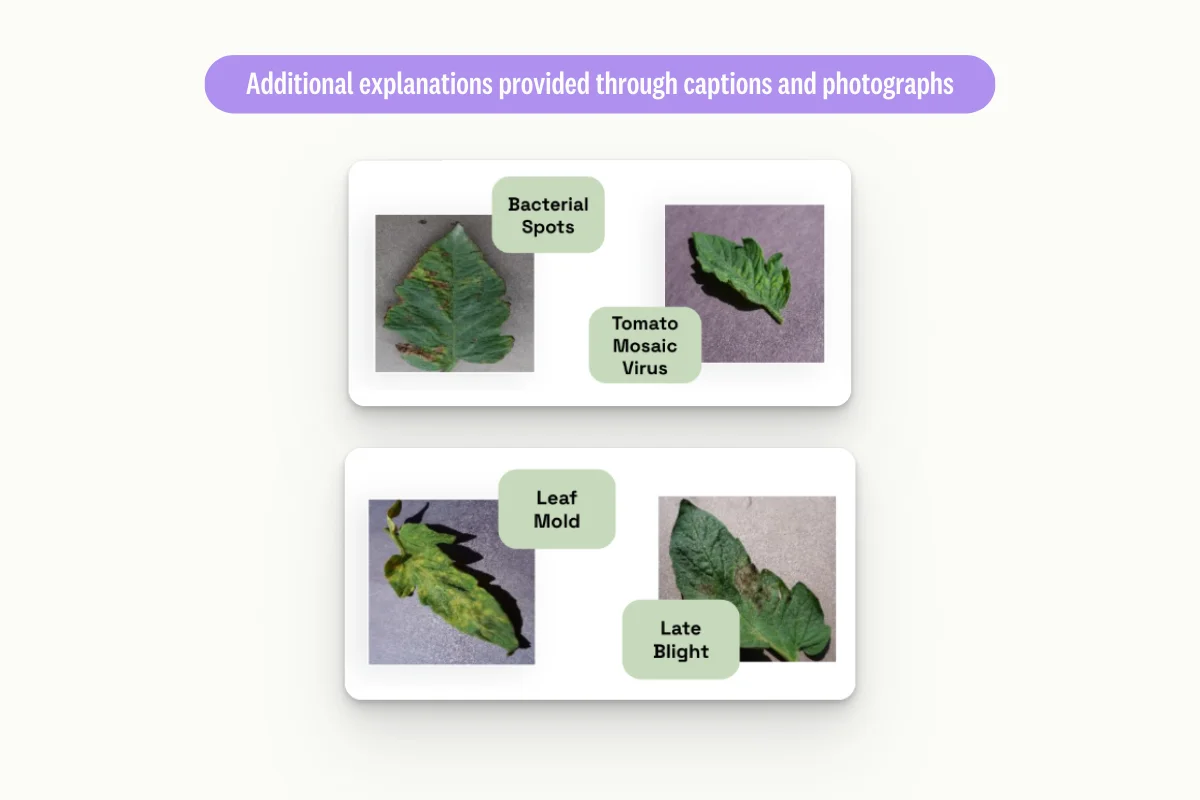

Don’t assume labelers can figure it out from text-based instructions only!

What you show is what you get! Examples are way easier and quicker to understand rather than long text paragraphs, especially in very domain-specific topics.

Don’t be overly prescriptive

Providing too much guidance can paralyze labelers, and it is often too hard to keep track of many complex rules, especially in document processing.

When starting out, it is important to find balance in guiding labelers to the correct annotation without constricting them - remember to utilize the honeypot/gold standard to evaluate training.

Example of a state-of-the-art guidelines structure

This structure example is based on the best practices observed and built with various Kili clients. It is a template to adapt based on specific business needs.

1. Introduction

- Context & AI objective

- Annotation task macro description

2. Labeling tasks description

- Asset (document, text, image) structure overview

- Ontology/classes description

- Management of uncertainty

- Labeling tooltips

3. Labeling rules & examples

- General rules & examples

- Class-specific rules & examples

4. Evaluation of annotated data

- Overview

- Evaluation metrics

- Acceptance criteria

Detailed content for each category:

Data Labeling Guidelines Structure

SectionContent I. Introduction This section will introduce the overall problem to the labeling team.I. a. Context & AI objectiveGlobal context and relevant elements on the AI & business objectives…I. b. Annotation task macro description… and macro description to introduce the labeling task. II. Labeling tasks descriptionThis second section will provide the overall description of the labeling task. II. a. Asset structure overviewDescription of the expected structure of the assets to receive, with their different parts if relevant. II. b. Ontology descriptionOverview of the global ontology, how its setup, and the associated classes. II. c. Management of uncertaintyAn important section to describe the process for the labeler to manage uncertain labeling tasks (e.g., other section, skip button, chat feedback…)II. d. Labeling tooltips Global description of the use of the interface with the configured ontology, including links to tool documentation if required and tips to facilitate use (e.g., use of shortcuts).III. Labeling rules & examplesThis third section will focus on the rules of labeling and illustrated examples.III. a. General rules & examplesRules & illustrations to annotate (e.g., number of boxes per object, overlapping rules…) at the overall level. Edge cases must be described here and illustrated.III. b. Class-specific rules & examples Rules & illustrations to annotate (e.g., number of boxes per object, overlapping rules…) for each class. Edge cases must be described here and illustrated.IV. Evaluation of annotated dataFinally, an explanation of the evaluation process can be relevant to sensibilize how to reach success. IV. a. OverviewMacro presentation of the strategy chosen to evaluate the quality.IV. b. Evaluation metricsDeep dive into the metrics chosen for the evaluation (consensus, honeypot, IoU, review step).IV. c. Acceptance criteriaClear definition of the acceptance for a given asset, with an illustration of the correction and acceptance.

Labeling Guidelines: focus on specific tasks

Classification

Classification is a simple task, but creating guidelines can still be a challenge, notably when having to manage numerous categories and complex nesting in the ontology. Emphasis has to be placed on the optimization of the search for a given category:

- Leveraging the search bar;

- Using codes to name each class to facilitate navigation;

- The correct choice of various display options.

Object detection

For object detection, the type of task impacts the complexity of the labeling (e.g., bounding box vs. semantic segmentation). Specific features may need to be highlighted to improve the user experience: shortcuts and smart tooling (interactive segmentation, tracking…).

In addition, the rules to detect the object must be carefully described in the guidelines: which part of the object should be labeled? How to manage partly hidden objects?

Relations

Relations can be constrained at job definition to facilitate the UX for the end user: it is advised to rely on this feature to facilitate the labeling jobs.

Named entity recognition

In named entity recognition, rules and perimeter of the entities to extract has to be carefully described in the guide: token size, type of token to consider…

.png)

_logo%201.svg)

.webp)