Stop us if you’ve heard this one before: Bigger is always better in AI, right? When it comes to language models, it seems like the race is all about size—larger datasets, more parameters, and massive computational power. But what if we told you that a smaller, more efficient model from Hugging Face could deliver the same, if not better, results?

Enter SmolLM, a compact yet powerful language model designed to challenge the status quo. In an industry obsessed with size, SmolLM proves that quality and precision can outweigh sheer volume. Built on a meticulously curated high-quality training corpus, which includes datasets like Cosmopedia v2 and Python-Edu, SmolLM isn’t just about being small; it’s about being smart.

Unlike larger models that demand extensive hardware and energy, SmolLM focuses on efficiency, making it ideal for applications where resources are limited but speed and accuracy are still crucial. This innovative model maintains the powerful capabilities of traditional language models while being faster and more adaptable, opening new possibilities for developers and researchers working with AI in various environments.

The SmolLM Corpus for training smaller Large Language Models

One of the standout features of SmolLM is the high-quality training data that powers its performance. Unlike many models that rely on vast but often unfiltered datasets, SmolLM’s development focused on curating a corpus that is both diverse and targeted. This careful curation is what allows SmolLM to punch above its weight class, delivering results that rival those of much larger models.

At the heart of SmolLM is the SmolLM-Corpus, a collection of datasets meticulously assembled to provide a broad range of knowledge while maintaining relevance and educational value. This corpus includes:

- Cosmopedia v2: This new high-quality dataset is a collection of synthetic textbooks, blog posts, and stories, totalling 28 billion tokens. Generated by Mixtral, Cosmopedia v2 is the largest synthetic dataset used in pre-training SmolLM. What makes Cosmopedia v2 exceptional is not just its size but its content. By using a "seed sample" approach, in which each generation prompt is built around a sample on a specific topic, Mixtral produced content that is both diverse and aligned with real-world applications. This method adds depth to the dataset, making SmolLM well-versed in a variety of topics.

- Python-Edu: As its name suggests, Python-Edu is focused on educational Python code samples. Sourced from The Stack, this dataset contains 4 billion tokens of carefully selected Python scripts and tutorials. This dataset enriches SmolLM with a strong foundation in programming, particularly in Python, one of the most widely used languages in AI and data science. This makes SmolLM an excellent tool for applications requiring programming knowledge or for generating code-based outputs.

- FineWeb-Edu: Rounding out the corpus is FineWeb-Edu, a dataset of educational web content amounting to 220 billion tokens. FineWeb-Edu is deduplicated, meaning that repetitive or overly similar content has been filtered out, leaving behind a rich, varied dataset. This ensures that SmolLM is not just parroting the same information but has a well-rounded understanding of a wide range of topics.
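To make the idea of deduplication concrete, here is a minimal sketch of exact-match deduplication in Python. It is an illustration only, not the pipeline used to build FineWeb-Edu: the `normalize` and `deduplicate` helpers are hypothetical names, and the sketch only catches documents that are identical after lowercasing and whitespace cleanup. Filtering "overly similar" content would need a fuzzy method (for example, MinHash), which is not shown here.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deduplicate(documents: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing normalized hashes."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


# Toy documents for illustration; the second differs only in case and spacing.
docs = [
    "Photosynthesis converts light into chemical energy.",
    "photosynthesis   converts light into chemical energy.",
    "A for loop repeats a block of code.",
]
unique_docs = deduplicate(docs)
print(len(unique_docs))
```

Hashing the normalized text rather than storing it keeps memory use roughly constant per document, which matters once a corpus grows to billions of tokens.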

These datasets collectively ensure that SmolLM models are not only knowledgeable but also capable of reasoning and making connections across different domains. The result is a model that, despite its smaller size, can outperform others in its class, particularly when it comes to common sense reasoning and world knowledge.
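To put the three token counts quoted above side by side, the short sketch below adds them up and computes each dataset's share of the raw corpus. The figures come straight from this article; note that raw size is not necessarily the same as the sampling mix used during training, which this post does not describe.

```python
# Token counts in billions, as quoted in this article.
corpus_tokens_billions = {
    "Cosmopedia v2": 28,
    "Python-Edu": 4,
    "FineWeb-Edu": 220,
}

total = sum(corpus_tokens_billions.values())
shares = {name: tokens / total for name, tokens in corpus_tokens_billions.items()}

print(f"Total: {total}B tokens")
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

By raw size, the corpus comes to 252 billion tokens, with FineWeb-Edu accounting for roughly 87% of it, Cosmopedia v2 about 11%, and Python-Edu under 2%.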

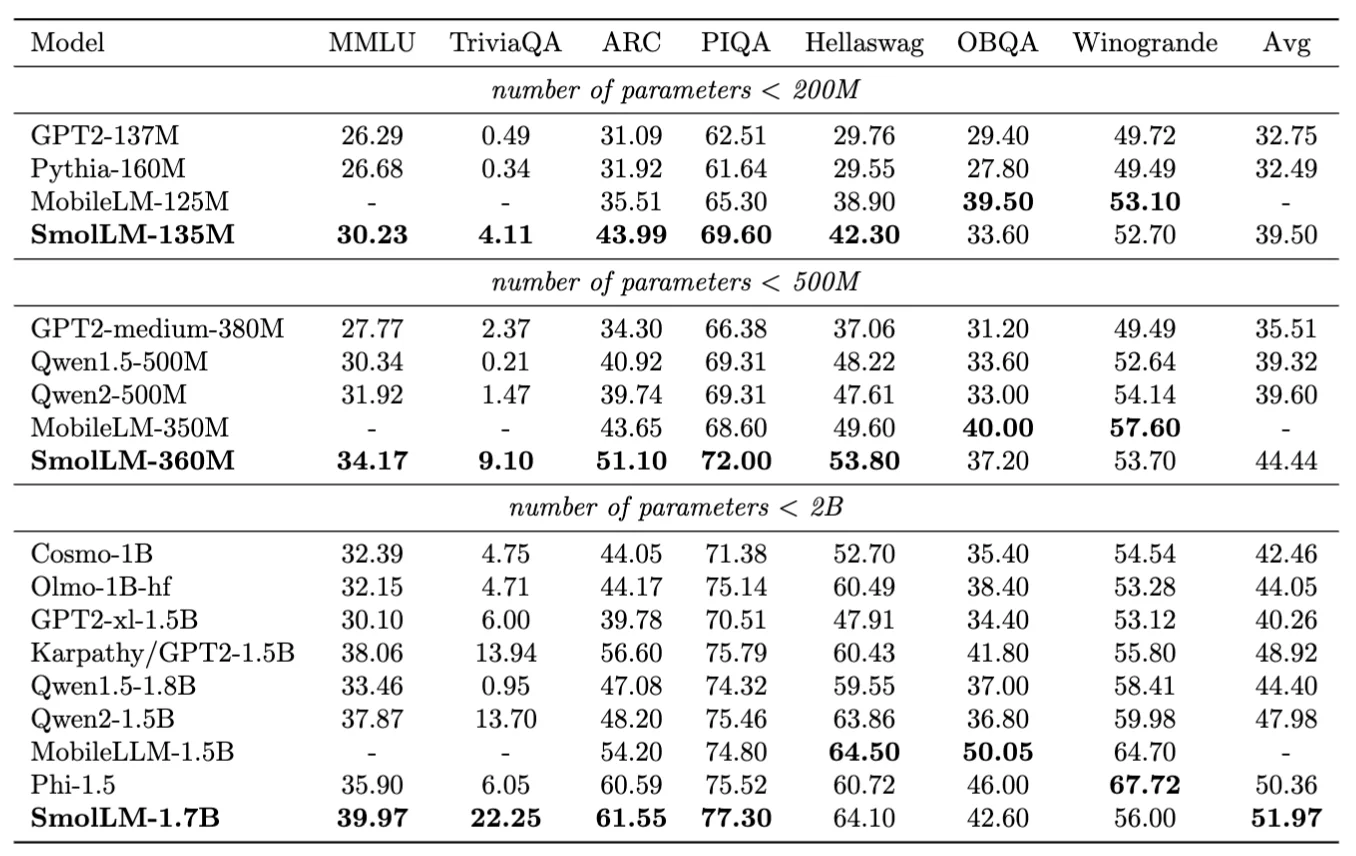

Evaluation of SmolLM models on different reasoning and common knowledge benchmarks.

Training and Evaluation of SmolLM

The impressive performance of SmolLM is due not only to its curated, high-quality corpus but also to the rigorous training and evaluation processes that ensure its efficiency and reliability. SmolLM models are crafted to maximize the potential of their smaller size, and the results speak for themselves.

Training Process

The training of SmolLM models was conducted with a clear focus on achieving optimal performance within the constraints of a smaller architecture. The key was not to merely replicate the training methods used for larger models but to adapt and refine them specifically for a more compact design. This approach involved several critical steps:

- Efficient Use of Resources: SmolLM was trained using techniques that optimise computational resources, ensuring that the model’s smaller size did not compromise its ability to learn complex patterns. By focusing on quality over quantity, the training process emphasised the depth of understanding rather than just the breadth of data coverage.

- Fine-Tuning with Specific Objectives: SmolLM underwent fine-tuning sessions tailored to enhance its performance on tasks that require common sense reasoning, world knowledge, and technical proficiency, particularly in Python. This targeted fine-tuning ensures that the model is not just well-trained but specifically adept at handling the kinds of queries and tasks it’s most likely to encounter.

- Continual Learning: The model was also trained with a continual learning approach, allowing it to adapt and improve over time as it encounters new data or tasks. This ensures that SmolLM remains relevant and effective in a rapidly evolving AI landscape.

- Reinforcement Learning: Advanced training methodologies, including reinforcement learning, were employed to incorporate human feedback and improve learning outcomes, further enhancing the model's performance.

Evaluation and Benchmarks

After training, SmolLM models were rigorously evaluated against a series of benchmarks designed to test their capabilities across a diverse set of tasks. These benchmarks included tests for common sense reasoning, world knowledge, and technical tasks, such as coding.

- Common Sense Reasoning: SmolLM was tested against other models in its size category and consistently outperformed them in tasks that required understanding and reasoning based on everyday knowledge. This highlights the model’s ability to not only store information but to apply it in a meaningful way.

- World Knowledge: On benchmarks that required a broad understanding of various topics, SmolLM again excelled, showing that its curated datasets provided a robust foundation for answering questions and making inferences about a wide range of subjects.

- Technical Proficiency: Perhaps most notably, SmolLM demonstrated strong performance in tasks involving Python programming. Thanks to the Python-Edu dataset, SmolLM can generate accurate and relevant code snippets, making it an invaluable tool for developers and educators alike.
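This article does not describe how the benchmark scores were computed. As a rough illustration, many common sense and world knowledge benchmarks are multiple-choice, and one widely used approach is to have the model score each answer option and count how often the top-scoring option is the correct one. The sketch below assumes that setup; `ScoredQuestion`, `predict`, and `accuracy` are hypothetical names, and the scores are made up rather than real SmolLM outputs.

```python
from dataclasses import dataclass


@dataclass
class ScoredQuestion:
    choice_scores: list[float]  # hypothetical per-choice log-likelihoods from a model
    correct_index: int          # index of the gold answer


def predict(question: ScoredQuestion) -> int:
    """Return the index of the highest-scoring choice."""
    scores = question.choice_scores
    return max(range(len(scores)), key=scores.__getitem__)


def accuracy(questions: list[ScoredQuestion]) -> float:
    """Fraction of questions where the top-scoring choice is the gold answer."""
    if not questions:
        return 0.0
    hits = sum(predict(q) == q.correct_index for q in questions)
    return hits / len(questions)


# Made-up scores for illustration only; these are not SmolLM results.
sample = [
    ScoredQuestion([-4.2, -1.3, -3.8, -5.0], correct_index=1),
    ScoredQuestion([-2.1, -2.9, -0.7, -3.3], correct_index=2),
    ScoredQuestion([-1.5, -0.9, -2.2, -4.1], correct_index=0),
    ScoredQuestion([-3.0, -3.4, -2.8, -0.6], correct_index=3),
]
print(accuracy(sample))
```

In this toy sample the third question is answered incorrectly, so the function reports three correct answers out of four.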

Overall, SmolLM’s training and evaluation processes underscore its effectiveness as a smaller model that doesn’t compromise on performance. By leveraging a curated dataset and focused training techniques, SmolLM manages to deliver results that are on par with, or even superior to, much larger models.

Use Cases for (Small) Large Language Models

SmolLM excels in various scenarios where efficiency and speed are paramount. For instance, it's particularly well-suited for edge computing, where devices operate with limited processing power and memory. Developers can use SmolLM in mobile applications, ensuring fast response times without draining battery life. It’s also ideal for real-time applications like chatbots and customer support systems, where latency can significantly impact user experience. Furthermore, SmolLM's compact size allows for easier deployment across different platforms, making it versatile for diverse AI-driven tasks.
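To get a feel for why a small model suits memory-constrained devices, a back-of-the-envelope estimate helps: the weights alone take roughly the number of parameters multiplied by the bytes used per parameter. The sketch below applies that rule of thumb. The parameter counts are the nominal sizes of the publicly released SmolLM checkpoints, which this article does not state, and the estimate ignores activations, the KV cache, and runtime overhead, so real usage will be higher.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1, "int4": 0.5}

# Nominal parameter counts of the released SmolLM checkpoints
# (an assumption brought in from the public release, not from this article).
MODEL_PARAMS = {"SmolLM-135M": 135e6, "SmolLM-360M": 360e6, "SmolLM-1.7B": 1.7e9}


def weight_memory_mb(num_params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


for name, params in MODEL_PARAMS.items():
    row = ", ".join(
        f"{dtype}: {weight_memory_mb(params, dtype):,.0f} MB" for dtype in BYTES_PER_PARAM
    )
    print(f"{name} -> {row}")
```

Under these assumptions, the smallest checkpoint needs about 270 MB for its weights in 16-bit precision, while the largest needs about 6.8 GB in 32-bit precision, which shows how much both model size and numeric precision matter on a phone or embedded device.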

SmolLM represents a significant advancement in the field of AI, offering a powerful solution for those seeking high performance without the high costs associated with larger models. Its efficiency makes it a versatile tool, ideal for applications ranging from mobile devices to real-time customer interactions. By balancing speed, accuracy, and resource usage, SmolLM empowers developers and researchers to deploy cutting-edge AI technology in a more accessible and sustainable way.

Real-World Applications of Small Language Models

The true value of any language model lies in its practical applications, and SmolLM is no exception. Despite its smaller size, SmolLM is designed to excel in a variety of real-world scenarios, offering both efficiency and versatility across different domains. Here’s a look at some of the key areas where SmolLM shines:

1. Educational Tools and Content Generation

One of the most promising applications of SmolLM is in the field of education. Thanks to its training on datasets like Cosmopedia v2 and Python-Edu, SmolLM is particularly adept at generating educational content. Whether it’s creating synthetic textbooks, drafting tutorials, or answering student queries, SmolLM can serve as a powerful tool for educators and learners alike. Additionally, because open-source models can be run on an institution's own infrastructure, they offer more privacy and control over sensitive data.

For example, an online education platform could integrate SmolLM to generate personalised study materials tailored to individual learning needs. This could range from simplified explanations of complex topics to creating coding exercises in Python. The model’s ability to produce high-quality, accurate content makes it an invaluable resource for both self-learners and formal educational institutions.

2. Code Assistance and Automation

SmolLM’s proficiency in Python, bolstered by the Python-Edu dataset, makes it an excellent candidate for code assistance and automation tasks. Developers can use SmolLM to generate code snippets, debug issues, or even write complete functions based on natural language prompts. This capability can significantly speed up the development process, especially for tasks that require repetitive coding or when dealing with large codebases.

Furthermore, SmolLM can be used in integrated development environments (IDEs) to provide real-time coding suggestions, making it easier for developers to write clean, efficient code. Its smaller size also means that it can be integrated into lightweight applications, making advanced code assistance accessible even on devices with limited computational power.
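As a rough idea of what a natural-language-to-code workflow might look like, here is a minimal sketch using the `pipeline` helper from the Hugging Face `transformers` library. Several things here are assumptions rather than details from this article: the `build_code_prompt` and `generate_code` helpers are hypothetical, the prompt wording is arbitrary, and the model id `HuggingFaceTB/SmolLM-360M-Instruct` is assumed to be the name of an instruction-tuned checkpoint on the Hugging Face Hub. Calling `generate_code` requires `transformers` to be installed and downloads the model on first use.

```python
def build_code_prompt(task: str, language: str = "Python") -> str:
    """Turn a natural-language task into a plain instruction prompt."""
    return (
        f"Write a {language} function for the task below. "
        "Return only code.\n\n"
        f"Task: {task.strip()}\n"
    )


def generate_code(task: str, model_id: str = "HuggingFaceTB/SmolLM-360M-Instruct") -> str:
    """Generate code with a text-generation pipeline (model id is an assumption)."""
    # Imported lazily so the prompt helper works without transformers installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_id)
    result = generator(build_code_prompt(task), max_new_tokens=128, do_sample=False)
    # By default the returned text contains the prompt followed by the completion.
    return result[0]["generated_text"]


# Building the prompt needs no model download.
prompt = build_code_prompt("  reverse a string  ")
print(prompt)
```

For an instruction-tuned model, formatting the request with the model's chat template would likely give better results than a raw string; the plain prompt is used here only to keep the sketch short. Generated code should always be reviewed and tested before use.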

3. Customer Support and Chatbots

In customer support, response time and accuracy are crucial. SmolLM’s ability to process and generate human-like text makes it ideal for developing intelligent chatbots that can handle customer queries effectively. By drawing on its extensive training data, SmolLM can provide detailed, contextually relevant responses across a wide range of topics. SmolLM's text generation capabilities are particularly beneficial for creating engaging and accurate responses, enhancing the overall customer experience.

For businesses, this means the ability to deploy cost-effective, efficient customer support solutions without compromising on the quality of service. Whether it’s answering frequently asked questions, troubleshooting common issues, or guiding users through complex processes, SmolLM-powered chatbots can enhance the customer experience while reducing the burden on human support teams.

4. Research and Development

For researchers and developers working on AI and machine learning projects, SmolLM offers a flexible and powerful tool for experimentation. Its smaller size allows for quicker iteration and testing, making it easier to explore new ideas without the resource demands of larger models. Whether it’s for prototyping new algorithms, testing hypotheses, or conducting exploratory data analysis, SmolLM provides a robust foundation for innovation.

Moreover, SmolLM’s ability to integrate world knowledge and common sense reasoning makes it a valuable asset in areas like natural language processing, where understanding context and nuance is critical. Researchers can leverage SmolLM to develop more advanced AI systems that require a deep understanding of language and its various applications.

SmolLM’s versatility across these domains demonstrates that a smaller model can still deliver powerful, impactful results. By focusing on specific strengths and targeted applications, SmolLM proves that efficiency and effectiveness can go hand in hand.

At Kili Technology, we offer comprehensive services to ensure your AI model performs at its best:

- LLM Alignment Services: Our platform specialises in aligning your AI model with your specific goals and industry needs. We help refine and fine-tune your model to maximise its impact on your operations.

- LLM Evaluation Services: With our evaluation services, you can continuously monitor your model’s performance, identify weaknesses, and receive expert guidance on improvement strategies. Our tools and expertise ensure that your model remains effective and relevant in your business.

Conclusion

In this post, we saw that SmolLM is more than just a smaller language model; it’s a shift in how we think about efficiency and performance in AI. By focusing on quality training data, targeted fine-tuning, and rigorous evaluation, SmolLM shows that bigger isn’t always better. This model delivers strong results across a variety of applications, from education and coding to customer support and research, making it one of the most capable models in its size class.

In an industry where the trend often leans towards ever-larger models, SmolLM stands out by offering a more streamlined, effective alternative. Whether you’re looking to integrate AI into your educational tools, enhance your development process, or deploy smarter chatbots, SmolLM provides the capability and reliability you need, all without the heavy resource demands of larger models.
