With the boom of generative AI and large language models (LLMs), it makes sense for businesses and AI teams to use these technologies to advance their applications and models. But don't rush in blindly: we have some key insights and a free eBook from O'Reilly to share with you.

Introduction

The rise and evolution of foundation models and large language models (LLMs) this year have unleashed a transformative revolution in the field of artificial intelligence (AI). However, their true potential can only be unlocked with the support of high-quality, well-structured training data. As we explore this complex terrain, let's dive into seven critical insights from O'Reilly's eBook "Training Data for Machine Learning."

These insights offer a glimpse into the art of constructing training datasets that empower these advanced AI models, enabling them to conquer an ever-expanding array of use cases in our fast-paced digital era.

Intrigued? This is just the tip of the iceberg.

Explore these expert insights in full by grabbing an early access copy of O'Reilly's eBook, "Training Data for Machine Learning." Don't miss this chance to deepen your understanding of dataset construction and set the stage for success in your AI endeavors.

The Crucial Role of High-Quality, Representative, and Real-World Aligned Training Data

Having high-quality data remains crucial, even as foundation models and LLMs advance. The effectiveness of any AI/ML system, including LLMs, fundamentally depends on the quality of its training data. LLMs and foundation models aren't perfect: they make errors and can perform poorly on domain-specific tasks. We can address these issues by fine-tuning and adapting them with high-quality data.

Several papers have demonstrated this approach. For instance, Meta's paper "LIMA: Less Is More for Alignment" shows how the authors turned a pretrained large language model into a conversational, chatbot-style model, which they call LIMA. They achieved this with just 1,000 carefully selected training examples, a significant reduction compared to the 50,000 examples used by OpenAI.

Out of the 1,000 examples, only 250 were manually created. This reduction was made possible by meticulously sampling examples for quality and diversity. Despite the lower quantity, the fine-tuned LIMA performed almost on par with GPT-3.5. This implies that fine-tuning these models with a limited number of high-quality examples can be enough to compete with state-of-the-art models, which highlights the immense value of high-quality training data.
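One way to picture sampling for quality and diversity is a simple stratified filter. The sketch below is not the procedure from the LIMA paper. It assumes each candidate example already carries a hypothetical `quality` score and a `topic` field (both names are ours), drops low-quality candidates, and then keeps only the best few per topic so that no single topic dominates.

```python
from collections import defaultdict


def select_examples(examples, per_group, min_quality):
    """Keep the highest-quality examples from each topic (hypothetical fields)."""
    groups = defaultdict(list)
    for ex in examples:
        # Quality filter: discard anything below the threshold.
        if ex["quality"] >= min_quality:
            groups[ex["topic"]].append(ex)

    selected = []
    # Diversity: take at most `per_group` examples from every topic.
    for topic in sorted(groups):
        best = sorted(groups[topic], key=lambda ex: ex["quality"], reverse=True)
        selected.extend(best[:per_group])
    return selected


# Illustrative candidate pool; the scores are made up for the example.
pool = [
    {"id": 1, "topic": "cooking", "quality": 0.9},
    {"id": 2, "topic": "cooking", "quality": 0.8},
    {"id": 3, "topic": "cooking", "quality": 0.4},
    {"id": 4, "topic": "coding", "quality": 0.95},
    {"id": 5, "topic": "travel", "quality": 0.3},
]
chosen = select_examples(pool, per_group=1, min_quality=0.5)
print([ex["id"] for ex in chosen])
```

In practice the quality score would come from human review or a scoring model, and the grouping key could be any attribute you want the dataset to cover evenly.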

Generative AI: An Aid, Not a Substitute

Generative AI can be used to increase the diversity and size of training datasets by automatically expanding them with relevant examples, which is particularly useful for rare classes. It is also possible to use generative techniques to filter out low-quality data or fill in missing attributes.

When using generative AI, it is important to consider a few key points:

- Quality and Diversity: The generated data should be of high quality and encompass a diverse range of scenarios the model will likely encounter in the real world. This ensures that the model's training is comprehensive and representative.

- Ethical Implications: Generative AI can potentially create misleading or biased data if not used carefully. It is essential to consider the ethical implications and take steps to mitigate any biases or inaccuracies in the generated data.

- Human Supervision: Human alignment is crucial in the context of generative AI. Human supervision is necessary to guide the generative process, review the generated data, and ensure that it aligns with the intended objectives.

Humans must review all generated examples to ensure that they cover a wide range of values for each attribute, however minor, and represent real-world data. This review process should also include checking for potential biases in the generated data to ensure fairness and accuracy.

Generative AI is handy when used with caution and a human-in-the-loop approach. With its help, we can construct high-quality training datasets tailored to the specific problem at hand while avoiding costly errors.
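A small script can support the human review described above by showing which attribute values the generated examples never cover. This is a minimal sketch: the attribute names and values (`weather`, `time_of_day`) are hypothetical, and the report is meant to guide reviewers, not replace them.

```python
from collections import Counter


def attribute_coverage(examples, expected_values):
    """For each attribute, count observed values and list expected ones that are missing."""
    report = {}
    for attribute, expected in expected_values.items():
        counts = Counter(ex.get(attribute) for ex in examples)
        missing = sorted(value for value in expected if counts[value] == 0)
        report[attribute] = {"counts": dict(counts), "missing": missing}
    return report


# Hypothetical generated examples and the values we expect to see covered.
generated = [
    {"weather": "sunny", "time_of_day": "day"},
    {"weather": "sunny", "time_of_day": "night"},
    {"weather": "rain", "time_of_day": "day"},
]
expected = {
    "weather": ["sunny", "rain", "snow"],
    "time_of_day": ["day", "night"],
}

report = attribute_coverage(generated, expected)
for attribute, info in report.items():
    print(attribute, "missing:", info["missing"])
```

Here the report would flag that no generated example covers `snow`, which tells reviewers where to request or write additional examples.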

High-Quality Training Data Is a Long-Term Necessity

Training data isn't a one-off requirement. The need for well-curated training data will persist as AI/ML transitions from research and development to industry applications. Continuously feeding your model with high-quality training data will improve the following:

- Model Performance: High-quality training data directly impacts the performance of AI models. The more diverse and representative the training data, the better equipped the model becomes to handle various scenarios and make accurate predictions.

- Adaptability: As the world evolves, so do the challenges and contexts AI models need to address. Continuously updating and expanding the training data allows models to adapt and stay relevant over time.

- Generalization: AI models aim to generalize patterns and make predictions in real-world situations. A large and diverse training dataset helps models learn generalized rules rather than memorizing specific examples, enabling them to handle a wide range of inputs effectively.

To remain competitive in this ever-changing landscape, it is essential to continuously invest in creating and expanding training data that is well-structured and represents real-world scenarios. We've also prepared a whitepaper on Data-Centric AI.

Design the Schema Strategically

A well-structured data labeling guideline is a critical tool, and we provide one on our blog. The schema is an equally critical aspect of training data design, yet it often doesn't receive enough consideration. A poor schema can severely hinder the system's ability to learn, while a well-thought-out schema can unlock much more complex capabilities.

Designing the schema involves defining the structure and format of the training data. This includes determining the data modalities, such as images or text, and how the data will be labeled or annotated. The labeling process encompasses defining the target categories or concepts through classes, adding additional descriptive labels through attributes, establishing relationships between data instances, and incorporating geometric annotations like bounding boxes.

In addition, it is necessary to decide on the taxonomy or ontology that will be used to define and organize the classes or concepts. Another consideration is whether to segment or decompose the data into parts or keep them as whole objects. Descriptive metadata also needs to be captured and represented appropriately. Furthermore, determining the types of user interactions that will provide valuable signals, such as clicks or corrections, is essential. Finally, the method of encoding and serializing the data, such as CSV, JSON, or a database, must be chosen.

When designing the schema, several vital goals should be kept in mind. First, it should enable the machine learning model to learn the desired concepts effectively. Consistency across data instances is crucial to ensure reliable results. The schema should also allow for evolution and adaptation as needs change over time. Incorporating quality control mechanisms is vital to maintain data integrity. Lastly, keeping the schema understandable to human annotators facilitates efficient and accurate annotation.
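To make these design decisions concrete, here is a minimal sketch of a schema for image annotation. Everything in it is an assumption for illustration: the class names, the taxonomy, the field names, and the choice of JSON as the serialization format. It shows classes, attributes, relationships, bounding boxes, and metadata in one structure, plus a small validation step as a quality control mechanism.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class BoundingBox:
    x: float       # left edge, in pixels
    y: float       # top edge, in pixels
    width: float
    height: float


@dataclass
class Annotation:
    annotation_id: str
    label: str                                       # class taken from the taxonomy
    box: BoundingBox                                 # geometric annotation
    attributes: dict = field(default_factory=dict)   # extra descriptive labels
    related_to: list = field(default_factory=list)   # ids of related annotations


@dataclass
class LabeledImage:
    image_uri: str
    annotations: list
    metadata: dict = field(default_factory=dict)


# Hypothetical two-level taxonomy: parent concept -> allowed classes.
TAXONOMY = {"vehicle": ["car", "truck"], "person": ["pedestrian", "cyclist"]}


def validate(sample, taxonomy):
    """Return a list of schema violations for one sample (empty if consistent)."""
    allowed = {leaf for leaves in taxonomy.values() for leaf in leaves}
    known_ids = {a.annotation_id for a in sample.annotations}
    errors = []
    for a in sample.annotations:
        if a.label not in allowed:
            errors.append(f"{a.annotation_id}: unknown class {a.label!r}")
        if a.box.width <= 0 or a.box.height <= 0:
            errors.append(f"{a.annotation_id}: empty bounding box")
        for target in a.related_to:
            if target not in known_ids:
                errors.append(f"{a.annotation_id}: dangling relation to {target!r}")
    return errors


sample = LabeledImage(
    image_uri="images/0001.jpg",
    annotations=[
        Annotation("a1", "cyclist", BoundingBox(10, 20, 50, 120), {"occluded": False}),
        Annotation("a2", "car", BoundingBox(200, 80, 180, 90), {"color": "red"}, ["a1"]),
    ],
    metadata={"source": "dashcam"},
)

errors = validate(sample, TAXONOMY)
serialized = json.dumps(asdict(sample), indent=2)
print(errors)
print(serialized)
```

Keeping the taxonomy as data rather than hard-coding it makes it easier to evolve the schema later: adding a class changes one dictionary, and the validator immediately reports which existing annotations no longer conform.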

The Essential Role of Domain Experts in Data Annotation

Domain experts are indispensable in constructing high-quality training data for AI systems. Their expertise is critical for translating complex real-world concepts into labeled examples that machine learning algorithms can learn from.

First and foremost, domain knowledge is essential for designing the schema and taxonomy that underpins the training data. What are the important classes, attributes, and relationships that need to be captured? How should ambiguous cases be handled? An expert's understanding of the problem space informs these structural decisions.

Additionally, domain experts provide vital human judgment and nuance when actually annotating the data. ML models can struggle with the subtleties and exceptions that specialists handle with aplomb. For example, radiologists can inject their medical knowledge to identify ambiguous tumors or lesions when labeling medical images. The same goes for seasoned mechanical engineers annotating defect patterns in industrial inspection footage.

Furthermore, experts can spot illogical or unrealistic data artifacts that algorithms might take as ground truth. Reviewing samples during annotation improves data quality and representation. Their expertise also speeds up the labeling process compared to non-experts.

Lastly, domain specialists are crucial in evaluating model performance and results. They can best discern cases where the AI errs due to limitations of the training data. This allows for iterative refinement of the datasets to address these gaps.

In summary, access to scarce domain expertise is crucial for developing robust AI systems grounded in real-world knowledge. Leveraging subject matter experts throughout the training data pipeline enables translating this expertise into machine learning models that actually work. The human touch remains invaluable.

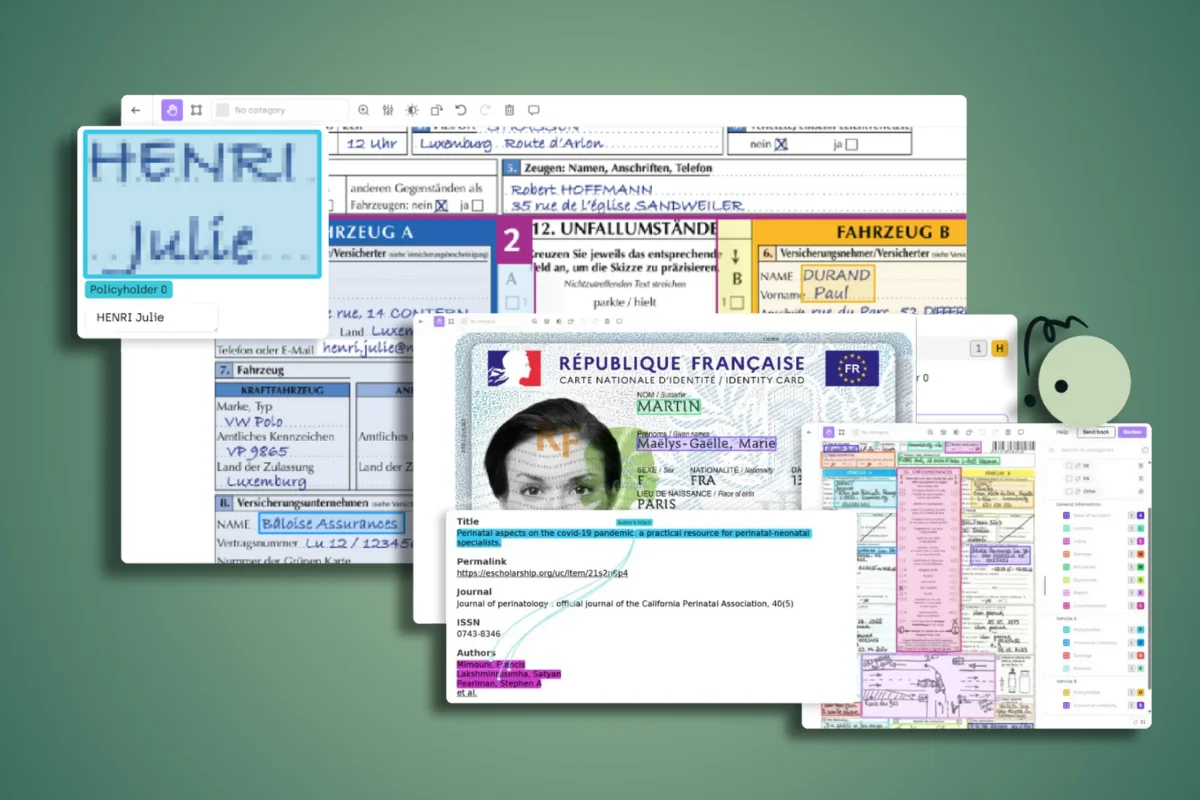

Early Integration with Annotation Tools

Integrating a data labeling tool early lets you test end-to-end annotation workflows and identify and address potential issues. It also lets you iterate on schemas quickly. Schema changes that require re-annotating data are costly after the fact, so it pays to surface them as soon as possible. Early integration also makes it possible to spot dataset quality issues and bias problems sooner.

Furthermore, the right tools make it easier to measure and fine-tune annotation speed and inter-annotator agreement. Tools also directly shape the interfaces and experiences of human annotators, and understanding a tool's capabilities tells you whether automation can be leveraged to enhance the annotation process.
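Annotation agreement can be quantified in several ways. One common choice for two annotators is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain-Python illustration with made-up labels; it is not tied to any particular labeling tool.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")

    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: probability both pick the same label independently,
    # given each annotator's own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels from two annotators on six items.
annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog", "cat", "cat"]

kappa = cohen_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")  # prints "kappa = 0.33"
```

A value near 1 indicates strong agreement, while a value near 0 means the annotators agree no more often than chance would predict, which usually points to unclear guidelines or an ambiguous schema.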

Some more tips when choosing a data labeling tool:

- Accuracy and Quality Control: Look for a tool that ensures high data labeling accuracy and provides quality control mechanisms, such as human-in-the-loop validation or consensus-based annotations.

- Scalability: Consider a tool that can handle large volumes of data and scale with your needs. This is particularly important if you anticipate an increase in data labeling requirements.

- Annotation Flexibility: Choose a tool that supports various annotation types, such as bounding boxes, semantic segmentation, or text classification. This flexibility allows you to label different types of data effectively.

- Collaboration and Project Management: Look for features that enable collaboration among annotators, project managers, and stakeholders. This can include task assignment, annotation review workflows, and communication channels.

- Integration and APIs: Consider whether the tool integrates smoothly with your existing infrastructure and workflows. Look for APIs or SDKs that allow easy integration with your data pipelines or machine learning frameworks.

- Data Security and Privacy: Ensure that the tool provides measures to protect the confidentiality of your data and complies with applicable data protection regulations.

- Customization and Extensibility: Look for a tool that allows you to customize annotation interfaces, workflows, and instructions to match your specific needs. Extensibility through plugins or custom scripting can also be valuable for advanced use cases.

Overall, considering the tool's capabilities when designing the schema, integrating it early, and leveraging it to its full potential can significantly improve the efficiency and effectiveness of the annotation process.

Getting a basic end-to-end annotation workflow with a sample schema into the tools very early, even as a prototype, avoids many pitfalls. Annotators can work with actual data and give feedback. The schema can be refined quickly. Data quality can be measured. Integrating late leads to more rework and wasted effort.
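The consensus-based annotation mentioned among the tool criteria above can be prototyped in a few lines while you evaluate tools. This sketch assumes a simple majority vote with a hypothetical `min_agreement` threshold; items that fall below it get no label and are routed to a human reviewer. Real labeling tools may implement consensus differently.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.6):
    """Return (label, agreement); label is None when agreement is below the threshold."""
    if not votes:
        raise ValueError("at least one vote is required")
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    if agreement < min_agreement:
        return None, agreement  # no consensus: send the item to review
    return label, agreement


# Made-up votes from three annotators on two items.
clear_item = consensus_label(["defect", "defect", "ok"])
disputed_item = consensus_label(["defect", "ok", "scratch"])
print(clear_item)
print(disputed_item)
```

Tracking how many items end up without consensus is itself a useful data quality signal during early integration.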

Security is Paramount

Ensuring data security is essential for any AI project and should never be compromised. One effective way to protect sensitive information during the training phase is by implementing robust access control measures. For companies, prioritizing data security is crucial to safeguard proprietary data and comply with data privacy regulations. It is also important to choose a data labeling platform that guarantees confidentiality and protects sensitive data from unauthorized access or breaches.

Companies like Kili Technology value strong security measures, including secure hosting options, authentication protocols, and encrypted data storage. Additionally, leading data labeling tools offer secure integrations with major cloud storage providers like AWS S3, Azure Blob Storage, and Google Cloud, so that data stays protected in transit.

In addition to these features, responsible data labeling software providers must comply with rigorous security and privacy standards and regulations, such as SOC 2, ISO, HIPAA, and GDPR. They protect data through measures such as data encryption, strict access controls, and regular security audits.
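As an illustration of the strict access controls mentioned above, here is a minimal role-based access control sketch. The roles and permissions are hypothetical and do not describe any specific platform; the point is the deny-by-default check, where an unknown role receives no permissions at all.

```python
from enum import Enum, auto


class Permission(Enum):
    VIEW_ASSET = auto()
    LABEL_ASSET = auto()
    REVIEW_LABEL = auto()
    EXPORT_DATA = auto()


# Hypothetical mapping of roles to the permissions they are granted.
ROLE_PERMISSIONS = {
    "labeler": {Permission.VIEW_ASSET, Permission.LABEL_ASSET},
    "reviewer": {Permission.VIEW_ASSET, Permission.LABEL_ASSET, Permission.REVIEW_LABEL},
    "admin": set(Permission),
}


def is_allowed(role, permission):
    """Deny by default: roles that are not listed get no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("admin", Permission.EXPORT_DATA))
print(is_allowed("labeler", Permission.EXPORT_DATA))
print(is_allowed("guest", Permission.VIEW_ASSET))
```

Restricting export rights to a small set of roles is one simple way to limit how far sensitive training data can travel.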

Conclusion

In conclusion, curating training data for AI systems is a delicate and intricate endeavor involving many factors. The critical aspects of this process are prioritizing data quality, drawing on the input of subject matter experts, integrating annotation tools early, and maintaining a steadfast commitment to data security. Selecting the right data labeling tool can significantly affect the efficiency and effectiveness of your AI training pipeline: a sophisticated tool offers accuracy, scalability, flexibility, collaboration, and extensibility. Furthermore, with data being an organization's most valuable asset, choosing a platform that prioritizes robust security measures is crucial.

O'Reilly Training Dataset eBook

The journey of AI development is paved with meticulous planning and strategic decisions, and every step taken toward enhancing the quality of your training data is a leap toward a more accurate and reliable AI system.

That's why we partnered with O'Reilly to bring you this book on training data, which lays out a roadmap for successful AI projects and helps you make informed decisions. The early access version is available to download for free right now!
