A Data Story of the GLM Model Family: From GLM (2021) to GLM-5 (2026)

GLM-5's paper has just been published. Let's take a deep dive into the GLM model family to see how its models have been trained through their data pipelines.

Learn the latest techniques for building high-quality datasets for better-performing AI.

LLM-as-a-judge and HITL aren’t competing approaches — they’re complementary layers. This article covers the practical keys to making both work together reliably in enterprise AI systems.

What's the difference between LLM-as-a-judge, HITL, and HOTL workflows? We cover this and provide practical tips for each application in our latest guide.

How new annotation tools are transforming workflows from electronics inspection to agricultural monitoring

This guide will walk you through everything you need to know about OCR data labeling, from understanding the fundamentals to implementing quality workflows that scale across your organization.

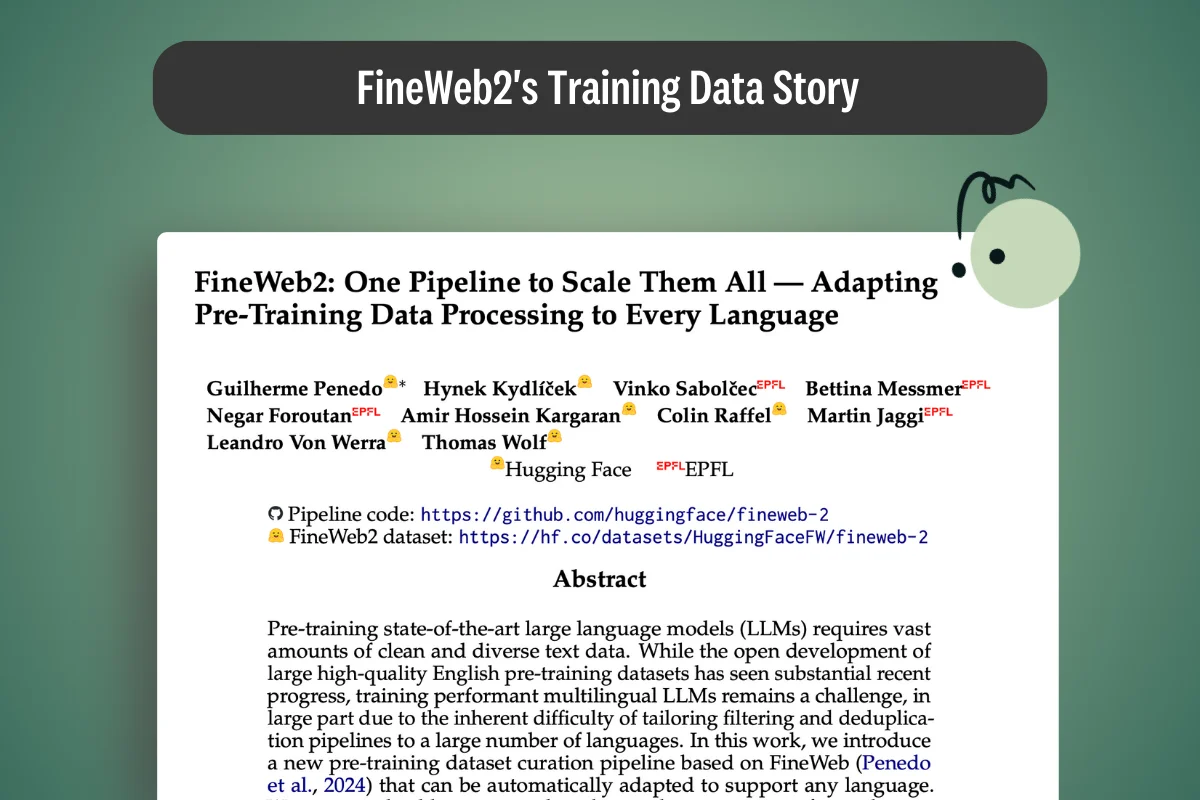

Explore the FineWeb2 dataset: 20TB of multilingual pre-training data covering 1,000+ languages. Learn how its filtering pipeline builds better LLMs.

Intelligent Document Processing (IDP) minimises human errors by automating data entry. Learn more about what IDP is, how it works and its benefits for modern enterprises.
