Geospatial data annotation requires far more than drawing bounding boxes on satellite imagery. As machine learning engineers push the boundaries of geospatial data analysis and apply geographic information systems (GIS) in advanced sectors like geospatial intelligence (GEOINT), location intelligence, and surveillance, the quality of annotations has become the critical differentiator for geospatial AI model performance.

These applications demand high-quality labeled geospatial data to run effectively in production. Consequently, the focus of successful large-scale data annotation projects must shift from sheer volume to advanced techniques. This article explores these methods, which are essential for creating the training data that AI models need to perform reliably.

Multi-Modal Annotation Fusion Techniques

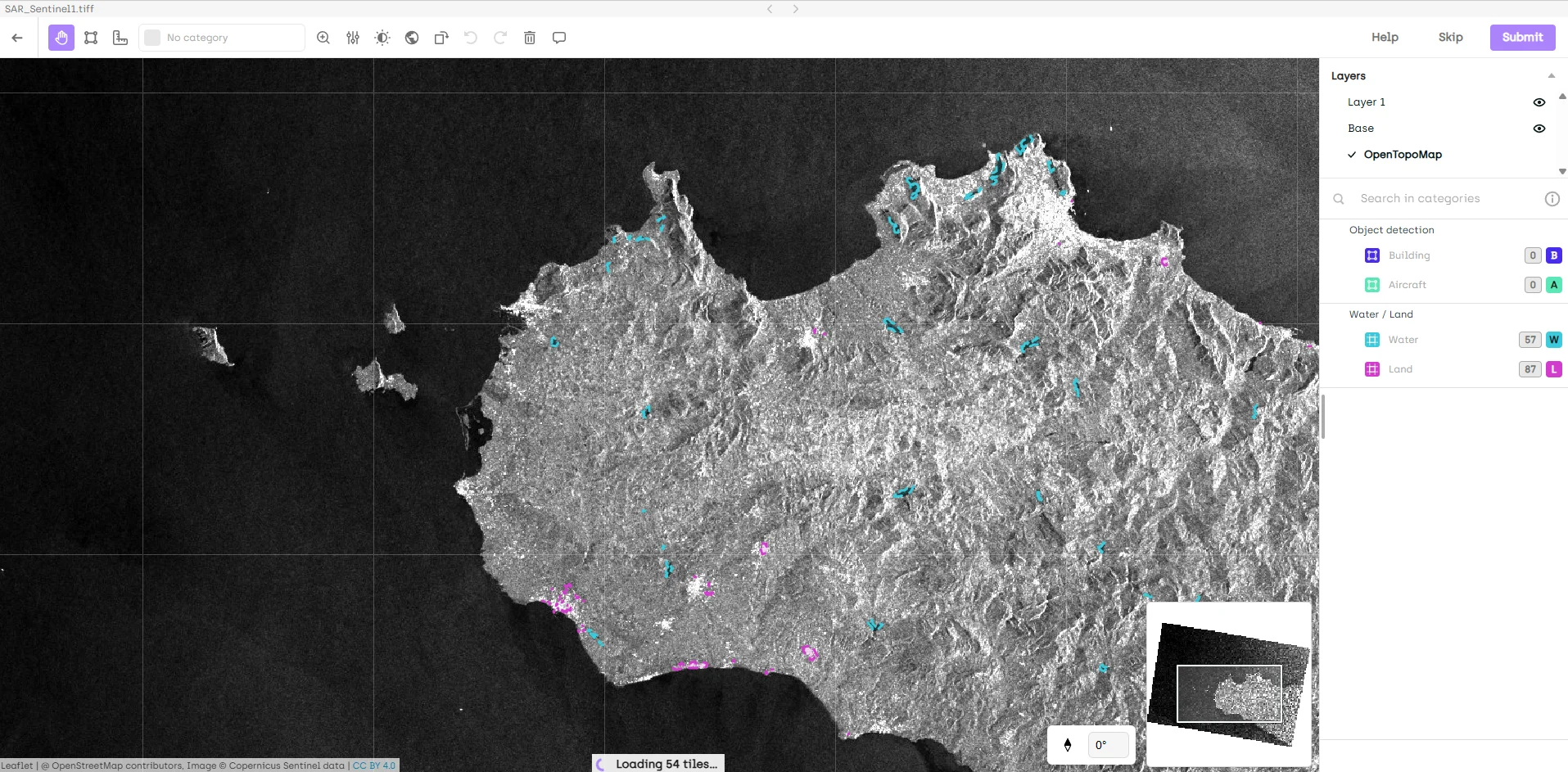

The complexity of real-world geospatial analysis rarely fits neatly into single-sensor paradigms, which is why advanced annotation workflows leverage multiple data modalities to create more accurate training datasets. This technique involves managing a mix of data types: raster data from satellite and aerial imagery (e.g., optical and SAR) and vector overlays (points, lines, and polygons) that represent known geographic features or basemaps. Layering these diverse datasets effectively requires scalable annotation solutions that can handle the fusion during the annotation process itself. This allows human experts to validate features and resolve ambiguities, creating high-quality data for even the most demanding multi-modal projects.

Cross-Sensor Validation Approaches

One of the most powerful techniques for improving annotation quality is cross-sensor validation, which involves using complementary geospatial data sources to validate and refine labels. Synthetic Aperture Radar (SAR) imagery, for instance, penetrates cloud cover that often obscures optical imagery. By overlaying SAR data with optical imagery in your annotation workflow, annotators can verify the presence of objects even when the primary optical source is partially obscured.

Consider a port monitoring application where persistent cloud cover makes consistent optical annotation challenging. By incorporating SAR imagery as a validation layer, annotators can confirm the presence and boundaries of ships, containers, and infrastructure elements that might be partially hidden in the optical data. This approach of using multiple data sources allows annotators to more accurately analyze and validate features, significantly reducing false negatives and improving boundary precision.
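The port example can be sketched as a simple triage rule. This is a minimal illustration rather than any platform's actual logic: the `Box` type, the function names, the thresholds, and the assumption that the SAR layer has been co-registered with the optical image and normalized to a 0 to 1 strength value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    label: str
    row0: int
    col0: int
    row1: int  # exclusive
    col1: int  # exclusive


def window_mean(grid, box):
    """Mean of the grid values that fall inside the box."""
    values = [
        grid[r][c]
        for r in range(box.row0, box.row1)
        for c in range(box.col0, box.col1)
    ]
    return sum(values) / len(values)


def review_status(box, cloud_mask, sar_strength, cloud_limit=0.3, sar_floor=0.5):
    """Decide how much to trust an optical annotation.

    cloud_mask holds 1 for cloudy pixels and 0 for clear ones.
    sar_strength holds normalized backscatter on the same pixel grid.
    Both thresholds are illustrative and would need tuning per sensor.
    """
    if window_mean(cloud_mask, box) <= cloud_limit:
        return "optical_ok"
    if window_mean(sar_strength, box) >= sar_floor:
        return "confirmed_by_sar"
    return "needs_review"


cloud_mask = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
sar_strength = [
    [0.1, 0.1, 0.9, 0.8],
    [0.1, 0.1, 0.9, 0.8],
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
boxes = [
    Box("ship", 0, 0, 2, 2),
    Box("ship", 0, 2, 2, 4),
    Box("ship", 2, 2, 4, 4),
]
statuses = [review_status(b, cloud_mask, sar_strength) for b in boxes]
```

Annotations under heavy cloud with no supporting SAR return are the ones worth a second look, which is where false negatives and loose boundaries tend to hide.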

Thermal imagery provides another valuable validation layer, particularly for infrastructure and industrial applications. Heat signatures can reveal active facilities, distinguish between similar-looking structures, and provide crucial context that isn't visible in standard optical imagery. An annotator working on industrial site classification can use thermal data to differentiate between active and dormant facilities, improving the semantic accuracy of their geospatial annotations.

Multispectral Layering and Basemap Integration

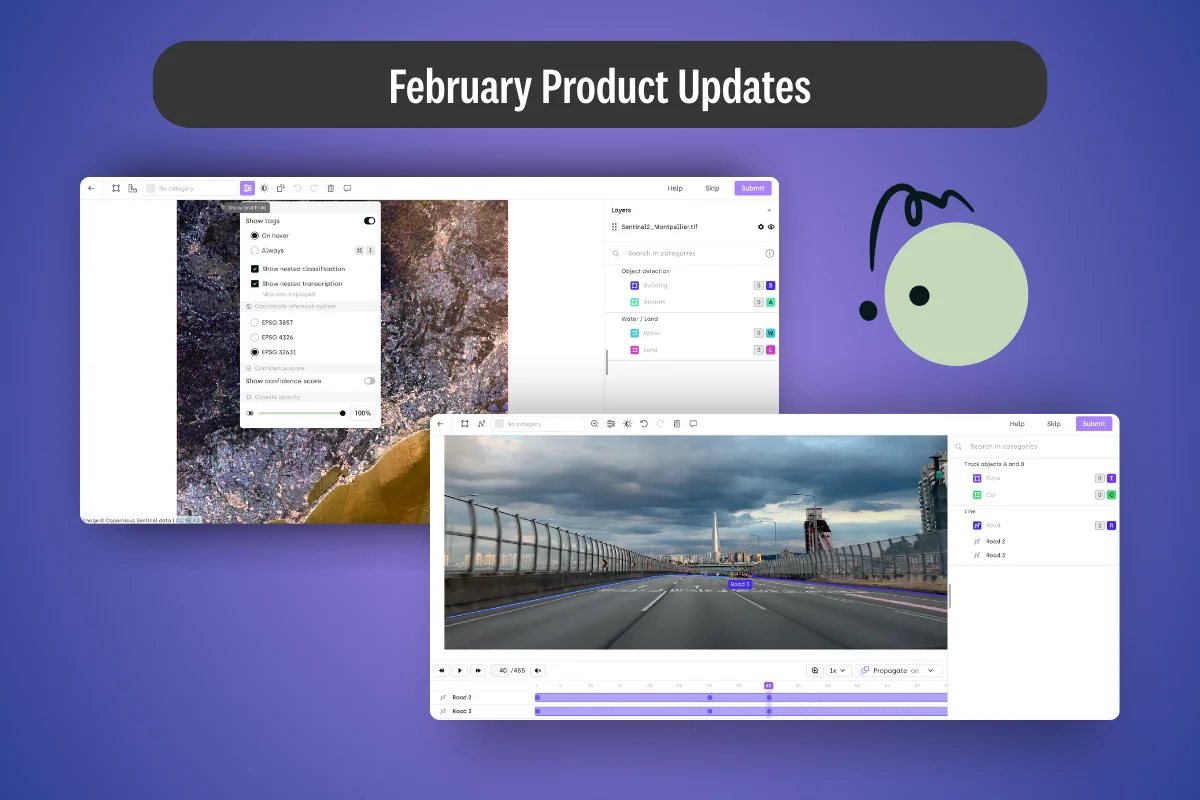

Modern annotation platforms like Kili Technology enable layer management, a set of advanced techniques that transform how annotators interact with complex geospatial data. This approach to multispectral annotation uses maps and reference layers to build essential spatial context. Instead of working from raw satellite imagery alone, annotators can stack multiple spectral bands and reference layers, which drastically improves the accuracy of feature identification.

The key to this method lies in the strategic use of opacity controls and layer toggling during the annotation process. For instance, an annotator drawing polygons and lines might first identify general features on a natural color composite, then toggle to near-infrared bands to clarify vegetation boundaries or land parcel edges, and finally overlay a basemap to verify infrastructure alignment. This layered approach is particularly valuable for land use classification, where the boundaries between different land cover types can be ambiguous in single-band imagery.

Basemaps and other map-based reference layers are crucial for effective annotation, providing context that raw satellite imagery often lacks. This advanced form of mapping helps annotators align features like road networks and validate classifications using administrative boundaries or topographic information. This additional context is especially valuable when working with imagery from unfamiliar regions where local knowledge might be limited.

Automated Pre-Annotation and Human-in-the-Loop Refinement

The intersection of automation and human expertise, powered by modern labeling tools and their automation features, represents a significant advancement in the annotation process. This human-in-the-loop approach is the key to scaling annotation workflows for complex geospatial analysis tasks. Rather than having annotators start from scratch, a model creates initial annotations, which makes the workflow more efficient and less error-prone. Teams can then label faster and focus their expertise on refinement, handling larger datasets with increased productivity.

Smart Initialization Strategies

Foundation models trained on vast datasets of satellite images can provide surprisingly accurate initial annotations for common features. By applying segmentation techniques, these models generate pre-annotations for features like buildings, roads, and water bodies, greatly improving the accuracy and efficiency of the overall process. These pre-annotations serve as a starting point, allowing human annotators to focus their expertise on refinement rather than creation from scratch.

The key to successful pre-annotation lies in understanding its limitations. While foundation models excel at identifying common features, they often struggle with region-specific architecture, seasonal variations, or specialized infrastructure. Smart initialization strategies use these models to handle the routine aspects of annotation while reserving human expertise for the nuanced decisions that require domain knowledge.

Transfer learning from similar geospatial domains offers another powerful initialization approach for effective pre-annotation. A model trained on urban infrastructure in one region can provide useful pre-annotations for similar features in a new geography, even if the specific architectural styles differ. The human annotator's role becomes one of adaptation rather than creation, significantly accelerating the annotation process while maintaining quality. This advanced approach requires robust platforms capable of supporting multiple annotation formats to ensure compatibility across all geospatial data types and workflows.

Confidence-Based Review Prioritization

Not all annotations require the same level of scrutiny. Effective data management is crucial for organizing and tracking annotation review processes, ensuring that large and diverse datasets are handled efficiently. By implementing confidence-based review systems, annotation teams can focus their quality assurance efforts where they’re needed most.

This strategic sampling approach ensures that difficult cases receive multiple reviews while straightforward annotations move quickly through the pipeline. For instance, clearly defined agricultural fields might require only basic review, while complex urban areas with overlapping infrastructure receive multiple expert assessments. This targeted approach maximizes the impact of your quality assurance resources.
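One way to picture confidence-based prioritization is a small routing function. The sketch below assumes each annotation carries a confidence score between 0 and 1 (for example from the pre-annotation model); the tier names and cut-off values are hypothetical and would be set per project.

```python
def review_tier(confidence, auto_accept=0.9, single_review=0.6):
    """Map a confidence score in [0, 1] to a review tier (illustrative cut-offs)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= auto_accept:
        return "spot_check"
    if confidence >= single_review:
        return "single_review"
    return "multi_expert_review"


def build_review_queue(annotations):
    """Sort annotations so the least confident come first and tag each with a tier."""
    ordered = sorted(annotations, key=lambda a: a["confidence"])
    return [{**a, "tier": review_tier(a["confidence"])} for a in ordered]


annotations = [
    {"id": "field-12", "confidence": 0.97},
    {"id": "urban-07", "confidence": 0.41},
    {"id": "road-03", "confidence": 0.72},
]
queue = build_review_queue(annotations)
for item in queue:
    print(item["id"], item["tier"])
```

Here the clearly defined field lands in the lightest tier, while the low-confidence urban scene goes to the front of the queue for multiple expert reviews.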

Advanced Geometric and Spatial Validation Techniques

Geospatial AI applications often require precise geometric accuracy that goes beyond simple object detection. Advanced validation techniques are therefore essential to ensure annotations meet the stringent requirements of real-world applications. These validation processes involve in-depth spatial analysis of the geographic component of the data, often within modern GIS platforms. By using location-based elements from satellite imagery and GPS data, annotators can move beyond visual checks and produce annotations accurate enough to support geospatial data processing and mapping for critical decision-making.

Mensuration for Precision Validation

Mensuration—the science of measurement—transforms annotation from a purely visual exercise into a quantitative discipline. These mensuration techniques involve incorporating precise measurements on the earth’s surface into the annotation process. This allows teams to validate features and the geometric accuracy of their labels, ensuring that all annotated real-world objects are dimensionally correct and consistent across the dataset.

In practice, mensuration involves using the geographic metadata embedded in geospatial imagery to calculate real-world distances, areas, and angles. An annotator labeling aircraft at an airfield, for example, can verify their annotations by measuring wingspan and fuselage length against known specifications. This quantitative validation catches errors that might slip through purely visual inspection.
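As a rough illustration of the aircraft check, the sketch below converts a pixel measurement to meters and compares it with a reference value. It assumes a north-up image with square pixels and a uniform ground sample distance (GSD); the function names, the 10% tolerance, and the 36 m reference wingspan are placeholders, not real specifications.

```python
import math


def ground_distance_m(p0, p1, gsd_m):
    """Distance in meters between two (col, row) pixel coordinates.

    Assumes square pixels and a constant GSD across the image.
    """
    return math.hypot(p1[0] - p0[0], p1[1] - p0[1]) * gsd_m


def within_tolerance(measured_m, expected_m, rel_tol=0.1):
    """True when the measurement is within rel_tol of the expected value."""
    return abs(measured_m - expected_m) <= rel_tol * expected_m


# Hypothetical wingtip coordinates picked by the annotator on 0.3 m imagery.
wingtip_a, wingtip_b = (100, 200), (172, 296)
wingspan_m = ground_distance_m(wingtip_a, wingtip_b, gsd_m=0.3)

# Placeholder reference value for the labeled aircraft class.
reference_wingspan_m = 36.0
label_is_plausible = within_tolerance(wingspan_m, reference_wingspan_m)
```

A label whose measured wingspan falls far outside the tolerance is a strong hint that either the class or the geometry is wrong, even if the annotation looks reasonable by eye.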

Scale consistency represents another critical application of mensuration. When working with multi-resolution datasets, mensuration tools help ensure that feature sizes remain consistent across different ground sample distances. A building that measures 50 meters in length should maintain that measurement whether it's annotated in 30 cm or 3 m resolution imagery.
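The 50-meter building makes a convenient worked example. At 0.3 m per pixel it spans about 167 pixels, and at 3 m per pixel about 17. The check below is a sketch under the same square-pixel assumption as before; the function names and the 5% tolerance are illustrative.

```python
def length_in_pixels(length_m, gsd_m):
    """Expected pixel length of a feature at a given ground sample distance."""
    return length_m / gsd_m


def consistent_across_scales(measurements, rel_tol=0.05):
    """Check that (pixel_length, gsd_m) pairs agree on the real-world length.

    Each measurement is converted to meters and compared with the mean.
    """
    lengths_m = [pixels * gsd for pixels, gsd in measurements]
    reference = sum(lengths_m) / len(lengths_m)
    return all(abs(length - reference) <= rel_tol * reference for length in lengths_m)


# The same building annotated on 0.3 m and 3 m imagery.
good = consistent_across_scales([(167, 0.3), (17, 3.0)])
# A second annotation at 3 m that is clearly too short (12 px = 36 m).
bad = consistent_across_scales([(167, 0.3), (12, 3.0)])
```

Note that a single pixel at 3 m resolution already represents 6% of a 50 m building, so the tolerance has to be chosen with the coarsest resolution in mind.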

Collaborative Annotation Workflows

High-quality geospatial annotation is rarely a solo endeavor. Collaborative annotation workflows support better decision-making in geospatial projects by bringing together multiple perspectives and expertise. The most successful projects leverage diverse knowledge through carefully designed collaborative workflows.

Expert Routing Based on Domain Knowledge

Different annotators bring different strengths to a project. Some excel at identifying military equipment, others specialize in agricultural features, and still others have deep knowledge of specific geographic regions. By routing annotation tasks based on these specializations, projects can leverage the full depth of their team's expertise.

Effective expert routing requires understanding both the dataset characteristics and annotator capabilities. A comprehensive annotation project might route military facilities to defense analysts, agricultural areas to agronomists, and urban infrastructure to civil engineers. This specialization not only improves annotation quality but also increases efficiency as annotators work within their areas of expertise.

Regional knowledge presents another crucial dimension for expert routing. Annotators familiar with local architectural styles, agricultural practices, or infrastructure patterns can identify features that might confuse those without regional context. This local expertise becomes particularly valuable when working with diverse global datasets where feature appearance varies significantly by geography.
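A routing rule that combines domain and regional expertise can be quite small. The sketch below is hypothetical throughout: the `Annotator` type, the task fields, and the preference for regional matches among domain experts are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Annotator:
    name: str
    domains: set
    regions: set = field(default_factory=set)


def route_task(task, annotators):
    """Pick a domain expert for the task, preferring one who knows the region.

    Returns None when nobody covers the domain, so the task can be escalated.
    """
    candidates = [a for a in annotators if task["domain"] in a.domains]
    if not candidates:
        return None
    # max() keeps the first candidate on ties, so list order acts as a tiebreak.
    return max(candidates, key=lambda a: task["region"] in a.regions)


team = [
    Annotator("Ana", {"agriculture"}, {"south_america"}),
    Annotator("Ben", {"agriculture", "urban"}, {"west_africa"}),
    Annotator("Chloe", {"defense"}, {"europe"}),
]
task = {"id": "tile-481", "domain": "agriculture", "region": "west_africa"}
assigned = route_task(task, team)
```

In this example both Ana and Ben can label agricultural features, but Ben is chosen because he also knows the region the tile comes from.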

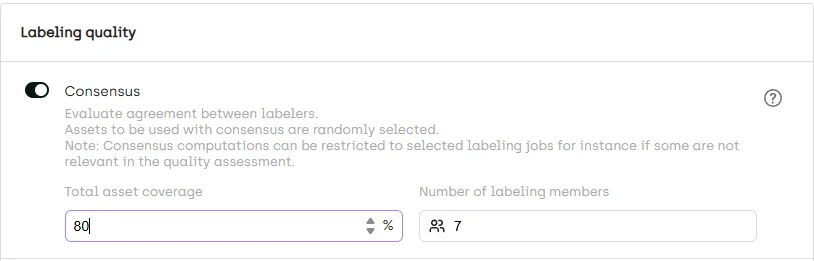

Consensus Mechanisms for Complex Features

Some features defy simple classification, requiring multiple perspectives to annotate accurately. Consensus mechanisms formalize the process of resolving these ambiguities through structured multi-annotator workflows.

The most effective consensus mechanisms go beyond simple majority voting. They incorporate annotator expertise, historical accuracy, and feature-specific requirements to weight different opinions appropriately. For instance, when annotating a complex industrial facility, the workflow might require agreement between annotators with expertise in different aspects of industrial infrastructure.
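To make the difference from majority voting concrete, here is a minimal weighted-vote sketch. The weights stand in for annotator expertise or historical accuracy, and the 60% agreement threshold is an arbitrary example value; none of this reflects a specific platform's consensus algorithm.

```python
from collections import defaultdict


def weighted_consensus(votes, min_share=0.6):
    """Combine (label, weight) votes into a consensus label.

    Returns (label, share). The label is None when the leading class holds
    less than min_share of the total weight, signalling escalation.
    """
    totals = defaultdict(float)
    for label, weight in votes:
        totals[label] += weight
    total_weight = sum(totals.values())
    if total_weight <= 0:
        raise ValueError("votes must carry positive total weight")
    best = max(totals, key=totals.get)
    share = totals[best] / total_weight
    return (best if share >= min_share else None), share


# Two experienced annotators agree, one less experienced annotator disagrees.
clear_label, clear_share = weighted_consensus(
    [("refinery", 0.9), ("refinery", 0.8), ("power_plant", 0.5)]
)

# Two votes for "warehouse", but the single dissenting vote carries more weight.
unclear_label, unclear_share = weighted_consensus(
    [("warehouse", 0.6), ("factory", 0.9), ("warehouse", 0.5)]
)
```

In the second case a plain majority vote would settle on "warehouse", whereas the weighted rule reports that agreement is too weak and leaves the feature for structured review.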

Structured review processes ensure that disagreements lead to improved understanding rather than confusion. When annotators disagree, the review process should capture not just the final decision but the reasoning behind different interpretations. This documentation becomes valuable training material for future annotators and helps refine annotation guidelines.

Real-Time Quality Metrics and Adaptive Workflows

In the video below, our partner Enabled Intelligence discusses their best practices for monitoring quality and actively adapting their quality workflow as performance evolves and project needs change. They recently won a data labeling contract with the NGA, in which Kili Technology's geospatial tool plays a significant part as a component of Enabled Intelligence's core platform.

Modern annotation platforms provide unprecedented visibility into annotation quality as work progresses. Automation enhances the quality and efficiency of annotation workflows by streamlining processes and reducing manual errors. By monitoring quality metrics in real-time, teams can adapt their workflows to maintain high standards while optimizing resource utilization.

Dynamic Quality Thresholds

Static quality assurance processes often waste resources on high-performing annotators while under-reviewing problematic areas. Dynamic quality thresholds adjust review requirements based on real-time performance metrics, ensuring that quality assurance efforts focus where they're needed most.

These adaptive systems track metrics like inter-annotator agreement, temporal consistency, and validation scores to identify when additional review is warranted. An annotator consistently achieving high agreement scores might see their work sampled less frequently, while challenging image sets automatically trigger additional review layers.
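A dynamic threshold can be as simple as a sampling rate that moves with recent agreement scores. The following is a sketch only: the linear rule, the target agreement of 0.9, and the rate bounds are assumptions chosen to keep the example readable.

```python
def review_sampling_rate(recent_agreement, base_rate=0.2, target=0.9,
                         slope=2.0, min_rate=0.05, max_rate=1.0):
    """Fraction of an annotator's work to send to review.

    recent_agreement is a list of inter-annotator agreement scores in [0, 1].
    The rate rises when agreement falls below target and shrinks when it is
    above, clamped to [min_rate, max_rate].
    """
    if not recent_agreement:
        return max_rate  # no history yet: review everything
    mean_agreement = sum(recent_agreement) / len(recent_agreement)
    rate = base_rate + slope * (target - mean_agreement)
    return min(max_rate, max(min_rate, rate))


steady = review_sampling_rate([0.95, 0.97, 0.96])      # about 0.08
struggling = review_sampling_rate([0.60, 0.70, 0.65])  # about 0.70
newcomer = review_sampling_rate([])                    # 1.0
```

The same function could be applied per image set instead of per annotator, so that difficult imagery triggers extra review regardless of who labeled it.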

Project-specific metric optimization allows teams to tailor their quality thresholds to application requirements. A change detection project might prioritize temporal consistency, while an object counting application focuses on detection recall. By aligning quality metrics with project goals, teams ensure that their annotation efforts directly support model performance.

Annotation Accuracy Tracking

Real-time accuracy tracking transforms quality assurance from a post-hoc activity into an integral part of the annotation process. By monitoring accuracy metrics as annotations are created, teams can identify and address issues before they propagate through the dataset.

Pattern identification represents one of the most valuable applications of accuracy tracking. By analyzing error patterns across annotators, image types, and feature classes, teams can identify systematic issues that individual review might miss. Perhaps certain annotators consistently struggle with specific feature types, or particular imaging conditions lead to increased error rates. These insights drive targeted training and process improvements.
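Pattern identification often starts with nothing more than grouped error rates. The sketch below assumes a review log where each record notes the annotator, the feature class, the imaging condition, and whether the reviewer accepted the label; the field names and records are invented for illustration.

```python
from collections import Counter


def error_rates(review_log, key):
    """Error rate per group, where key names the record field to group by."""
    totals, errors = Counter(), Counter()
    for record in review_log:
        group = record[key]
        totals[group] += 1
        if not record["correct"]:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


review_log = [
    {"annotator": "a1", "feature": "building", "condition": "clear", "correct": True},
    {"annotator": "a1", "feature": "road", "condition": "haze", "correct": False},
    {"annotator": "a2", "feature": "building", "condition": "haze", "correct": False},
    {"annotator": "a2", "feature": "road", "condition": "clear", "correct": True},
]

by_annotator = error_rates(review_log, "annotator")
by_condition = error_rates(review_log, "condition")
```

In this toy log both annotators have the same error rate, but grouping by imaging condition shows that every error occurred on hazy imagery, which points to a data problem rather than a training problem.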

Specialized Techniques for Challenging Scenarios

Even with advanced tools and workflows, some annotation scenarios present unique challenges that require specialized approaches.

Handling Ambiguous Boundaries

Natural features rarely present clear-cut boundaries. The transition from forest to grassland, the edge of a water body, or the extent of urban sprawl all present boundary ambiguities that challenge even experienced annotators. Advanced techniques help resolve these ambiguities consistently.

Multispectral data provides one of the most powerful tools for boundary clarification. Vegetation indices derived from near-infrared bands can reveal subtle transitions invisible in natural color imagery. Water boundaries become clearer when using spectral bands that emphasize the water-land interface. By providing annotators with these enhanced visualizations, projects can achieve more consistent boundary placement.
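The best-known vegetation index is NDVI, computed per pixel as (NIR - Red) / (NIR + Red). The sketch below applies it to a single image row and reports where the row crosses a vegetation threshold; the 0.3 threshold and the sample reflectance values are illustrative assumptions.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def ndvi_grid(nir_band, red_band):
    """Apply NDVI pixel by pixel to two bands of identical shape."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


def boundary_columns(ndvi_row, threshold=0.3):
    """Column indices where the row switches between vegetated and not."""
    flags = [value >= threshold for value in ndvi_row]
    return [i + 1 for i in range(len(flags) - 1) if flags[i] != flags[i + 1]]


# One row of reflectance values crossing from forest into bare ground.
nir_band = [[0.60, 0.60, 0.30, 0.20]]
red_band = [[0.10, 0.10, 0.25, 0.20]]
grid = ndvi_grid(nir_band, red_band)
edges = boundary_columns(grid[0])
```

Rendering such an index as an extra layer gives annotators a consistent cue for where to place a boundary that looks gradual in natural color.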

Mensuration tools offer another approach to boundary ambiguity, particularly for features with expected dimensions. Agricultural fields, parking lots, and other human-made features often follow predictable size patterns. By measuring annotated features against these expectations, annotators can validate their boundary decisions and maintain consistency across the dataset.
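A size-expectation check can be sketched with the shoelace formula for polygon area. As before, this assumes square pixels and a uniform GSD, and the expected area ranges per class are made-up placeholders that a project would replace with its own values.

```python
def polygon_area_m2(vertices_px, gsd_m):
    """Area of a simple polygon given (col, row) pixel vertices, in square meters."""
    n = len(vertices_px)
    twice_area = 0.0
    for i in range(n):
        x0, y0 = vertices_px[i]
        x1, y1 = vertices_px[(i + 1) % n]
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0 * gsd_m ** 2


# Hypothetical plausible area ranges per class, in square meters.
EXPECTED_AREA_M2 = {
    "parking_lot": (500.0, 20000.0),
    "agricultural_field": (2000.0, 2000000.0),
}


def area_is_plausible(label, vertices_px, gsd_m):
    low, high = EXPECTED_AREA_M2[label]
    return low <= polygon_area_m2(vertices_px, gsd_m) <= high


lot = [(0, 0), (100, 0), (100, 50), (0, 50)]
lot_area = polygon_area_m2(lot, gsd_m=0.5)
lot_ok = area_is_plausible("parking_lot", lot, gsd_m=0.5)
field_ok = area_is_plausible("agricultural_field", lot, gsd_m=0.5)
```

A polygon that fails the check is not necessarily wrong, but it is a good candidate for a second look at where the boundary was drawn.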

Multi-class labeling strategies acknowledge that some boundaries are inherently fuzzy. Instead of forcing binary decisions, these approaches allow annotators to indicate uncertainty through graduated labels or confidence scores. This additional information proves valuable during model training, allowing algorithms to learn from the inherent ambiguity in the data.
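One common way to carry this uncertainty into training is a soft label, where a pixel or region gets a probability per class instead of a single hard class. The sketch below shows the idea with hypothetical helper names; it is not tied to any particular training framework.

```python
import math


def soft_label(class_votes):
    """Normalize per-class votes or confidence weights into probabilities."""
    total = sum(class_votes.values())
    if total <= 0:
        raise ValueError("at least one positive vote is required")
    return {cls: weight / total for cls, weight in class_votes.items()}


def soft_cross_entropy(predicted, target, eps=1e-12):
    """Cross-entropy between a predicted distribution and a soft target."""
    return -sum(
        prob * math.log(max(predicted.get(cls, 0.0), eps))
        for cls, prob in target.items()
    )


# Three annotators call the transition zone forest, one calls it grassland.
target = soft_label({"forest": 3, "grassland": 1})

hedged = soft_cross_entropy({"forest": 0.75, "grassland": 0.25}, target)
overconfident = soft_cross_entropy({"forest": 0.99, "grassland": 0.01}, target)
```

With a soft target, a model that is overconfident about a fuzzy boundary is penalized more than one whose prediction reflects the annotators' disagreement.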

Dense Object Annotation Strategies

Crowded scenes present unique challenges for annotation quality. Whether labeling vehicles in a parking lot, containers in a port, or buildings in a dense urban area, overlapping objects and occlusions complicate the annotation process.

Systematic annotation approaches prevent the chaos that can emerge in dense scenes. Rather than randomly selecting objects, annotators follow predefined patterns—such as working in consistent directions or completing defined sections—that ensure complete coverage without duplication. This systematic approach proves particularly valuable when multiple annotators work on the same dense scene.
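A predefined traversal pattern is easy to generate. The sketch below splits a dense scene into a grid of tiles, visits them in a serpentine (back-and-forth) order, and hands out contiguous sections to annotators; the function names and the grid-based approach are assumptions for illustration.

```python
def serpentine_tiles(n_rows, n_cols):
    """(row, col) tile indices in a back-and-forth order, row by row."""
    order = []
    for row in range(n_rows):
        cols = range(n_cols) if row % 2 == 0 else range(n_cols - 1, -1, -1)
        order.extend((row, col) for col in cols)
    return order


def split_sections(tiles, n_annotators):
    """Divide the ordered tiles into contiguous sections, one per annotator."""
    size = -(-len(tiles) // n_annotators)  # ceiling division
    return [tiles[i:i + size] for i in range(0, len(tiles), size)]


tiles = serpentine_tiles(2, 3)
sections = split_sections(tiles, 2)
```

Because consecutive tiles are always neighbors, an annotator never has to jump across the scene, and contiguous sections make it clear who owns objects near a section border.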

Hierarchical annotation strategies help manage complexity in dense environments. Instead of attempting to annotate every object in a single pass, annotators might first identify major features, then progressively add detail. This approach not only improves accuracy but also allows for different levels of annotation detail depending on project requirements.

Implementation Best Practices

Successfully implementing advanced annotation techniques requires thoughtful configuration and continuous refinement.

Tool Configuration for Quality Optimization

Kili Technology provides extensive configuration options that support quality-focused workflows. Successful implementation begins with thoughtful interface setup that guides annotators toward quality outcomes.

Custom workflow design should reflect your specific quality requirements. Multi-step review processes, specialized interfaces for different feature types, and integrated validation tools all contribute to annotation quality. The key lies in balancing comprehensiveness with usability—overly complex workflows can actually decrease quality by overwhelming annotators.

Feature-specific configurations optimize the annotation experience for different object types. Dense point annotations might benefit from specialized clustering tools, while large area annotations require efficient polygon editing capabilities. By tailoring the interface to your specific annotation challenges, you remove friction that can lead to quality issues.

Team Training and Knowledge Management

Even the most sophisticated tools require skilled users to achieve high-quality results. Comprehensive training programs ensure that annotators understand not just the mechanics of annotation but the reasoning behind quality requirements.

Building comprehensive annotation guidelines creates a shared foundation for quality. These guidelines should go beyond simple feature definitions to include edge cases, regional variations, and quality standards. The most effective guidelines evolve through practice, incorporating lessons learned from real annotation challenges.

Continuous improvement processes ensure that annotation quality increases over time. Regular team reviews of challenging cases, systematic analysis of error patterns, and incorporation of annotator feedback all contribute to evolving best practices. This iterative approach transforms annotation from a static process into a learning system.

Performance-based annotator development recognizes that different team members progress at different rates. By tracking individual performance metrics and providing targeted training, teams can help each annotator reach their full potential. This personalized approach not only improves overall quality but also increases annotator satisfaction and retention.

Conclusion

Advanced annotation techniques transform geospatial data labeling from a routine task into a sophisticated discipline that directly impacts AI model performance. By leveraging multi-modal data fusion, intelligent automation, precise validation techniques, and collaborative workflows, teams can create training datasets that meet the demanding requirements of production geospatial AI systems.

The techniques explored in this article—from multispectral validation to mensuration-based quality control—provide practical tools that ML engineers can implement today. As geospatial AI applications continue to expand into critical domains like climate monitoring, urban planning, and defense, the importance of high-quality training data only grows.

The future of geospatial annotation lies not in choosing between human expertise and automation, but in thoughtfully combining both through advanced workflows and intelligent tools. By implementing these advanced techniques within platforms like Kili Technology, organizations can build the reliable, accurate datasets that next-generation geospatial AI demands.
