The landscape of Earth observation and geospatial analysis is undergoing a fundamental transformation. Machine learning engineers working with satellite imagery, aerial photography, and other geospatial data are increasingly turning to foundation models—a new paradigm that promises to revolutionize how we extract insights from our planet's vast and complex data streams.

As the volume of Earth observation data continues to grow exponentially, traditional approaches to geospatial analysis face mounting challenges. Foundation models offer a compelling solution, combining large-scale pre-training and transfer learning with accurately labeled geospatial data for fine-tuning to address the unique challenges of geospatial AI.

What are Geospatial Foundation Models?

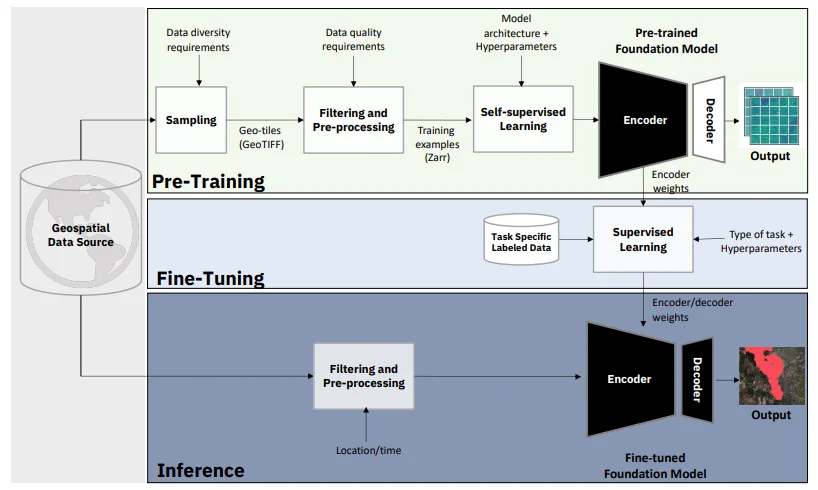

Source: A framework for building geospatial foundation models as proposed by IBM and NASA for their Prithvi-100M model.

Geospatial foundation models, or GFMs/GeoFMs, represent a paradigm shift in how we approach AI for Earth observation. Unlike traditional models designed for specific tasks, foundation models are large-scale neural networks pre-trained on diverse geospatial datasets to develop broad, transferable capabilities.

Core Characteristics

Geospatial foundation models exhibit several defining characteristics:

- Scale: These models are trained on massive datasets spanning multiple terabytes of Earth observation data, often incorporating imagery from various satellites, time periods, and geographic regions.

- Multi-modal Learning: They can process and understand different types of geospatial data simultaneously—optical imagery, synthetic aperture radar (SAR), multispectral bands, and even textual descriptions of locations.

- Self-supervised Pre-training: Rather than requiring labeled data for every pixel or region, these models learn meaningful representations through self-supervised techniques like masked image modeling or contrastive learning.

- Adaptability: Once pre-trained, they can be fine-tuned for various downstream tasks with minimal additional data, from land use classification to change detection.
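To make the masked image modeling idea from the list above concrete, here is a minimal sketch of the masking step only. It assumes an image already split into a grid of patches; the function names and the 75% mask ratio are illustrative choices, not taken from any specific geospatial model.

```python
import random

def random_patch_mask(grid_h, grid_w, mask_ratio=0.75, seed=0):
    """Return a grid_h x grid_w grid of booleans; True marks a masked (hidden) patch."""
    rng = random.Random(seed)
    n_patches = grid_h * grid_w
    n_masked = int(round(n_patches * mask_ratio))
    masked_ids = set(rng.sample(range(n_patches), n_masked))
    return [[(r * grid_w + c) in masked_ids for c in range(grid_w)]
            for r in range(grid_h)]

def visible_patches(patches, mask):
    """Keep only the patches the encoder is allowed to see."""
    return [p for row_p, row_m in zip(patches, mask)
            for p, m in zip(row_p, row_m) if not m]

# Toy 4x4 grid where each "patch" is just its (row, col) index.
patches = [[(r, c) for c in range(4)] for r in range(4)]
mask = random_patch_mask(4, 4, mask_ratio=0.75, seed=42)
visible = visible_patches(patches, mask)
print(len(visible))  # 4 of the 16 patches stay visible
```

During pre-training, a model would encode only the visible patches and be trained to reconstruct the hidden ones, which is why no labels are needed.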

Distinction from Traditional Models

Traditional Earth observation models typically follow a task-specific approach. An AI model built for crop classification wouldn't necessarily perform well at urban planning tasks without significant retraining. Each new application often requires:

- Extensive labeled training data

- Domain-specific feature engineering

- Complete model redesign and training from scratch

Foundation models fundamentally change this equation. By learning general-purpose representations of Earth's surface during pre-training, they can adapt to new tasks with minimal fine-tuning. This is analogous to how large language models have transformed natural language processing, but adapted for the unique challenges of Earth observation data.

Advantages of Foundation Models in Earth Observation and Geospatial AI

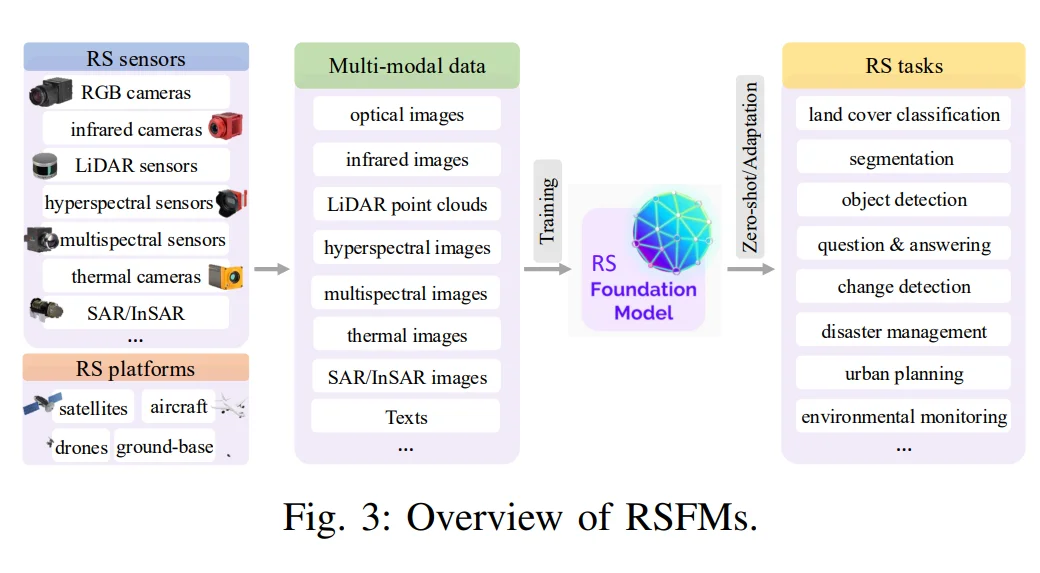

Source: An overview of remote sensing foundation models.

The adoption of AI foundation models in geospatial applications offers several compelling advantages:

Data Efficiency: Traditional supervised learning approaches require extensive labeled datasets for each specific task. Foundation models, pre-trained on vast amounts of unlabeled data, can achieve strong performance on new tasks with limited labeled examples. This is particularly valuable in Earth observation, where manual annotation of satellite imagery is expensive and time-consuming, often requiring domain expertise to interpret complex geographical features.

Generalization Across Geographies: Models trained on specific regions often fail when applied to new geographic areas with different landscapes, urban patterns, or climatic conditions. Foundation models, exposed to global diversity during pre-training, demonstrate superior cross-regional generalization capabilities.

Temporal Understanding: By training on time-series data, foundation models can learn to recognize seasonal patterns, long-term environmental changes, and temporal dynamics that task-specific models might miss.

Multi-scale Feature Learning: These models automatically learn hierarchical representations—from low-level textures to high-level semantic concepts—enabling them to work effectively across different spatial resolutions and scales.

Rapid Deployment: When new applications emerge, such as disaster response or environmental monitoring needs, foundation models can be quickly adapted without the months-long development cycles required for traditional approaches.

Key Challenges and Limitations

Despite their promise, foundation models in Earth observation face several significant challenges:

Computational Requirements: Training foundation models demands substantial computational resources. Processing petabytes of satellite imagery requires specialized hardware infrastructure that may be inaccessible to many organizations.

Data Heterogeneity: Earth observation data comes from numerous sources with varying specifications—different spatial resolutions, spectral bands, acquisition angles, and temporal frequencies. Harmonizing these diverse inputs remains technically challenging.

Domain Shift: While foundation models improve generalization, they can still struggle with significant domain shifts, such as adapting from temperate to arctic regions or from rural to dense urban environments.

Interpretability: The black-box nature of large neural networks poses challenges for applications requiring explainable decisions, such as environmental policy or legal compliance.

Evaluation Complexity: Unlike natural image datasets with clear object categories, Earth observation tasks often involve continuous variables, fuzzy boundaries, and context-dependent interpretations that complicate standardized evaluation.

Assessment and Evaluation Frameworks

Multi-task Benchmarks: Comprehensive evaluation suites test models across diverse tasks, such as land cover classification, change detection, object counting, and semantic segmentation, to assess generalization capabilities. GeoBench, introduced by Lacoste et al. (2023), provides a standardized benchmark comprising 12 tasks (six classification and six segmentation) covering various sensors and resolutions. This framework establishes explicit protocols for evaluating how well foundation models generalize across different Earth observation applications, using consistent metrics and evaluation procedures.

Geographic Diversity Testing: Models are evaluated on held-out geographic regions to measure their ability to generalize to unseen landscapes and environmental conditions. The SatlasPretrain dataset by Bastani et al. (2023) exemplifies this approach, containing globally distributed imagery across diverse biomes and urban environments. Researchers use such datasets to explicitly test for domain shift: they measure whether a model trained on North American data maintains performance when applied to African or Southeast Asian landscapes, which quantifies cross-regional generalization.
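A held-out-region evaluation can be sketched in a few lines. The example below is a toy illustration, assuming each sample carries a hypothetical `region` field and that `predict` is any callable returning a label; it is not tied to a particular dataset or benchmark.

```python
from collections import defaultdict

def split_by_region(samples, held_out_regions):
    """Put every sample from a held-out region in the test set, the rest in train."""
    held_out = set(held_out_regions)
    train, test = [], []
    for s in samples:
        (test if s["region"] in held_out else train).append(s)
    return train, test

def accuracy_by_region(samples, predict):
    """Report accuracy separately for each region so regional gaps are visible."""
    correct, total = defaultdict(int), defaultdict(int)
    for s in samples:
        total[s["region"]] += 1
        correct[s["region"]] += int(predict(s) == s["label"])
    return {r: correct[r] / total[r] for r in total}

# Toy samples; region names and labels are made up for illustration.
samples = [
    {"id": 1, "region": "north_america", "label": "crop"},
    {"id": 2, "region": "north_america", "label": "urban"},
    {"id": 3, "region": "africa", "label": "crop"},
    {"id": 4, "region": "southeast_asia", "label": "forest"},
    {"id": 5, "region": "africa", "label": "urban"},
]
train, test = split_by_region(samples, ["africa", "southeast_asia"])

def predict(sample):
    # Stand-in for a trained model: always answers "crop".
    return "crop"

acc = accuracy_by_region(test, predict)
print(acc)  # {'africa': 0.5, 'southeast_asia': 0.0}
```

Splitting by region rather than at random matters because neighbouring tiles are highly correlated; a random split can leak near-duplicates of test scenes into training.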

Temporal Consistency: Evaluation frameworks assess whether models maintain consistent predictions across time series and can accurately detect genuine changes versus seasonal variations. The Seasonal Contrast (SeCo) pre-training strategy by Mañas et al. (2021) addresses this challenge directly, introducing methods to help models learn representations invariant to seasonal shifts while remaining sensitive to meaningful long-term changes. This ensures models can distinguish between recurring patterns (like vegetation greening in summer) and actual environmental changes (such as deforestation or urban expansion).

Downstream Task Performance: The ultimate test lies in how quickly and effectively models can be adapted to new, specific applications with limited fine-tuning data. The EuroSAT dataset by Helber et al. (2019) has become a standard benchmark for measuring few-shot learning capabilities. Researchers evaluate foundation models by fine-tuning them on just 1% or 5% of EuroSAT's labeled data, providing quantifiable measures of data efficiency and adaptability—critical metrics for determining real-world applicability where labeled data is scarce.
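The 1% and 5% label budgets mentioned above are usually drawn per class so that rare classes are not lost. A minimal sketch of such a stratified draw follows; the class counts are toy numbers, and `stratified_subset` is a hypothetical helper rather than part of any benchmark toolkit.

```python
import random
from collections import defaultdict

def stratified_subset(labels, fraction, seed=0):
    """Pick roughly `fraction` of the indices from each class (at least one per class)."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    chosen = []
    for label in sorted(by_class):
        idxs = by_class[label]
        k = max(1, round(len(idxs) * fraction))
        chosen.extend(rng.sample(idxs, k))
    return sorted(chosen)

# Toy label list with three land-cover classes of different sizes.
labels = ["forest"] * 300 + ["river"] * 200 + ["highway"] * 100
subset_1pct = stratified_subset(labels, 0.01)
subset_5pct = stratified_subset(labels, 0.05)
print(len(subset_1pct), len(subset_5pct))  # 6 30
```

Fixing the seed keeps the subset identical across models, so differences in fine-tuned accuracy reflect the models and not the luck of the draw.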

Robustness Metrics: Tests for performance under various conditions—cloud cover, seasonal variations, different sensor types—ensure models are reliable for operational use. The SEN12MS dataset by Schmitt et al. (2019) specifically addresses sensor fusion and robustness challenges by providing paired Sentinel-1 (SAR) and Sentinel-2 (optical) imagery. Researchers use this to evaluate whether models can maintain performance when optical data is obscured by clouds, forcing reliance on SAR data alone—directly testing robustness to sensor failure and adverse atmospheric conditions.

Multi-sensor Fusion and Geospatial Reasoning

Why Multi-sensor Integration Matters

Earth observation rarely relies on a single data source. Optical imagery provides rich visual information but is hindered by clouds. SAR penetrates clouds but lacks the intuitive visual interpretation of optical data. Multispectral and hyperspectral sensors reveal information invisible to the human eye.

Foundation models excel at multi-sensor fusion because they can learn complementary representations from different sensor types during pre-training. This enables them to:

- Maintain performance when certain sensors are unavailable

- Combine strengths of different modalities for more robust predictions

- Learn sensor-invariant features that transfer across imaging platforms
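The first point in the list, staying useful when a sensor drops out, can be illustrated with a deliberately simple late-fusion sketch. Note that this averages per-sensor class probabilities at prediction time, which is a much simpler technique than the learned fusion inside a foundation model; the sensor names and probabilities are made up.

```python
def fuse_predictions(per_sensor_probs, weights=None):
    """Weighted average of class probabilities over sensors that delivered data.

    A sensor whose value is None (for example, optical imagery lost to cloud)
    is skipped, and the remaining weights are renormalized.
    """
    available = {name: p for name, p in per_sensor_probs.items() if p is not None}
    if not available:
        raise ValueError("no sensor data available")
    weights = weights or {}
    total_w = sum(weights.get(name, 1.0) for name in available)
    n_classes = len(next(iter(available.values())))
    fused = [0.0] * n_classes
    for name, probs in available.items():
        w = weights.get(name, 1.0) / total_w
        for i, p in enumerate(probs):
            fused[i] += w * p
    return fused

# Clear sky: both sensors contribute equally.
clear = fuse_predictions({"optical": [0.2, 0.8], "sar": [0.4, 0.6]})
# Cloudy scene: optical is missing, so the SAR prediction is used as-is.
cloudy = fuse_predictions({"optical": None, "sar": [0.4, 0.6]})
print(clear, cloudy)
```

Running the same evaluation set once with all sensors and once with the optical input set to `None` gives a direct measure of how much performance depends on cloud-free imagery.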

Geospatial Reasoning Capabilities

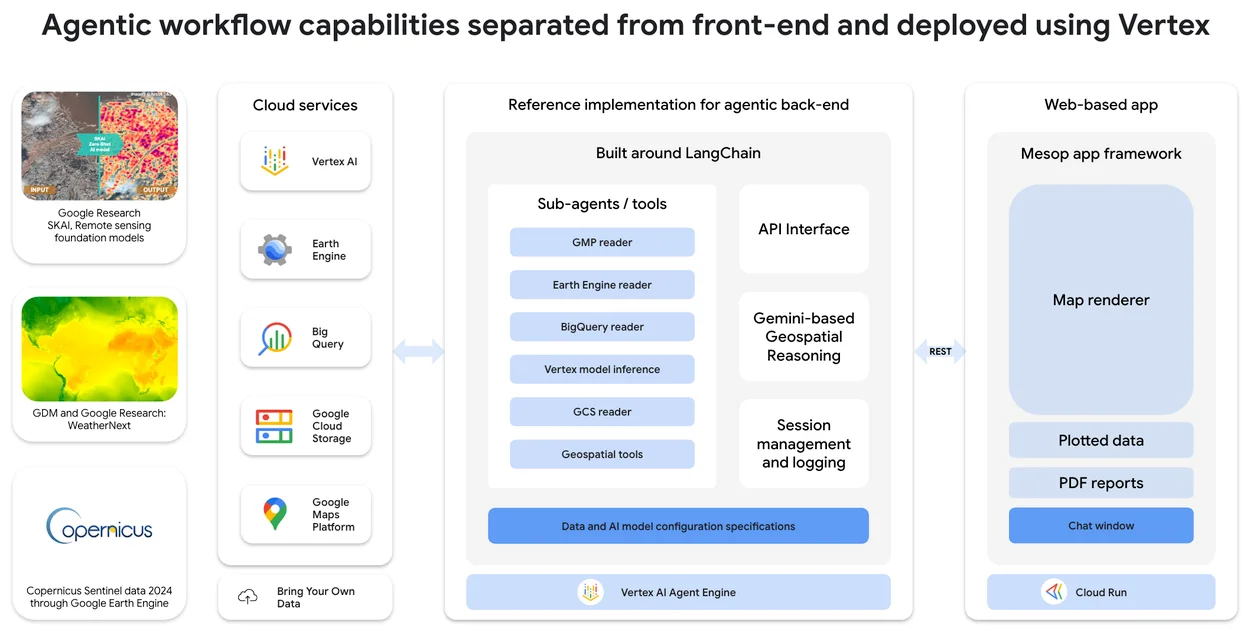

Source: Google's overview of the architecture for geospatial reasoning.

Beyond pixel-level analysis, foundation models contribute to higher-level geospatial reasoning:

Spatial Context Understanding: These models learn implicit spatial relationships—understanding that ports appear near coastlines, agricultural fields often form geometric patterns, and urban development follows transportation networks.

Cross-scale Reasoning: They can connect local observations to regional patterns, understanding how individual buildings relate to neighborhood structures and broader urban development.

Temporal Reasoning: By processing time series during training, foundation models develop capabilities to distinguish between normal seasonal variations and anomalous changes, crucial for applications like deforestation monitoring or urban expansion tracking.
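The distinction between seasonal variation and anomalous change can be shown with a classical baseline that involves no learning at all: compare each observation with earlier observations from the same point in the seasonal cycle. The sketch below assumes a monthly vegetation-index series; the thresholds and the toy values are illustrative assumptions.

```python
from statistics import mean, pstdev

def seasonal_anomalies(series, period=12, z_threshold=3.0, min_std=0.02):
    """Flag values that deviate strongly from earlier values at the same seasonal phase."""
    flags = []
    for i, value in enumerate(series):
        # Same month in earlier years only, so no future information is used.
        same_phase = [series[j] for j in range(i % period, i, period)]
        if len(same_phase) < 2:
            flags.append(False)  # not enough history to judge
            continue
        mu = mean(same_phase)
        sigma = max(pstdev(same_phase), min_std)  # floor avoids division by ~0
        flags.append(abs(value - mu) / sigma > z_threshold)
    return flags

# Three normal years, then a fourth year where vegetation collapses mid-year.
one_year = [0.3, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.8, 0.7, 0.5, 0.4, 0.3]
series = one_year * 3 + one_year[:6] + [0.2] * 6
flags = seasonal_anomalies(series)
print([i for i, f in enumerate(flags) if f])  # [42, 43, 44, 45, 46, 47]
```

The yearly swing from 0.3 to 0.8 is never flagged because it matches the seasonal history, while the drop in the final six months is. Foundation models aim to learn this kind of behaviour implicitly from time series instead of relying on a hand-set threshold.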

Comparison to Traditional GIS Methods

Traditional Geographic Information Systems (GIS) rely on explicit rules, manual feature extraction, and predefined spatial operations. While powerful for many applications, they require significant human expertise and struggle with unstructured data like raw satellite imagery.

Foundation models complement rather than replace GIS by:

- Automatically extracting features from raw imagery that can be imported into GIS workflows

- Handling ambiguous or complex patterns that rule-based systems struggle with

- Scaling to massive datasets without proportional increases in human effort

- Learning patterns that human operators might not explicitly recognize

Real World Applications

Foundation models are already demonstrating value across diverse Earth observation applications:

Climate Change Monitoring: Models trained on historical satellite data can detect subtle changes in ice coverage, vegetation patterns, and coastal erosion, providing crucial data for climate research and for tracking the planet's vital signs.

Agricultural Intelligence: By understanding crop patterns, growth stages, and field boundaries, these models enable precision agriculture applications, yield prediction, and early detection of crop stress or disease.

Urban Planning: Foundation models can automatically map informal settlements, track urban expansion, and assess infrastructure development, supporting data-driven city planning and sustainable urban development.

Disaster Response: Rapid damage assessment after natural disasters becomes possible by fine-tuning pre-trained models on post-disaster imagery, significantly accelerating emergency response efforts.

Environmental Conservation: Automated detection of deforestation, illegal mining, or poaching activities helps conservation efforts scale to protect vast wilderness areas.

Infrastructure Monitoring: Foundation models support regular assessment of roads, bridges, pipelines, and power lines to identify maintenance needs and detect damage.

Ethical Considerations

The development and deployment of foundation models in Earth observation raise important ethical considerations:

Privacy Concerns: High-resolution models capable of identifying individual structures or activities must balance utility with privacy protection, particularly in residential areas.

Dual-use Potential: Technologies developed for environmental monitoring could potentially be misused for surveillance or military applications, requiring careful governance and ethical frameworks.

Data Sovereignty: Training data often spans multiple countries, raising questions about data ownership, usage rights, and benefit sharing.

Environmental Impact: The computational resources required for training large models have a significant carbon footprint, creating a paradox for models intended to support environmental applications. Organizations are increasingly focusing on efficient training methods and green computing infrastructure.

Accessibility and Equity: The benefits of these technologies should reach developing nations and smaller organizations, not just well-resourced institutions.

Significant Foundation Models in Earth Observation

Several noteworthy foundation models have emerged in the geospatial domain:

Prithvi: A collaborative effort by NASA and IBM, Prithvi is trained on Harmonized Landsat-Sentinel data and designed for various Earth observation tasks.

SatMAE: Leveraging masked autoencoder techniques, this model learns robust representations from unlabeled satellite imagery.

Clay Foundation Model: An open-source foundation model for Earth observation developed by Clay, designed for flexibility across different data sources and resolutions.

Google's Geospatial Models: Google has introduced a suite of models and systems aimed at interpreting planetary-scale data. This includes SKAI, which is used for post-disaster building damage assessment from aerial and satellite imagery, and WeatherNext, a family of AI models for weather forecasting. These models are often integrated with a Gemini-powered reasoning engine to analyze vast geospatial datasets from sources like Google Earth Engine.

Commercial Offerings: Companies like Planet, Maxar, and others are developing proprietary foundation models optimized for their satellite constellations and customer applications.

Future Outlook

The future of geospatial foundation models holds immense promise. We anticipate:

Increased Model Scales: As computational resources grow and architectures improve, models will process even larger spatial and temporal contexts. The recently published SkySense++ model pre‑trained on 27 million multi‑modal images outperforms its predecessor across 12 Earth‑observation tasks, demonstrating the benefits of scale. Complementary benchmarks such as SustainFM now evaluate GeoFMs against 17 Sustainable Development Goal tasks, while the robustness benchmark REOBench shows that larger vision‑language GeoFMs lose up to 20 % accuracy under corruption—evidence that sheer size is not a panacea. A 2024 survey of vision foundation models in remote sensing summarises more than 70 public models exceeding 100 M parameters, charting the “scaling law” trajectory.

Better Multi-modal Integration: Future models will seamlessly combine satellite imagery with ground-based sensors, IoT devices, and crowd-sourced data. SkySense++’s factorised encoder handles optical, SAR and hyperspectral streams in one backbone, requiring 10× fewer labels at fine‑tune. Google Research’s 2025 Geospatial Reasoning programme combines MAE‑style vision encoders with language models to produce zero‑shot land‑cover maps. For regional studies, a residual cross‑modality attention network improved Arctic permafrost‑landform detection by +5 AP@50.

Real-time Capabilities: Advances in model efficiency will enable near real-time analysis of streaming satellite data for applications like disaster response. DeepMind’s AlphaEarth produces sub‑hourly global updates for hazards such as floods and landslides. The GUARDIAN GNSS network delivers Total Electron Content maps within 5 minutes, already used in volcanic‑ash advisories. A regional Prithvi‑SAR flood model processes each Sentinel‑1 scene in 2–3 minutes and raises F1 scores by 12 %. Historic events such as Hurricane Dorian were mapped within hours by the RAPID SAR pipeline, foreshadowing FM‑powered change‑detection workflows.

Specialized Architectures: We'll likely see architectures specifically designed for geospatial data's unique characteristics—spatial autocorrelation, multi-scale structures, and temporal dynamics.

Researchers are moving beyond vanilla ViTs to designs that bake in geospatial priors:

- SatMamba pairs masked auto-encoders with state-space models, achieving linear time/space scaling for long multi-band sequences.

- GeoAggregator uses Gaussian-biased local attention to respect spatial autocorrelation in tabular sensor grids.

- Multi-scale transformers like MCAT for change detection and SIG-ShapeFormer for cloud classification tie hierarchical spatial windows to temporal tokens.

- A Perception Field Mask limits attention to physically proximal patches, improving neighbourhood reasoning in hyperspectral imagery (LESS-ViT).
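The idea of restricting attention to nearby patches, which several of the designs above share, can be sketched generically. The code below builds a boolean mask over a patch grid using Chebyshev distance; it is an illustration of local attention masking in general and is not the implementation used by LESS-ViT, GeoAggregator, or any other model named here.

```python
def local_attention_mask(grid_h, grid_w, radius=1):
    """mask[q][k] is True when patch k lies within `radius` of patch q.

    Distance is Chebyshev (max of row and column offsets), so radius=1
    means the 3x3 neighbourhood around each query patch.
    """
    coords = [(r, c) for r in range(grid_h) for c in range(grid_w)]
    return [
        [max(abs(qr - kr), abs(qc - kc)) <= radius for (kr, kc) in coords]
        for (qr, qc) in coords
    ]

mask = local_attention_mask(3, 3, radius=1)
print(sum(mask[0]))  # 4: a corner patch sees itself and 3 neighbours
print(sum(mask[4]))  # 9: the centre patch sees the whole 3x3 grid
```

In a transformer, positions where the mask is False would typically have their attention scores set to negative infinity before the softmax, so each patch only aggregates information from its spatial neighbourhood.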

Democratization: As the technology matures, we expect more accessible tools and platforms that allow smaller organizations to leverage foundation models for their specific needs. IBM and NASA have released the Prithvi model family on Hugging Face under a permissive licence, cutting labelled-data needs for burn-scar mapping by 15 %. On the commercial side, Amazon's SageMaker GeoFM jump-start offers one-click inference endpoints for NGOs and start-ups. Institutional backing is evident in the ESA–NASA 2025 workshop, which focuses on open datasets, evaluation suites and safe model sharing.

The transformation brought by foundation models to Earth observation is just beginning. For machine learning engineers working in this space, understanding and leveraging these models will be crucial for building the next generation of geospatial AI applications. As we continue to face global challenges from climate change to sustainable development, these technologies offer powerful tools for monitoring, understanding, and protecting our planet.

Resources

For machine learning engineers looking to dive deeper into geospatial foundation models, consider exploring:

Open-Source Models and Code

- Prithvi Foundation Model - NASA-IBM's geospatial foundation model

- SatMAE Repository - Satellite masked autoencoder implementation

- Clay Foundation Model - Open-source Earth observation model

- TorchGeo - PyTorch library for geospatial data

Datasets and Benchmarks

- BigEarthNet - Large-scale Sentinel-2 benchmark dataset

- SEN12MS - Paired Sentinel-1 SAR and Sentinel-2 multispectral dataset

- SpaceNet Challenges - Building detection and road network datasets

- GeoBench - Multi-task benchmark for geospatial ML

- EuroSAT - Land use and land cover classification dataset

Research Papers and Publications

- Foundation Models for Generalist Geospatial AI - Comprehensive paper introducing Prithvi

- Foundation Models for Remote Sensing and Earth Observation: A Survey - Systematic review of the field

- SatMAE: Pre-training Transformers for Temporal and Multi-Spectral Satellite Imagery - Key paper on masked autoencoders for satellite data

- Feature Guided Masked Autoencoder for Self-supervised Learning in Remote Sensing - Advances in self-supervised techniques

Cloud Platforms and Tools

- Google Earth Engine - Planetary-scale geospatial analysis

- Microsoft Planetary Computer - Cloud computing platform for environmental data

- AWS Ground Station - Managed ground station service for satellite data downlink and processing

- Sentinel Hub - Cloud API for satellite imagery

The intersection of foundation models and Earth observation represents one of the most exciting frontiers in applied AI, offering opportunities to tackle some of humanity's most pressing challenges while pushing the boundaries of what's technically possible.

FAQs about Geospatial AI

What are Foundation Models (FMs) and how do they differ from traditional AI models in Earth Observation (EO)?

Foundation models are large-scale neural networks pre-trained on massive geospatial datasets to develop broad, transferable capabilities. Unlike traditional models built for specific tasks, foundation models can adapt to new applications with minimal fine-tuning, eliminating the need for extensive labeled data and complete model redesign for each new use case.

What are the main advantages of using Foundation Models in Earth Observation and Geospatial AI?

Key advantages include data efficiency (strong performance with limited labeled examples), superior cross-regional generalization, rapid deployment for new applications, automatic multi-scale feature learning, and temporal understanding of seasonal patterns and environmental changes.

What are the key challenges and limitations of Foundation Models in Earth Observation?

Main challenges include substantial computational requirements, data heterogeneity from diverse sensor sources, domain shift issues when adapting to new environments, interpretability concerns for explainable applications, and evaluation complexity due to Earth observation's continuous variables and context-dependent interpretations.

How are Foundation Models for Earth Observation assessed and evaluated?

Evaluation uses multi-task benchmarks like GeoBench, geographic diversity testing on held-out regions, temporal consistency assessment, downstream task performance measurement, and robustness metrics testing performance under various conditions like cloud cover and seasonal variations.

Why is multi-sensor integration important for Geospatial Foundation Models?

Different sensors have complementary strengths—optical imagery provides visual detail but is affected by clouds, while SAR penetrates clouds but lacks visual clarity. Foundation models excel at multi-sensor fusion by learning complementary representations during pre-training, enabling robust predictions even when certain sensors are unavailable.

How do Foundation Models contribute to "Geospatial Reasoning"?

Foundation models enable spatial context understanding (recognizing that ports appear near coastlines), cross-scale reasoning (connecting individual buildings to neighborhood patterns), and temporal reasoning (distinguishing seasonal variations from genuine changes) for applications like deforestation monitoring.

How do Foundation Models compare to traditional mapping methods like GIS?

Foundation models complement GIS by automatically extracting features from raw imagery, handling ambiguous patterns that rule-based systems struggle with, and scaling to massive datasets without proportional human effort increases. They work alongside rather than replace traditional GIS workflows.

What are some real-world applications of Foundation Models in Earth Observation and Geospatial AI?

Applications include climate change monitoring, precision agriculture, urban planning, disaster response damage assessment, environmental conservation (detecting deforestation and illegal activities), and infrastructure monitoring for maintenance needs.

What are the ethical considerations surrounding the development and deployment of Foundation Models in EO?

Key considerations include privacy protection in high-resolution imagery, dual-use potential for surveillance, data sovereignty across countries, environmental impact from computational requirements, and ensuring accessibility for developing nations and smaller organizations.

What are the most significant Foundation Models for Earth Observation and Geospatial AI?

Notable models include Prithvi (NASA-IBM collaboration), SatMAE (masked autoencoder approach), Clay Foundation Model (open-source), and various commercial offerings from companies like Planet and Maxar, each contributing unique approaches to Earth observation challenges.

Accelerating Geospatial AI Development

Building robust foundation models requires high-quality training data at scale—a challenge we understand deeply at Kili Technology. We work with geospatial AI companies to streamline the creation of annotated datasets that power these advanced models, from satellite imagery classification to multi-modal Earth observation tasks.

Our platform handles the unique complexities of geospatial data annotation: multispectral imagery processing, large file management up to 5GB, coordinate-based navigation, and enterprise-grade quality control workflows. Whether you're developing foundation models for agricultural monitoring, urban planning, or environmental assessment, we provide the tools and services to accelerate your data preparation pipeline.

If you're working on geospatial AI applications and interested in discussing how our annotation platform can support your foundation model development, contact our sales team to explore how we can accelerate your use cases.
