In an era where technology is reshaping our lives, the fusion of Natural Language Processing (NLP) and Deep Learning is at the forefront of innovation. This powerful combination is bridging the gap between human communication and machine understanding, creating a world where our devices comprehend our language and respond in kind.

This article explores the fascinating world of NLP and deep learning. We'll cover the history, the underlying technology, the wide-ranging applications, and the prospects of this dynamic duo. We'll also take a closer look at large language models and how they are shaping industries and businesses across the globe.

Whether you're a tech enthusiast, a business leader looking to leverage these technologies, or simply curious about how your smartphone understands your voice, this guide will provide insights and understanding into a world where machines are fluent in our language.

A Brief Introduction to Natural Language Processing and Deep Learning

Natural Language Processing (NLP) is the science of teaching machines to interpret human language. It's the technology behind chatbots that assist customers, algorithms that analyze social media sentiment, and much more.

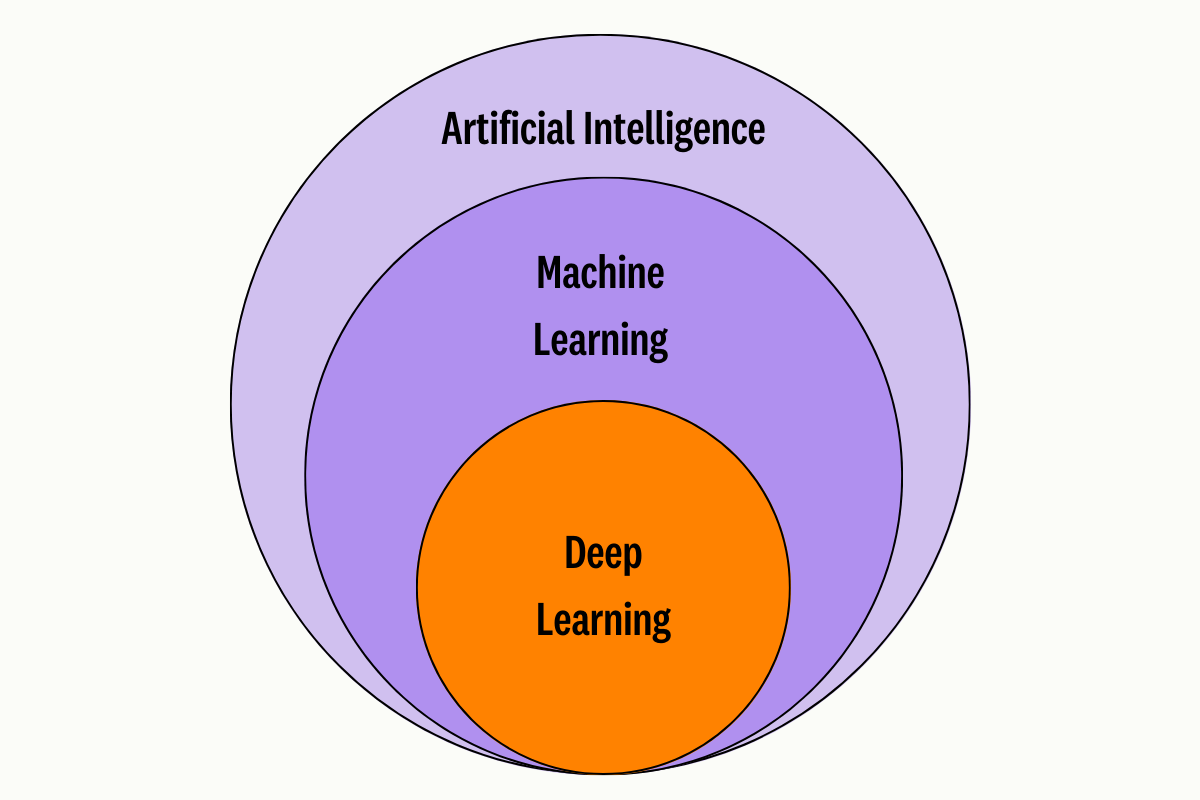

Deep Learning, a subset of machine learning, amplifies this by employing neural networks loosely inspired by the human brain. When applied to NLP, deep learning enables machines to generate human-like responses, create new content, and even predict our needs.

NLP is not just a technological marvel; it's a daily utility. From asking Alexa for the latest news to using Grammarly for error-free writing, NLP is embedded in our daily routines.

In the business world, NLP's impact is profound:

- In the field of healthcare, medical transcription services leverage the power of Natural Language Processing (NLP) to convert voice-recorded reports into accurate and structured text efficiently. Additionally, cutting-edge predictive algorithms analyze patient data, enabling healthcare professionals to create personalized treatment plans that cater to individual needs and conditions.

- In the realm of finance, banks utilize NLP to strengthen their fraud detection capabilities. By meticulously analyzing customer behavior and transactions, these sophisticated algorithms can swiftly identify and flag suspicious activities, safeguarding the financial well-being of their clients.

- Within the retail industry, e-commerce platforms have significantly benefited from implementing NLP-powered chatbots. These intelligent virtual assistants actively engage with customers, providing real-time support and assistance. By enhancing the overall user experience, these chatbots help boost customer satisfaction and ultimately contribute to increased sales and customer loyalty.

- In the manufacturing sector, NLP-driven voice commands are vital in optimizing factory processes. By utilizing voice-based control systems, machinery in factories can be efficiently managed, significantly improving efficiency and safety standards. This technological advancement allows for streamlined operations, reducing production time and enabling workers to focus on other critical tasks.

The integration of NLP across various industries showcases this technology's versatility and transformative potential, revolutionizing how businesses operate and enhancing overall productivity and effectiveness.

Integrating deep learning with NLP has further broadened these applications, enabling real-time language translation, advanced sentiment analysis, and personalized marketing strategies.

What is Natural Language Processing?

Natural language processing (NLP) is an area of computer science and artificial intelligence concerned with the interactions between computers and human (natural) languages. Its goal is to make machines able to understand, interpret, and generate human language to communicate with humans. NLP allows machines to process large amounts of natural language data and extract relevant information from it to be used for various tasks such as sentiment analysis, text summarization, machine translation, question answering, and more.

Definition and Importance

In general terms, NLP is a sub-discipline of AI that focuses on the ability of computers to analyze, understand, and generate natural language. It helps machines and humans interact in a way both can understand, by interpreting the words we speak or write. In simpler terms, NLP enables machines to read our queries and respond in a meaningful way. The importance of NLP lies in its potential applications, such as virtual assistants that handle customer service inquiries or systems that translate from one language to another in real time.

Real-world Applications

NLP has seen several successful applications across many industries, from customer service automation to healthcare. For example, virtual assistants are powered by NLP techniques like intent recognition, where the system identifies the user’s query intent based on natural language input. Other examples include automatic summarization tools, which can summarize an article or email into a few concise sentences; automated grammar checkers, which flag and correct grammatical and spelling mistakes; machine translation systems, which translate text from one language into another; and voice search systems, which allow users to search using voice commands instead of typing their query. NLP technology can also be used for automated sentiment analysis, where the system detects emotions conveyed through words or phrases. This has potential applications in marketing research and in understanding how customers make decisions.

Components of NLP

NLP draws on several components of language analysis, which together support tasks ranging from speech recognition (recognizing spoken words) to text understanding:

Syntax: This component of natural language processing (NLP) deals with the arrangement of words in a sentence, ensuring grammatical correctness and structural integrity. Parsing techniques and rule-based algorithms are commonly employed to analyze and generate well-formed sentences.

Semantics: The field of semantics in NLP focuses on the underlying meaning of words and sentences. It goes beyond the surface-level understanding and delves into concepts, objects, and the relationships between them. NLP algorithms can infer the context and extract valuable insights by deciphering the intended message.

Pragmatics: An essential component of language understanding, pragmatics considers the larger context in which communication occurs. It involves understanding the speaker's intention, the listener's interpretation, and the social norms that shape the interaction. Pragmatics is crucial in capturing nuances, sarcasm, and implied meaning beyond the literal.

Morphology: Morphology examines the structure and formation of words in a language. It analyzes root words, prefixes, and suffixes to understand how they contribute to the overall meaning of a word. By dissecting and understanding these linguistic units, NLP systems can effectively handle word variations and capture their intended essence.

Phonetics: In the realm of speech processing, phonetics is concerned with the study of sounds produced and perceived in language. It explores the physical properties of speech sounds, their acoustic characteristics, and the articulatory processes involved in their production. With a deeper understanding of phonetics, NLP algorithms can accurately transcribe speech and convert it into written text.

Discourse: This fundamental aspect of NLP involves unraveling how sentences connect to deliver cohesion and coherence in a text. It considers the overall structure, flow, and organization of language elements within a larger textual context. Discourse analysis enables NLP systems to build meaningful representations of written or spoken text by analyzing relationships between sentences.

Statistical and Machine Learning Models: Modern NLP relies heavily on statistical methods and machine learning models, including advanced techniques such as deep learning. These models process large volumes of textual data, learning patterns and relationships in order to understand and generate human-like language. By leveraging these powerful algorithms, NLP systems can achieve remarkable levels of language understanding and generation.

These components work together to enable different types of natural language processing tasks, such as speech synthesis (generating audible speech) and sentiment analysis (understanding user feedback).

The history of NLP

The story of Natural Language Processing (NLP) is captivating, filled with innovation, challenges, and evolution. From its humble beginnings in the 1950s to the advanced technology we have today, let's delve into the history of NLP.

Early Methods and Challenges

In the early days, Natural Language Processing (NLP) was primarily rule-based. Linguists and computer scientists manually crafted sets of rules to interpret language. These rule-based systems, although commendable, grappled with the nuances and complexities of human language, leading to limitations and challenges that called for further advancements in NLP technology.

- Challenges with Ambiguity: Human language, with its nuances and multiple interpretations, presented significant hurdles for early Natural Language Processing (NLP) systems. The lack of contextual understanding often led to misinterpretations and inaccurate responses.

- Limited Scalability: In the early days, crafting rules for every conceivable sentence structure proved impractical for NLP systems. As a result, their scope and applicability were inherently limited, leaving many linguistic patterns and variations unaccounted for.

- Lack of Adaptability: These systems lacked the ability to learn and adapt to novel expressions, slang, or changes in language usage. Consequently, their effectiveness diminished quickly as they failed to keep up with the dynamic nature of human communication.

Addressing these challenges became paramount in developing more sophisticated and advanced NLP models, which now strive to account for ambiguity, provide scalability, and exhibit adaptability to ensure accurate and relevant language processing capabilities.

The Statistical Revolution

In the late 1980s and 1990s, the Natural Language Processing (NLP) field experienced a monumental shift with the emergence of statistical methods. This revolutionary approach moved away from the conventional reliance on rigid, predetermined rules and instead embraced the power of statistical models to analyze vast amounts of real-world text data. Through this breakthrough, NLP pioneers were able to unlock the potential of predictive analysis, opening up new horizons in the realm of language processing and understanding.

- Probabilistic Models: Algorithms were developed to estimate the probability of certain words following others. This breakthrough enabled a more flexible and accurate language understanding, paving the way for improved natural language processing systems.

- Machine Translation: The field of machine translation was revolutionized by the introduction of statistical methods. These innovative techniques allowed for more nuanced and context-aware translations between languages, significantly improving the quality and fluency of automated translations.

- Data-Driven Approach: In this era, there was a noticeable shift towards a data-driven approach to language processing. Instead of relying solely on predefined rules, systems learned from actual language usage. This data-driven paradigm empowered language processing models to better adapt to real-world language variations and intricacies, resulting in more effective and accurate language understanding and generation.
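The probabilistic idea described above can be illustrated with a bigram model, which estimates how likely one word is to follow another simply by counting word pairs in a corpus. The following is a minimal sketch, not a reconstruction of any particular historical system; the toy corpus and the function names `train_bigram_model` and `next_word_probability` are assumptions made for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Count how often each word follows another in a whitespace-tokenized corpus."""
    follower_counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for prev_word, next_word in zip(tokens, tokens[1:]):
            follower_counts[prev_word][next_word] += 1
    return follower_counts

def next_word_probability(follower_counts, prev_word, next_word):
    """Maximum-likelihood estimate of P(next_word | prev_word)."""
    total = sum(follower_counts[prev_word].values())
    if total == 0:
        return 0.0  # prev_word was never seen with a follower
    return follower_counts[prev_word][next_word] / total

# Toy corpus, made up for illustration.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)
p_cat = next_word_probability(model, "the", "cat")  # "cat" follows "the" in 2 of 6 cases
p_dog = next_word_probability(model, "the", "dog")  # "dog" follows "the" in 1 of 6 cases
```

Because the probabilities come from counts rather than hand-written rules, adding more text to the corpus changes the model's behavior without anyone editing a rule, which is the essence of the data-driven shift.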

Evolution to Deep Learning

Over the past decade, deep learning techniques have become deeply integrated into Natural Language Processing (NLP), propelling it to unprecedented heights. By improving how machines analyze and understand human language, deep learning has revolutionized how we interact with and extract information from text data.

This synergy between deep learning and NLP has unlocked new possibilities and paved the way for groundbreaking advancements in various fields, including machine translation, sentiment analysis, and question-answering systems. The future holds even more exciting prospects as we continue to push the boundaries of what can be achieved through this powerful combination of technologies.

Neural Networks: Deep learning leverages multi-layer neural networks that are loosely inspired by the workings of the human brain. By enabling machines to learn from massive datasets, these networks empower them to make intelligent associations and predictions.

Word Embeddings: Innovative techniques like word embeddings go beyond mere word representations. They capture the rich semantic relationships between words by embedding them in a continuous vector space, enabling machines to grasp the contextual nuances of language more effectively.

Large Language Models: The emergence of powerful models like GPT-3 and BERT has revolutionized language understanding and generation. With the ability to process and generate human-like text at an unprecedented scale, these models hold immense potential for applications such as content generation, search, and conversational agents.

Real-time Applications: Deep learning has unlocked groundbreaking opportunities in real-time applications. This transformative technology has enabled tasks like speech recognition and sentiment analysis to be performed instantaneously, opening up new possibilities in voice-activated assistants, customer feedback analysis, and more.
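To make the word embedding idea above more concrete, the sketch below compares words by the cosine similarity of their vectors. The three-dimensional vectors are hand-picked, hypothetical values chosen only for illustration; real embeddings are learned from data and typically have hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical embeddings (not taken from any trained model).
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

sim_royal = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_fruit = cosine_similarity(embeddings["king"], embeddings["apple"])
```

With these made-up vectors, "king" ends up much closer to "queen" than to "apple", which is how a continuous vector space can express that some words are more related than others.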

Deep Learning in NLP

Introduction to Deep Learning

Deep Learning is a specialized field within machine learning that has garnered significant attention for its ability to model complex patterns and structures in data. It employs neural networks with three or more layers (an input layer, one or more hidden layers, and an output layer) to build an increasingly abstract understanding of the data it processes. Let's delve deeper into the key aspects of deep learning:

Simulating the Human Brain

The structure and function of the human brain inspire deep learning models. Just as neurons in the brain are connected by synapses, artificial neural networks consist of nodes (artificial neurons) connected by weighted pathways.

- Nodes: Nodes are computational units that take inputs, apply a mathematical function, and produce an output.

- Weighted Pathways: The connections between nodes have weights that are adjusted during training, determining the strength of the connection.

The network "learns" by adjusting these weights based on the prediction error, gradually improving its performance.
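The weight-adjustment loop described above can be sketched with a single node. This is a minimal illustration under stated assumptions: the node uses a sigmoid activation, the update rule is the gradient of the cross-entropy loss for that activation, and the task (learning logical OR) and the learning rate are arbitrary choices made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    """One node: a weighted sum of the inputs passed through an activation function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_step(weights, bias, inputs, target, learning_rate=0.5):
    """Nudge each weight in the direction that reduces the prediction error."""
    error = predict(weights, bias, inputs) - target
    new_weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * error
    return new_weights, new_bias

# Toy task chosen for illustration: learn logical OR.
examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

def total_error(weights, bias):
    return sum(abs(predict(weights, bias, x) - y) for x, y in examples)

weights, bias = [0.0, 0.0], 0.0
error_before = total_error(weights, bias)  # every prediction starts at 0.5

for _ in range(1000):
    for inputs, target in examples:
        weights, bias = train_step(weights, bias, inputs, target)

error_after = total_error(weights, bias)
```

Each pass makes a small correction proportional to the error, so the total error shrinks gradually rather than in one step.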

Layers in a Neural Network

A deep neural network consists of multiple layers:

- Input Layer: This layer receives the raw data and passes it to the hidden layers.

- Hidden Layers: These are the core of deep learning. Multiple hidden layers allow the network to learn complex features and representations of the data.

- Output Layer: This layer produces the final prediction or classification.

While a neural network with a single hidden layer can model simple relationships, additional hidden layers enable the network to capture more intricate patterns.
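A classic way to see why hidden layers matter is the XOR function, which no single node can compute on its own but which a small network with one hidden layer can. The sketch below uses hand-set weights and a step activation purely for illustration; in practice the weights would be learned, and the same principle of composing intermediate features is what motivates stacking further layers.

```python
def step(z):
    """Threshold activation: the node fires (1) only if its input is positive."""
    return 1 if z > 0 else 0

def neuron(weights, bias, inputs):
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

def xor_network(x1, x2):
    # Hidden layer: two intermediate features computed from the raw inputs.
    h_or = neuron([1, 1], -0.5, [x1, x2])   # fires if at least one input is 1
    h_and = neuron([1, 1], -1.5, [x1, x2])  # fires only if both inputs are 1
    # Output layer: "OR but not AND", which is exactly XOR.
    return neuron([1, -1], -0.5, [h_or, h_and])

truth_table = {(a, b): xor_network(a, b) for a in (0, 1) for b in (0, 1)}
```

The output node never sees the raw inputs; it works only with the features the hidden layer produced, which is the sense in which layers build on one another.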

How Deep Learning Applies to NLP

In the context of Natural Language Processing (NLP), deep learning is an innovative approach that has revolutionized the field. By leveraging neural networks with multiple layers, deep learning models can effectively capture and model complex patterns in data, enabling a more nuanced understanding and generation of human language.

One of the key advantages of deep learning in NLP is its capability for feature learning. Instead of relying on manual feature engineering, where linguists or domain experts manually design features for processing language data, deep learning algorithms can automatically discover the representations necessary for feature detection or classification from raw data. This saves time and effort and allows for more accurate and flexible language processing.

Deep learning models excel in contextual understanding. Understanding the context of words in a sentence is crucial for accurate language comprehension. Deep learning models can analyze the surrounding words and phrases, recognizing that the same word can have different meanings based on the specific situation. This contextual understanding enhances the accuracy and precision of language analysis and generation.

Deep learning plays a critical role in NLP by enabling the modeling of complex patterns, facilitating contextual understanding, and delivering scalable performance. Its ability to learn directly from data and capture high-level representations offers significant advantages in language processing tasks, contributing to advancements in areas such as machine translation, sentiment analysis, and question-answering systems.

Deep Learning Models and Algorithms (CNN, RNN)

Several models and algorithms play a crucial role in deep learning for Natural Language Processing (NLP):

Convolutional Neural Networks (CNNs): Originally designed for image recognition, CNNs have found application in NLP tasks such as sentence classification and sentiment analysis. Their filters capture local patterns such as short phrases, and stacking layers or pooling over the whole sentence lets the network combine those local patterns into a sentence-level representation.

Example: Sentiment Analysis

- Application: Sentiment analysis in product reviews.

- How It Works: A CNN can be used to analyze customer reviews of a product on an e-commerce site. The network scans the text using filters (kernels) to detect patterns or features such as words or phrases that are indicative of positive or negative sentiment.

- Outcome: The model can classify reviews as positive, negative, or neutral, helping businesses understand customer satisfaction and areas for improvement.
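The filter-scanning step described above can be sketched as a one-dimensional convolution over word vectors followed by max pooling. Everything specific here is an assumption for illustration: the two-dimensional word vectors, the tiny vocabulary, and the hand-set filter that responds to a negation followed by a positive word. In a real CNN both the word vectors and the filters are learned from labeled reviews.

```python
def conv1d(sequence, kernel, bias=0.0):
    """Slide a kernel over a sequence of word vectors and apply ReLU."""
    width = len(kernel)
    outputs = []
    for start in range(len(sequence) - width + 1):
        window = sequence[start:start + width]
        activation = bias + sum(
            k * x
            for kernel_row, word_vec in zip(kernel, window)
            for k, x in zip(kernel_row, word_vec)
        )
        outputs.append(max(0.0, activation))  # ReLU keeps only positive responses
    return outputs

def max_pool(feature_map):
    """Keep the strongest response, wherever in the sentence it occurred."""
    return max(feature_map)

# Hypothetical word vectors: [is_negation, is_positive_word].
word_vectors = {
    "not": [1.0, 0.0],
    "good": [0.0, 1.0],
    "very": [0.0, 0.0],
    "the": [0.0, 0.0],
    "movie": [0.0, 0.0],
    "was": [0.0, 0.0],
}

# Hand-set filter spanning two words: negation first, positive word second.
negated_positive_filter = [[1.0, 0.0], [0.0, 1.0]]

def negation_score(sentence):
    vectors = [word_vectors[word] for word in sentence.split()]
    # The bias of -1.0 means the filter fires only when both conditions hold.
    return max_pool(conv1d(vectors, negated_positive_filter, bias=-1.0))

score_negated = negation_score("the movie was not good")
score_plain = negation_score("the movie was very good")
```

The filter responds to "not good" but stays silent on "very good", showing how a convolutional filter picks up a local phrase pattern regardless of where it appears in the review.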

Recurrent Neural Networks (RNNs): Ideal for handling sequential data like text, RNNs can retain information from previous inputs. This enables them to comprehend the context of a sentence better and make more informed predictions. With their recurrent nature, RNNs can capture temporal relationships in language, making them valuable in various NLP applications.

Example: Machine Translation

- Application: Real-time translation between languages (e.g., English to Spanish).

- How It Works: An RNN can be used in a sequence-to-sequence model where one RNN (encoder) reads the input text in the source language and compresses the information into a context vector. Another RNN (decoder) then uses this vector to generate the translated text in the target language. The recurrent nature of the RNN allows it to consider the entire context of a sentence, making it effective for translation.

- Outcome: The model can provide real-time, context-aware translation between languages, facilitating communication across language barriers.
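The encoder half of the sequence-to-sequence setup described above can be sketched as a simple recurrent cell that reads one word at a time and carries a hidden state forward. This is a minimal illustration only: the weight matrices `W_HH` and `W_XH` are hand-picked hypothetical values rather than trained parameters, the vocabulary is three one-hot words, and the decoder is omitted.

```python
import math

def rnn_step(hidden, x, w_hh, w_xh):
    """One recurrent update: h_t = tanh(W_hh * h_(t-1) + W_xh * x_t)."""
    size = len(hidden)
    return [
        math.tanh(
            sum(w_hh[i][j] * hidden[j] for j in range(size))
            + sum(w_xh[i][k] * x[k] for k in range(len(x)))
        )
        for i in range(size)
    ]

def encode(sequence, w_hh, w_xh, hidden_size):
    """Read the sequence left to right; the final hidden state is the context vector."""
    hidden = [0.0] * hidden_size
    for x in sequence:
        hidden = rnn_step(hidden, x, w_hh, w_xh)
    return hidden

# Hypothetical weights and one-hot word vectors, chosen only for illustration.
W_HH = [[0.5, -0.3], [0.2, 0.4]]
W_XH = [[1.0, 0.0, -1.0], [0.0, 1.0, 0.5]]
vocab = {"dog": [1, 0, 0], "bites": [0, 1, 0], "man": [0, 0, 1]}

context_a = encode([vocab[w] for w in "dog bites man".split()], W_HH, W_XH, 2)
context_b = encode([vocab[w] for w in "man bites dog".split()], W_HH, W_XH, 2)
```

The two sentences contain the same words, yet they produce different context vectors, because each hidden state depends on everything read before it. That order sensitivity is what a decoder would rely on when generating the translation.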

Both Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have found distinct applications in Natural Language Processing (NLP), taking advantage of their specific strengths. CNNs are particularly effective in tasks that detect local patterns or features within the text, such as sentiment analysis. On the other hand, RNNs excel in tasks that require understanding language's sequential nature and contextual information, as seen in machine translation.

Large Language Models in NLP

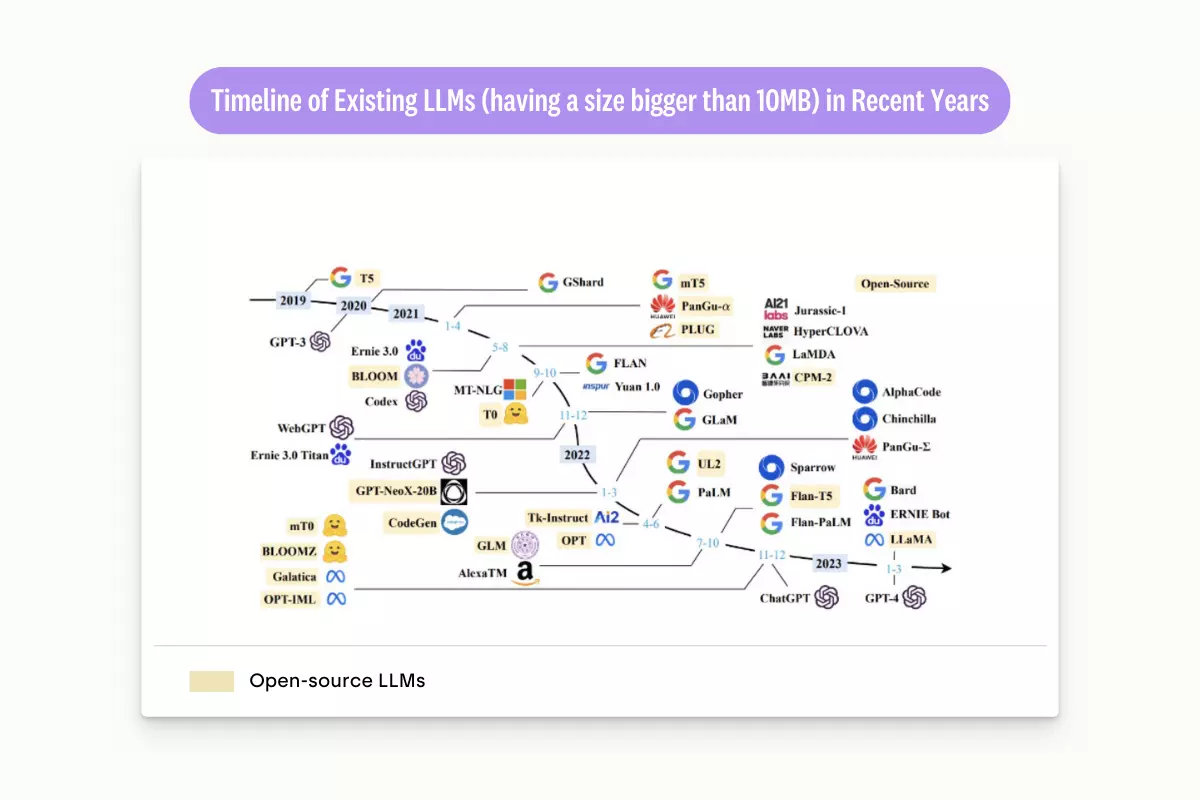

Introduction to Large Language Models (e.g., GPT-3)

Large language models, such as GPT-3, have garnered immense attention globally. Equipped with billions of parameters, these models can generate text resembling human language, respond to queries, and even assist in code composition. Their application in natural language processing (NLP) is extensive, offering opportunities to enhance various language-related tasks.

How Large Language Models Work with Deep Learning

Large Language Models leverage deep learning techniques to acquire knowledge from extensive datasets. By analyzing countless text documents, these models gain an understanding of human language structure, grammar, and nuances. This breakthrough has resulted in significant advancements and opened up numerous real-world applications, ranging from natural language processing and sentiment analysis to chatbots and language translation systems.

Applications and Use Cases

Large language models find applications across various industries:

Media and Publishing: Publishers harness machine learning text generation models to aid in content creation, drafting engaging articles and stories for their readers.

Customer Support and Service: By leveraging advanced machine learning question answering models, they are able to provide detailed and comprehensive answers to user queries, significantly improving customer satisfaction and creating a positive support experience.

Localization and Translation: With the adoption of state-of-the-art machine learning language translation models, they are able to facilitate accurate and precise language translation between multiple languages, ensuring seamless communication and understanding across global communities and cultures.

Software Development: Through the application of cutting-edge machine learning algorithms, they assist developers in writing and optimizing code, boosting productivity, and enabling the creation of efficient and high-quality software solutions.

These are just a few examples highlighting the diverse applications of large language models in various industries.

Why Deep Learning is Useful for NLP

Deep Learning has emerged as a powerful tool in the field of Natural Language Processing (NLP), enabling machines to understand and generate human language with remarkable accuracy. Let's explore why deep learning is beneficial for NLP:

Handling Large Datasets

- Scalability: Deep learning models are designed to handle vast amounts of data. In the context of NLP, they can be trained on extensive text corpora, learning from millions of sentences, phrases, and words.

- Continuous Learning: Deep learning networks can be further trained or fine-tuned as more data becomes available, rather than being rebuilt from scratch. This is vital in NLP, where language continually evolves.

- Example: Search engines like Google utilize deep learning to process and understand the enormous volume of text on the web, providing more relevant and context-aware search results.

Identifying Complex Patterns

- Hierarchical Feature Learning: Deep learning models learn hierarchical features, starting from simple patterns like individual letters or words and gradually building up to more complex structures like phrases and sentences.

- Contextual Understanding: Through recurrent neural networks (RNNs) and attention mechanisms, deep learning models can understand the context in which words are used, recognizing subtleties and nuances in language.

- Example: In sentiment analysis, deep learning models can detect not only explicit positive or negative words but also understand sarcasm, idioms, and complex expressions that contribute to the overall sentiment.
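The attention mechanisms mentioned above can be sketched as scaled dot-product attention: a query vector is compared with a key vector for every word, the scores are turned into weights with a softmax, and the weights are used to blend the value vectors. The word vectors below are hypothetical hand-picked numbers, and using the same vectors as both keys and values is a simplification made for the example.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context

# Hypothetical vectors for the words in "the river bank".
words = ["the", "river", "bank"]
vectors = [[0.1, 0.0], [1.0, 0.2], [0.8, 0.6]]

# Ask which words matter most when interpreting "bank".
weights, context = attend(vectors[2], vectors, vectors)
```

With these numbers, "river" receives more weight than "the", so the blended context vector for "bank" leans toward the word that helps disambiguate it.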

Limitations and Challenges

While deep learning offers significant advantages, it's essential to recognize its limitations and challenges in NLP:

- Data Dependency: Deep learning models require large amounts of labeled data for training. In some languages or specialized domains, such data may be scarce or expensive to obtain.

- Computational Resources: Training deep learning models demands substantial computational power and memory, which may be prohibitive for small organizations or individual researchers.

- Interpretability: Understanding how a deep learning model arrives at a particular decision can be challenging, leading to concerns about transparency and accountability.

- Overfitting: Without careful tuning and validation, models may become too specialized to the training data and perform poorly on unseen data.

NLP Tasks and Applications

The use cases of NLP are virtually limitless, spanning language comprehension, translation, and generation. A very practical example is chatbots, which are capable of comprehending questions that customers pose in natural language. These chatbots can derive the intent and meaning behind a customer's request and produce unscripted responses based on the available information. Though they are currently used mostly as a first line of response, they demonstrate a very practical application of deep learning and NLP in the real world. Listed below are more general use cases of NLP.

Language Translation

Language Translation is the task of converting text or speech from one language to another, a vital tool in our increasingly interconnected world. Deep learning has played a pivotal role in revolutionizing this field. For instance, Sequence-to-Sequence (Seq2Seq) models with attention mechanisms have become a cornerstone in machine translation. Unlike earlier methods that struggled with long sentences and complex structures, these models can capture long-term dependencies and nuances in language. An example of this can be seen in translating a complex legal document from English to French, where traditional methods might misinterpret the intricate legal terms. Seq2Seq models, however, can provide more accurate and natural translations by understanding the relationships between these terms.

The advent of Large Language Models (LLMs) built on the Transformer architecture has further enhanced translation quality. By considering the entire context of a sentence or paragraph, these models can produce more coherent translations. For example, in translating a novel from Japanese to English, an LLM can maintain the stylistic nuances and thematic coherence across chapters, something that might be lost with more fragmented translation methods. This ability to understand and preserve the subtleties of language has made LLMs an invaluable asset in tasks like literary translation, international business communication, and real-time translation services, bridging language barriers and fostering global understanding.

Here are some more real life examples:

- Google Translate: Google Translate uses a neural machine translation (NMT) model called GNMT. GNMT is a seq2seq model with attention, which means that it can learn long-term dependencies between words in a sentence. This allows GNMT to produce more accurate and natural-sounding translations than traditional machine translation methods.

- Yandex Translate: Yandex Translate also uses an NMT model. However, Yandex Translate's model is specifically designed for translating between Russian and other languages. This allows Yandex Translate to produce more accurate translations for Russian-language text.

- DeepL: DeepL uses a different type of deep learning model called a transformer model. Transformer models are known for capturing long-range dependencies in text, which makes them well-suited for machine translation. DeepL's transformer model is trained on a massive dataset of parallel text, which allows it to produce high-quality translations for a wide range of languages.

- Waygo: Waygo uses a convolutional neural network (CNN) to translate text in real-time. CNNs are well-suited for image recognition tasks, and they can also be used to translate text by recognizing the shapes and patterns of letters and words. Waygo's CNN is trained on a dataset of images of text in different languages, which allows it to translate text in real-time with high accuracy.

Grammar checking

Grammar Checking involves the identification and correction of grammatical errors in text, a task that has been significantly enhanced by deep learning. Traditional rule-based systems rely on a fixed set of rules, which leads to mistakes or oversights, especially with complex sentences or uncommon grammatical structures. Deep learning models, on the other hand, can learn the intricate rules and structures of language from examples. For example, in a sentence like "Their going to the store," where the homophone "their" has been used instead of "they're," deep learning models can recognize the error by analyzing the context and structure of the sentence.

The role of Large Language Models (LLMs) in grammar checking is even more profound. LLMs can provide context-aware corrections, understanding not just grammar rules but also a sentence's intended meaning. Consider a complex technical document filled with industry-specific jargon and expressions. An LLM can recognize the unique grammatical patterns within that specific field and offer grammatically accurate and contextually relevant corrections. This ability to tailor corrections to the context makes LLMs an essential tool for writers, editors, and professionals across various fields, ensuring that communication is grammatically correct, clear, and effective. Whether it's a student writing an essay, a novelist crafting a story, or a business professional preparing a report, grammar checking powered by deep learning and LLMs offers a sophisticated and nuanced approach to enhancing written communication.

Here are some more real-life examples:

- Grammarly: Grammarly uses a proprietary deep learning model that is trained on a massive dataset of text. The model can recognize a wide range of grammatical errors, as well as stylistic issues and clarity problems.

- QuillBot: QuillBot employs a method known as sequence-level knowledge distillation, where "titanic, truly enormous" models are compressed down to "very large" sizes. The process is carefully designed and tested to ensure that the compressed models retain their effectiveness while being more manageable in size.

Part-of-Speech tagging

Part-of-Speech Tagging assigns a part of speech to each word in a sentence, such as noun, verb, or adjective. This seemingly simple task is crucial in many language processing applications, from text analysis to machine translation. Deep learning, particularly Recurrent Neural Networks (RNNs), has significantly contributed to this area. For example, in the sentence "I fish for fish at the fish market," the word "fish" appears three times in different roles. RNNs can consider the sequential nature of the text, recognizing that the first "fish" is a verb, the second is a noun, and the third is a noun modifying "market" (functioning much like an adjective). This ability to understand the context allows for more accurate part-of-speech tagging.
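To show why context matters for tagging, here is a deliberately tiny tagger that resolves an ambiguous word by looking at the tag of the word before it. It is a hand-written rule-based sketch, not an RNN: a neural tagger would learn this kind of contextual cue from annotated data instead of having it coded by hand. The lexicon, the single context rule, and the tag names (borrowed from the Universal Dependencies convention) are assumptions made for the example.

```python
# Hypothetical mini-lexicon: each word maps to the tags it can take.
LEXICON = {
    "i": ["PRON"],
    "they": ["PRON"],
    "for": ["ADP"],
    "the": ["DET"],
    "fish": ["NOUN", "VERB"],  # ambiguous without context
    "swim": ["VERB"],
}

def tag(sentence):
    """Tag each word, using the previous tag to resolve ambiguous words."""
    tags = []
    for word in sentence.lower().split():
        candidates = LEXICON.get(word, ["NOUN"])  # unknown words default to NOUN
        if len(candidates) == 1:
            chosen = candidates[0]
        else:
            previous = tags[-1] if tags else None
            # Context rule: right after a pronoun, read the ambiguous word as a verb;
            # otherwise fall back to the first tag listed in the lexicon.
            if previous == "PRON" and "VERB" in candidates:
                chosen = "VERB"
            else:
                chosen = candidates[0]
        tags.append(chosen)
    return tags

tags_a = tag("They fish for fish")
tags_b = tag("the fish swim")
```

The same word "fish" is tagged as a verb after "They" and as a noun after "for" or "the", because the decision depends on what came before it in the sentence.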

Large Language Models (LLMs) take this further by leveraging vast training data to understand the intricate relationships between words. In the field of computational linguistics, researchers and professionals can utilize LLMs to analyze complex literary texts or historical documents. For instance, when analyzing Shakespearean plays, an LLM can recognize the archaic language and unique grammatical structures, providing accurate part-of-speech tagging that respects the historical context.

Here are more examples:

- Virtual assistants: Deep learning powers virtual assistants like Siri and Alexa. Part-of-speech tagging is used to understand the user's requests and generate accurate responses.

- Discourse analysis: Deep learning analyzes the structure and meaning of discourse, such as conversations, news articles, and books. Part-of-speech tagging is used to identify the parts of speech of words in the text, which helps the system to better understand the structure and meaning of the discourse.

- Natural language generation: Language models such as OpenAI's GPT-3 do not run a separate part-of-speech tagger. Instead, they learn grammatical patterns implicitly from their training text, which is what lets them generate grammatically correct output that humans can read and understand. Deep learning-based part-of-speech taggers remain useful for analyzing such text. For example, in the sentence "I went to the store and bought some milk," a tagger can identify the part of speech of each word, making the grammatical structure of the sentence explicit.

Automatic text condensing and summarization

Automatic text condensing and summarization is the task of reducing a lengthy text to a concise summary while retaining the essential information. This process is vital in fields where large volumes of information must be understood quickly. Deep learning has made significant strides in this area, with models that can identify the key points in a text and generate summaries that capture the core message without losing critical details. For example, in the legal profession, deep learning models can condense lengthy court rulings into concise summaries, allowing lawyers and judges to grasp the essential findings and legal reasoning quickly.
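As a rough illustration of what "retaining the essential information" can mean in practice, here is a minimal sketch of extractive summarization based on word frequency. This is a classical baseline, not a deep learning model: it scores each sentence by how frequent its words are in the whole text and keeps the top-scoring sentences. The stopword list and the function name `summarize` are assumptions made for this example.

```python
import re
from collections import Counter

# Small hand-picked stopword list; an assumption for this sketch.
STOPWORDS = {"the", "a", "an", "of", "to", "and", "in", "is", "that",
             "for", "on", "was", "it", "by", "with"}


def content_words(text):
    """Lowercase the text and return its words, minus stopwords."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text, max_sentences=1):
    """Keep the sentences whose words are most frequent in the whole text."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    frequency = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        # Average word frequency, so long sentences are not favored by length alone.
        return sum(frequency[w] for w in words) / max(len(words), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in keep)


ruling = ("The court ruled for the tenant. The weather was mild. "
          "The court found the landlord breached the lease.")
print(summarize(ruling, max_sentences=1))
```

Neural summarizers go well beyond this: instead of copying sentences, they can rephrase and merge information (abstractive summarization). The frequency baseline is still a useful reference point when judging whether a more complex model adds value.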

Large Language Models (LLMs) like GPT-3 extend this further: they can create summaries that are not only concise but also coherent and engaging. Consider the world of journalism, where news agencies must provide quick and accurate summaries of breaking news events. An LLM can analyze a complex news report on a political election and generate a summary that captures the key outcomes, candidates' positions, and implications, all in a reader-friendly format.

Some more examples:

- Legal: Deep learning models can condense lengthy court rulings into concise summaries, allowing lawyers and judges to grasp the essential findings and legal reasoning quickly. For example, the Lex Machina platform uses deep learning to provide case summaries and legal analysis, which helps lawyers to quickly identify relevant cases and make better legal arguments.

- Finance: BloombergGPT is a Large Language Model tailored for the finance sector. It can be used for text condensing and summarization by analyzing complex financial reports and news, and condensing them into concise and coherent summaries. This enables financial professionals to quickly grasp key insights, making it a valuable tool for investors, analysts, traders, and decision-makers in the fast-paced world of finance.

Syntactic analysis

Syntactic analysis is the process of analyzing a sentence's grammatical structure and identifying the relationships between words and phrases. It is a fundamental part of understanding human language, with applications ranging from machine translation to voice assistants. Deep learning, particularly Convolutional Neural Networks (CNNs), has contributed significantly to this field. For example, in a complex sentence like "The book that the student who just graduated wrote is on the shelf," CNNs can detect the nested relationships and determine that it is the student who wrote the book, and that the book is on the shelf.
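To show what the output of syntactic analysis might look like for that sentence, here is a small sketch that stores a dependency parse as plain data and queries it. The parse is written by hand for illustration, using simplified relation labels (`nsubj`, `relcl`, and so on) that are assumptions of this example; it is not the output of any particular parser or neural model.

```python
TOKENS = ["The", "book", "that", "the", "student", "who", "just",
          "graduated", "wrote", "is", "on", "the", "shelf"]

# One (head index, relation) pair per token; -1 marks the root.
# Hand-annotated, simplified labels for illustration only.
PARSE = [
    (1, "det"),      # The       -> book
    (9, "nsubj"),    # book      -> is
    (8, "obj"),      # that      -> wrote
    (4, "det"),      # the       -> student
    (8, "nsubj"),    # student   -> wrote
    (7, "nsubj"),    # who       -> graduated
    (7, "advmod"),   # just      -> graduated
    (4, "relcl"),    # graduated -> student
    (1, "relcl"),    # wrote     -> book
    (-1, "root"),    # is
    (9, "prep"),     # on        -> is
    (12, "det"),     # the       -> shelf
    (10, "pobj"),    # shelf     -> on
]


def subject_of(verb):
    """Return the token attached to the given verb as its subject, if any."""
    verb_index = TOKENS.index(verb)
    for i, (head, relation) in enumerate(PARSE):
        if head == verb_index and relation == "nsubj":
            return TOKENS[i]
    return None


def depth(index):
    """Count the arcs between a token and the root of the sentence."""
    steps = 0
    while PARSE[index][0] != -1:
        index = PARSE[index][0]
        steps += 1
    return steps


print("Who wrote?", subject_of("wrote"))
print("What is on the shelf?", subject_of("is"))
print("Nesting depth of 'graduated':", depth(TOKENS.index("graduated")))
```

Once the structure is explicit, questions such as "who wrote the book?" become simple lookups. The hard part, which is where learned models come in, is producing the parse automatically from raw text.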

Large Language Models (LLMs) take syntactic analysis even further by considering broader context and linguistic subtleties. In literary analysis, for instance, an LLM can recognize the unique syntactic structures used by different authors or within various literary genres. It can analyze a poem by Emily Dickinson or a novel by James Joyce, providing nuanced insights into their distinctive grammatical styles. Similarly, in scientific writing, LLMs can understand the specific syntax used in technical descriptions or complex equations, aiding in tasks like automated summarization or translation of scientific texts.

Some more examples:

- Voice assistants: Deep learning models are used to analyze the syntactic structure of spoken language, which helps them to understand the user's commands and respond appropriately. For example, the Amazon Echo and Google Home devices use deep learning to analyze the syntactic structure of spoken commands, which helps them to play music, set alarms, and answer questions.

- Chatbots: Syntactic analysis allows chatbots to break down sentences, analyze their structure, extract key information, determine intent, and generate coherent responses that follow the rules of grammar and syntax. Using the syntactic information, the chatbot classifies the intent of the sentence as a question, a comment, a command, and so on. This determines how the chatbot will respond.
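The intent classification step described for chatbots can be sketched with a few surface-level syntactic cues. This is a deliberately simple rule-based stand-in, not how any particular chatbot works: the word lists and the function name `classify_intent` are assumptions chosen for the example, and a production system would typically use a trained classifier instead.

```python
# Hypothetical word lists; real systems would learn these cues from data.
QUESTION_WORDS = {"who", "what", "when", "where", "why", "how", "which"}
AUXILIARIES = {"is", "are", "do", "does", "did", "can", "could",
               "will", "would", "should"}
COMMAND_VERBS = {"play", "set", "stop", "call", "open", "show", "turn"}


def classify_intent(utterance):
    """Classify an utterance as a question, command, or comment from its form."""
    tokens = utterance.lower().rstrip("?!. ").split()
    if not tokens:
        return "unknown"
    first = tokens[0]
    # Questions: a final question mark, a wh-word, or a fronted auxiliary verb.
    if utterance.strip().endswith("?") or first in QUESTION_WORDS or first in AUXILIARIES:
        return "question"
    # Commands: an imperative verb in first position, optionally after "please".
    if first in COMMAND_VERBS:
        return "command"
    if first == "please" and len(tokens) > 1 and tokens[1] in COMMAND_VERBS:
        return "command"
    return "comment"


for text in ["What time is it?", "Play some jazz", "I love this song."]:
    print(f"{text!r} -> {classify_intent(text)}")
```

The rules lean on word order (what comes first in the sentence), which is exactly the kind of structural information syntactic analysis provides in a more general and robust form.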

Conclusion

In this comprehensive exploration of Natural Language Processing (NLP), deep learning, and the role of Large Language Models (LLMs), we've journeyed through the multifaceted landscape of modern language technology. From the foundational understanding of NLP and its historical evolution to the cutting-edge applications in tasks like language translation, grammar checking, part-of-speech tagging, text condensing, and syntactic analysis, we've seen how technology is reshaping our interaction with language.

With its ability to handle large datasets and identify complex patterns, deep learning has revolutionized NLP, enabling machines to understand and generate human language with remarkable accuracy. The emergence of LLMs like BloombergGPT has further enhanced this field, providing nuanced and context-aware solutions tailored to specific domains like finance.

Through real-life examples and concrete applications, we've illustrated the transformative impact of these technologies across industries, academia, and everyday life. Whether it's a student utilizing grammar-checking tools, a business leader receiving concise executive briefings or a linguist analyzing the syntax of literary works, NLP powered by deep learning and LLMs is making language more accessible, insightful, and engaging.
