An Overview of Llama 3.1

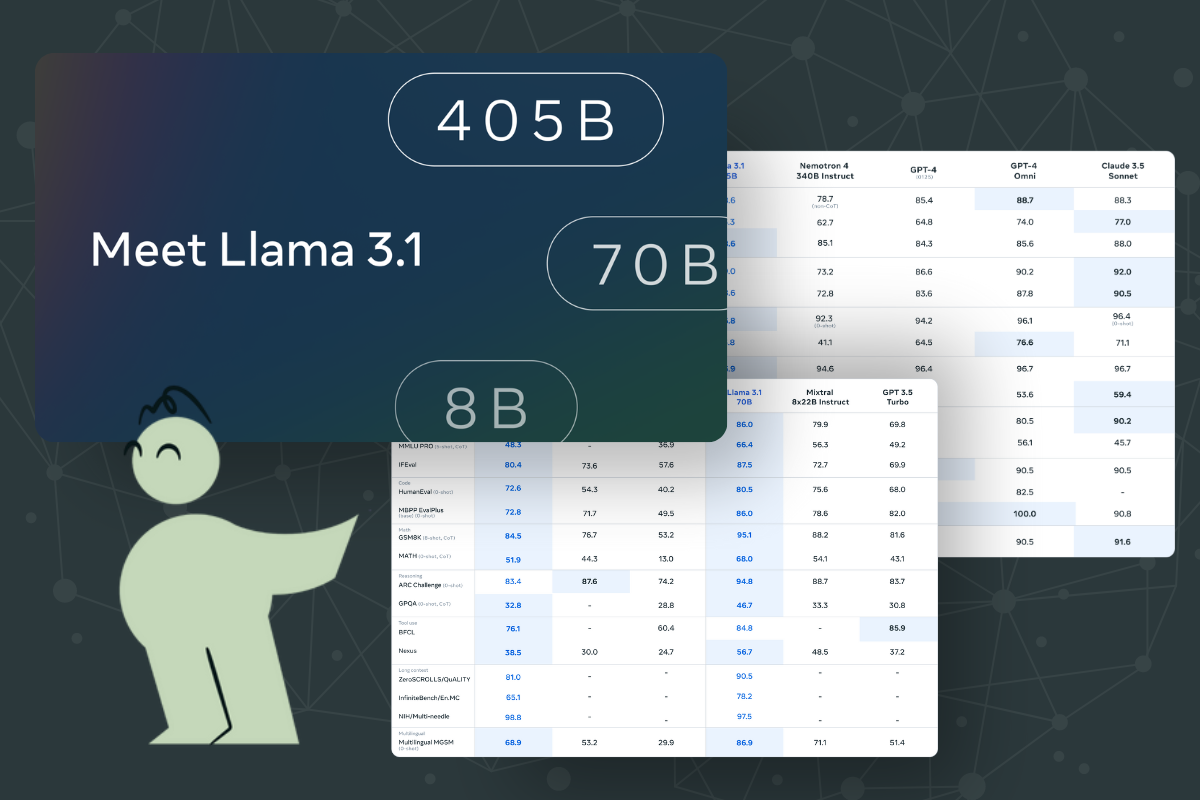

Llama 3.1 is Meta's latest flagship language model, boasting an impressive 405 billion parameters. This model represents a significant leap forward in the Llama models, showcasing Meta's commitment to advancing the field of artificial intelligence. Llama 3.1 is designed to be a versatile and powerful model, capable of handling a wide range of tasks across multiple languages.

The model officially supports eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai. However, the paper notes that "the underlying foundation model has been trained on a broader collection of languages," indicating its potential for even wider linguistic capabilities.

The decision to develop a 405B parameter model was driven by scaling laws and compute optimization. Meta researchers found that this size was "approximately compute-optimal according to scaling laws on our data for our training budget of 3.8 × 10^25 FLOPs." This massive scale-up - "almost 50× more than the largest version of Llama 2" - was aimed at pushing the boundaries of model performance and advancing the field towards artificial general intelligence (AGI).

And with the publication of Llama 3.1's thorough documentation, we look forward to seeing capable models, closed and open models, built on top of all this great work and research from Meta.

How Llama 3.1 differs

Llama 3.1 stands out from its predecessors and competitors in several key ways:

- Scale: With 405 billion parameters, it's significantly larger than previous Llama models. The paper states, "We train a model at far larger scale than previous Llama models: our flagship language model was pre-trained using 3.8 × 10^25 FLOPs, almost 50× more than the largest version of Llama 2."

- Compute-optimal size: The 405B parameter size was chosen based on scaling laws, with the paper noting, "This leads to a model size that is approximately compute-optimal according to scaling laws on our data for our training budget."

- Multilingual capabilities: While officially supporting eight languages, the model has a broader linguistic understanding due to its diverse training data.

- Performance: Llama 3.1 is competitive with leading AI models like GPT-4 across various tasks, as the paper states, "Our experimental evaluation suggests that our flagship model performs on par with leading language models such as GPT-4 across a variety of tasks, and is close to matching the state-of-the-art."

Training Llama 3.1

Pre-training data results from Meta's technical paper of the Llama 3 family.

The pre-training data for Llama 3.1 was meticulously curated from various sources, with an emphasis on high-quality and diverse content. The researchers used "about 15T multilingual tokens, compared to 1.8T tokens for Llama 2," significantly expanding the model's knowledge base.

Llama 3.1's training dataset

Overall, the Llama 3.1 pre-training dataset was a well-balanced mix of a variety of general domains, carefully processed and adjusted to ensure high-quality and diverse training inputs.

Key domains included:

- General Knowledge: This included various information from the web, filtered and cleaned for quality.

- Mathematical and Reasoning: Specific data to enhance the model's capabilities in mathematical problem-solving and logical reasoning.

- Multilingual Texts: Text data from various languages is processed to ensure high quality.

- Code: A significant portion of the dataset focused on different programming languages to improve code generation and understanding capabilities.

The multilingual data includes high-quality text from multiple languages, such as German, French, Italian, Portuguese, Hindi, Spanish, and Thai. However, Meta claims that the total number of languages used for the corpus is 34. This multilingual corpus was sourced and processed to enhance the model's performance across different languages. For the officially supported list, human annotations and data from other NLP tasks were included to further augment the multilingual dataset.

For programming, the dataset included code snippets from various languages aimed at enhancing the model's code generation and understanding capabilities. The high-priority programming languages included:

- Python

- Java

- JavaScript

- C/C++

- TypeScript

- Rust

- PHP

- HTML/CSS

- SQL

- Bash/Shell

Synthetic data generation techniques were used to translate data from common languages to less common languages, addressing gaps between major programming languages and less common ones.

Training Data Processing

Of course, due to this model's immense size, Meta has had to use smaller models that were leveraged to help with the filtering, curation, and iteration of data to train and fine-tune Llama 3.1.

- Data Curation and Cleaning: The initial step involved collecting a large-scale training corpus from various data sources up to the end of 2023. Multiple de-duplication and data-cleaning mechanisms were applied to each data source to obtain high-quality tokens. Domains containing personally identifiable information (PII) and known adult content were removed to ensure the safety and appropriateness of the data.

- Web Data Curation: Much of the data was obtained from the web. A rigorous cleaning process was employed to ensure the data quality, including removing duplicate content and filtering out low-quality documents.

- Determining the Data Mix: To create a high-quality language model, it was essential to carefully determine the proportion of different data sources in the pre-training mix. This was achieved through knowledge classification and scaling law experiments. We provide an explanation of these two techniques below.

- Adjustment of Data Mix During Training: Several adjustments were made to the data mix during training to enhance performance on specific tasks. This included increasing the percentage of non-English data to improve multilingual performance, upsampling mathematical data to boost reasoning capabilities, adding more recent web data to update the model's knowledge cut-off, and downsampling subsets identified as lower quality.

- Annealing: In the final stages of pre-training, a process called annealing was used. This involved upsampling high-quality data sources and linearly reducing the learning rate to zero over the last 40 million tokens. This process also included Polyak averaging of model checkpoints to produce the final pre-trained model. We provide further explanation of annealing later on in this article.

- Specialized Training for Multimodal Capabilities: For Llama 3's multimodal aspects, separate encoders were trained for images and speech using large datasets of image-text and speech-text pairs. These were then integrated with the language model via specialized adapters trained on paired data.

Using Knowledge Classification and Scaling Laws to find the right data mix

The process of determining the data mix for Llama 3.1's pre-training dataset using Knowledge Classification and Scaling Laws involved a series of systematic steps to optimize the dataset composition for enhanced model performance. Here's how each technique was applied:

Knowledge Classification

The goal was to categorize the web data into different knowledge domains to ensure a balanced and diverse dataset.

- Classifier Development: A classifier was developed to identify and categorize different types of information present in the web data.

- Categorization: The classifier categorized the data into various domains, such as arts and entertainment, science, technology, etc.

- Downsampling Over-represented Categories: Categories that were overrepresented, like arts and entertainment, were downsampled. Fewer tokens from these categories were included in the final data mix, ensuring that no single category dominated the dataset.

- Balancing the Mix: The data mix was balanced to provide a comprehensive knowledge base for the model by downsampling overrepresented categories and ensuring the inclusion of underrepresented ones.

Scaling Laws

Scaling laws were used to determine the optimal size of the model and the data mix required to achieve the best performance given the computational budget.

- Training Small Models: Small models were trained on different data mixes. These models were much smaller and less resource-intensive than the final model, making it feasible to experiment with various data compositions.

- Performance Prediction: The performance of these small models on benchmark tasks was analyzed to predict how a larger model would perform with the same data mix.

- Scaling Law Experiments: These experiments helped understand the relationship between the amount of data, the computational resources required, and the resulting model performance. This involved identifying compute-optimal models by training them across a range of compute budgets and data mixes.

- Data Mix Adjustments: The data mix was iteratively adjusted based on the scaling law experiments. This involved adding or removing specific data types to find the optimal balance that maximized model performance on various tasks.

This iterative process involved training small models, evaluating their performance, adjusting the data mix, and repeating the process. Each iteration aimed to better align the data mix with the optimal balance of diverse knowledge domains and computational efficiency.

We've seen this iterative process across the development of datasets for large language models; one of the best-documented ones is Hugging Face's FineWeb dataset, where early benchmarking signals were used to determine the effectivity of each step in building the corpus.

What is annealing, and how does it work?

In the context of training large language models like Llama 3.1, "annealing" refers to a specific stage in the training process designed to fine-tune the model and improve its performance on certain tasks. The term is borrowed from metallurgy, where annealing involves heating and then slowly cooling a material to remove stresses and toughen it.

For Llama 3.1, annealing involves several key components:

- Gradual Reduction of Learning Rate: During the final stages of pre-training, the learning rate is linearly reduced to zero. This helps stabilize the model's parameters and improve its generalization capabilities by minimizing the risk of overfitting to the training data. The gradual reduction ensures that the model makes smaller adjustments to its weights, which helps in fine-tuning the model's performance on specific tasks.

- Upsampling High-Quality Data: During the annealing phase, the data mix is adjusted to prioritize high-quality data sources. This means that data deemed to be of the highest quality is used more frequently in this final stage of training. This step is crucial for ensuring that the model's final adjustments are based on the best available data, enhancing its overall performance and accuracy.

- Polyak Averaging: Polyak averaging (also known as checkpoint averaging) is employed to produce the final pre-trained model. This technique involves averaging the model's parameters across multiple checkpoints saved during the annealing phase. Polyak averaging helps smooth out the parameter values, leading to a more stable and reliable model.

To break it down even further, here's how annealing works:

- Implementation:

- During the final stages of training, the learning rate gradually decreases following a predetermined schedule, often linearly. This gradual reduction ensures that the model converges smoothly to a set of parameters representing a local optimum.

- The data mix is adjusted to include more high-quality data, which is used more frequently in the training batches.

- Checkpoints are saved periodically, and at the end of the annealing phase, the parameters from these checkpoints are averaged to produce the final model.

- Training Stability:

- The model makes increasingly smaller parameter updates by gradually reducing the learning rate. This helps avoid large swings in the parameter values, which can destabilize the model and lead to overfitting.

- High-quality data ensures that the final adjustments to the model parameters are based on the best available information, further stabilizing the model and improving its accuracy.

- Final Model Output:

- The final model produced after the annealing phase has well-tuned parameters and smoothed out through the checkpoint averaging process. This results in a more robust, accurate model, and capable of generalizing well to new, unseen data.

Fine-tuning Llama 3.1

The post-training process for the Llama 3 model employs a variety of fine-tuning datasets to enhance the model's performance across multiple domains. The datasets are curated to ensure a balanced and comprehensive training regimen, comprising human-annotated prompts, synthetic data, and specialized data sources.

Supervised Finetuning Data (SFT) for Llama 3.1

Human Annotation Prompts: This dataset consists of prompts collected through human annotations, where responses are selected using a rejection sampling method. This ensures that only high-quality responses are used for further training.

Synthetic Data: Targeting specific capabilities, synthetic data is generated using text representations and text-input large language models (LLMs). This data includes various question-answer pairs designed to improve the model's performance in targeted areas.

Fine-tuning Data Sources

General English Data: Making up 52.66% of the dataset, this category includes general English examples with an average of 6.3 turns per example and 974.0 tokens in context. It covers a broad range of topics to ensure the model's versatility in handling everyday English language tasks.

Code Data: This category represents 14.89% of the dataset and focuses on coding examples. With an average of 2.7 turns per example and 753.3 tokens in context, it aims to enhance the model's ability to understand and generate code.

Multilingual Data: Comprising 3.01% of the dataset, multilingual data includes examples from multiple languages, averaging 2.7 turns and 520.5 tokens in context. This data is crucial for improving the model's multilingual capabilities.

Exam-like Data: Designed to simulate exam questions and answers, this dataset accounts for 8.14% of the total data. Each example has an average of 2.3 turns and 297.8 tokens in context, focusing on the model's ability to handle structured and formal question-answer formats.

Reasoning and Tools Data: This significant portion, representing 21.19% of the dataset, includes examples that require advanced reasoning skills and tool usage. With an average of 3.1 turns and 661.6 tokens in context, this data helps refine the model's problem-solving abilities.

Long Context Data: Although a small part of the dataset at 0.11%, long context data is vital for training the model to handle extensive contexts. Each example in this category averages 6.7 turns and a substantial 38,135.6 tokens in context, pushing the model's capabilities in processing long-form content.

These diverse and well-curated datasets play a crucial role in the supervised fine-tuning stage of Llama 3's post-training, ensuring that the model can perform effectively across various tasks and applications.

Fine-tuning process

- Supervised Finetuning (SFT): This stage involves finetuning the pre-trained checkpoints using supervised learning techniques. The data for this stage includes human annotations and synthetic data. The objective is to optimize the model's performance on specific tasks through a standard cross-entropy loss function.

- Reward Modeling: A reward model is trained on top of the pre-trained checkpoint using human-annotated preference data. This data consists of preference pairs where human annotators rank responses as chosen, rejected, or sometimes edited for improvement. The reward model's training objective is to learn from these rankings without a margin term in the loss, which simplifies the model and avoids diminishing returns from data scaling.

- Direct Preference Optimization (DPO): This involves further aligning the model with human preferences by directly optimizing the model parameters based on the preferences expressed in the data. This helps in enhancing the model's ability to generate preferred outputs. We further explain their process below.

- Rejection Sampling: This technique is used to improve the quality of the training data. Responses generated by the model are sampled and ranked, and only the highest quality responses are used for further training. This helps in refining the model's outputs by filtering out less desirable responses.

- Iterative Rounds: The entire post-training process is conducted in multiple rounds. Each round includes supervised finetuning, reward modeling, and direct preference optimization. This iterative approach helps in gradually improving the model's performance and alignment with human preferences.

- Custom Data Curation: Specific data curation strategies are employed to enhance the model's capabilities in areas such as reasoning, coding, factuality, multilingual understanding, tool use, long context, and precise instruction following.

- Model Averaging: Model averaging is a technique employed at the end of the post-training phase of Llama 3.1 to enhance the stability and performance of the language model. We explain this further below.

Applying DPO to Llama 3.1

Meta conducted Direct Preference Optimization (DPO) as a key component of the post-training process for Llama 3.1 to enhance the model's alignment with human preferences. Here's an overview of how this process was executed:

- Initial Setup:

- The process begins with training on human-annotated preference data, using the best performing models from previous alignment rounds as the base.

- The primary goal is to ensure that the training data aligns well with the distribution of the policy model being optimized in each round, facilitating continuous improvement in model performance.

Preference Data Collection:

- Preference data is collected from human annotators who rank multiple responses generated by the model for each prompt. These rankings are based on the quality and preference of the responses, with annotations creating clear rankings such as edited > chosen > rejected.

- This data includes responses categorized into various levels of preference (significantly better, better, slightly better, or marginally better) and sometimes includes edited responses to further improve the chosen response.

- Algorithmic Adjustments:

- Masking Formatting Tokens: Special formatting tokens, including header and termination tokens, are masked in the DPO loss calculation. This helps stabilize the training by preventing common tokens from contributing to the loss in conflicting ways.

- Regularization with NLL Loss: An additional negative log-likelihood (NLL) loss term is added to the chosen sequences with a scaling coefficient. This further stabilizes DPO training by maintaining desired formatting and preventing the decrease in log probability of chosen responses.

- Training Hyperparameters: The learning rate for DPO is set at 1e-5, and the β hyper-parameter is set at 0.1. These settings are chosen based on their effectiveness in optimizing large-scale models and ensuring high performance on benchmarks.

- Iterative Rounds: DPO is conducted over multiple rounds. In each round, new preference annotations and SFT data are collected. This iterative approach ensures continuous improvement by refining the model's responses based on the latest and most relevant data.

- Comparison with Other Algorithms: DPO is preferred over other on-policy algorithms like PPO (Proximal Policy Optimization) due to its lower computational requirements and better performance on instruction-following benchmarks. This makes DPO a more efficient and effective for aligning large-scale models like Llama 3.

Model averaging for post-training Llama 3.1

Here's how Meta conducted model averaging during the post-training process:

- Impact on Stability and Performance:

- The main advantage of model averaging is that it leads to a more robust and stable model. Averaging helps in mitigating the effects of any transient noise or instabilities that might occur in individual checkpoints.

- This technique ensures that the final model is less likely to exhibit overfitting to the specific data points seen in the later stages of training, thus improving its overall performance on unseen data.

- Implementation in Llama 3.1:

- For Llama 3.1, the averaging was implemented during the post-training phase, mainly focusing on the last few stages of training, where fine-tuning and adjustments are critical.

- This approach is consistent with the broader objective of the post-training phase, which is to refine the model's capabilities and align it more closely with human preferences and real-world tasks.

Model averaging, as part of the post-training strategy for Llama 3.1, plays a vital role in ensuring that the final model is high-performing and stable and ready for deployment in various applications.

Quality Techniques used for Llama 3.1

The post-training phase of Llama 3.1 incorporates rigorous data processing and quality control measures to ensure the model is trained on high-quality data. Here's what we've learned from their paper:

- Rule-Based Data Removal:

- Excessive Use of Emojis and Exclamation Points: In the initial rounds of post-training, it was noted that the model generated responses with an overabundance of emojis and exclamation points. To address this, rule-based strategies were implemented to identify and filter out such elements, maintaining a professional and balanced tone in the dataset.

- Mitigating Overly-Apologetic Tone: The dataset showed a tendency towards an overly apologetic tone, characterized by phrases like "I'm sorry" or "I apologize." By identifying these common phrases and adjusting their frequency, the model's responses were balanced to avoid an excessive apologetic tone.

- Topic Classification: A fine-tuned Llama 3 8B model was utilized as a topic classifier to categorize data into broad and specific categories. This classification system enabled the model to understand and process a wide range of topics effectively. For instance, broad categories included "mathematical reasoning," while specific topics encompassed areas like "geometry and trigonometry".

- Quality Scoring: Reward Model and Llama-Based Signals: Quality scores were generated using signals from both the reward model (RM) and the Llama model. The RM-based scoring selected data in the top quartile as high quality. Additionally, the Llama model assessed each sample, rating general English data on aspects like accuracy, instruction following, and tone, and coding data on bug identification and user intention. Combining both signals resulted in a robust quality assessment process.

- Difficulty Scoring: Instag and Llama-Based Scoring: Difficulty was measured through Instag, which involved intention tagging of prompts where more intentions indicated higher complexity. Llama 3 also rated dialogs on a three-point difficulty scale, ensuring a varied and challenging dataset for training. We'll have a closer look into this technique further down the article.

- Semantic Deduplication: Using RoBERTa, complete dialogs were clustered based on semantic similarity. Within each cluster, dialogs were sorted by a combined quality and difficulty score. A greedy selection process was then applied, retaining only those examples with less than a threshold cosine similarity to previously selected examples. This method ensured that the dataset was free from redundant or overly similar samples, maintaining diversity and relevance.

- Final Quality Assurance: Quality tuning culminated in curating a highly selective dataset, where samples were rewritten and verified either by human annotators or the best-performing models. This refined dataset was then used to train Direct Preference Optimization (DPO) models. The result was a significant improvement in human evaluations without compromising the generalization abilities of the model, as verified by extensive benchmarks.

Instag: A Key Component in Evaluating Data Complexity for Llama 3.1

In the post-training phase of Llama 3.1, Instag plays a critical role in scoring the difficulty of training data. This innovative method ensures that the model is exposed to a variety of challenging prompts, enhancing its overall capabilities. Here's an in-depth look at what Instag is and how it functions:

What is Instag?

Instag, short for "Intention Tagging," is a method used to evaluate the complexity of prompts in the training data. The primary goal of Instag is to prioritize examples that present more significant challenges for the model, thereby improving its ability to handle complex tasks.

Implementation:

- Intention Tagging:

- Instag involves prompting the Llama 3 model to perform intention tagging on supervised finetuning (SFT) prompts. During this process, the model identifies and tags the number of distinct intentions or purposes within each prompt.

- The principle behind intention tagging is that prompts with more intentions are inherently more complex and require a higher level of understanding and reasoning from the model.

- Scoring System:

- The complexity of each prompt is quantified based on the number of intentions tagged by the model. More intentions imply a higher complexity score.

- This tagging and scoring process allows the training data to be sorted and prioritized, ensuring that more complex and challenging prompts are given greater importance during training.

- Integration with Llama-Based Scoring:

- Instag is used alongside Llama-based scoring, where the model rates the difficulty of dialogs on a three-point scale. This combined approach provides a comprehensive assessment of both the quality and difficulty of the training data.

- The dual scoring system ensures that the dataset is not only of high quality but also presents a wide range of difficulties, preparing the model to handle various real-world scenarios effectively.

Benefits:

Enhanced Model Performance: By focusing on more complex examples, Instag helps in fine-tuning the model to improve its performance on challenging tasks, such as advanced reasoning and problem-solving.

Balanced Data Mix: The use of Instag ensures a balanced mix of easy, medium, and hard prompts, which is crucial for training a robust and versatile model.

Improving Llama 3.1's coding, multilingual capabilities, and more

Special attention was given to improving Llama 3.1's more specific capabilities. In Meta's paper, the llama models were additionally trained on coding skills, multilingualism, math and reasoning, long context lengths, tool use, factuality, and steerability. Extensive human evaluations and annotations were also used to ensure high-quality performance throughout Meta's optimized capabilities.

Llama 3.1's coding skills

Llama 3.1 demonstrates strong performance on coding benchmarks across various tasks. The evaluation uses the pass@N metric, which assesses the pass rate for a set of unit tests among N generations. Key results include:

- Python Code Generation:

- HumanEval: Llama 3 8B achieves 72.6±6.8, 70B scores 80.5±6.1, and 405B achieves 89.0±4.8.

- MBPP: Llama 3 8B achieves 60.8±4.3, 70B scores 75.4±3.8, and 405B reaches 78.8±3.6.

- HumanEval+: The results are 67.1±7.2 for Llama 3 8B, 74.4±6.7 for 70B, and 82.3±5.8 for 405B.

- Multi-Programming Language Code Generation:

- Evaluations are conducted on MultiPL-E, which includes translations of problems from HumanEval and MBPP into various programming languages such as C++, Java, PHP, Typescript, and C#.

- For HumanEval, Llama 3 8B achieves 52.8 ±7.7 in C++, and Llama 3 405B scores 82.0 ±5.9.

- For MBPP, Llama 3 8B achieves 53.7 ±4.9 in C++, and Llama 3 405B scores 67.5 ±4.6.

With that being said, here's how Meta was able to achieve these impressive scores:

- Expert Training:

- According to Meta, Llama 3.1 trained a specialized code expert by continuing pre-training on a mixture with over 85% code data.

- This approach involved fine-tuning the model on high-quality, repository-level code data to extend its context length to 16K tokens, following methodologies similar to those used in CodeLlama.

- Synthetic Data Generation:

- Synthetic data was generated to address issues in code generation, such as instruction following, syntax errors, incorrect code generation, and bug fixing.

- This process involved creating a large dataset of synthetic coding dialogues, amounting to over 2.7 million examples.

- Several methods were employed:

- Execution Feedback: Generating solutions to programming problems and analyzing correctness through static and dynamic analysis.

- Programming Language Translation: Translating data from common programming languages (like Python and C++) to less common ones (like Typescript and PHP) to bridge performance gaps.

- Backtranslation: Generating documentation, explanations, and then translating back to code, using quality filters to ensure fidelity.

- System Prompt Steering and Filtering:

- During rejection sampling, code-specific system prompts were used to enhance readability, documentation, and thoroughness.

- A "model-as-judge" approach was utilized to filter training data based on code correctness and style, retaining only the highest-quality samples.

Making Llama 3.1 multilingual

Llama 3.1 shows impressive performance across various multilingual benchmarks. Key results include:

- Multilingual MMLU:

- Llama 3 models were evaluated on a translated version of the Massive Multitask Language Understanding (MMLU) benchmark.

- The performance in a 5-shot setting was as follows:

- Llama 3 8B: 58.6

- Llama 3 70B: 78.2

- Llama 3 405B: 83.2

.png)

_logo%201.svg)

.webp)