In this article, we’ll look into data augmentation and more specifically into some useful techniques for image augmentation. Also, we’ll explore advanced labeling techniques like direct labeling, semi-supervised labeling, active learning, and weak supervision.

Data Augmentation

A usual method for getting more labeled data is by labeling unlabeled data. But another method is to augment our existing data to create more labeled examples. With data augmentation, we expand the dataset by adding slightly modified copies of existing data or creating new synthetic data from our existing data. We will focus on augmenting image data in this blog. Besides images, text, audio, video, etc and many other data types can also be augmented. Data augmentation can also be used in signal processing and speech recognition.

Different Image Data Augmentation Techniques

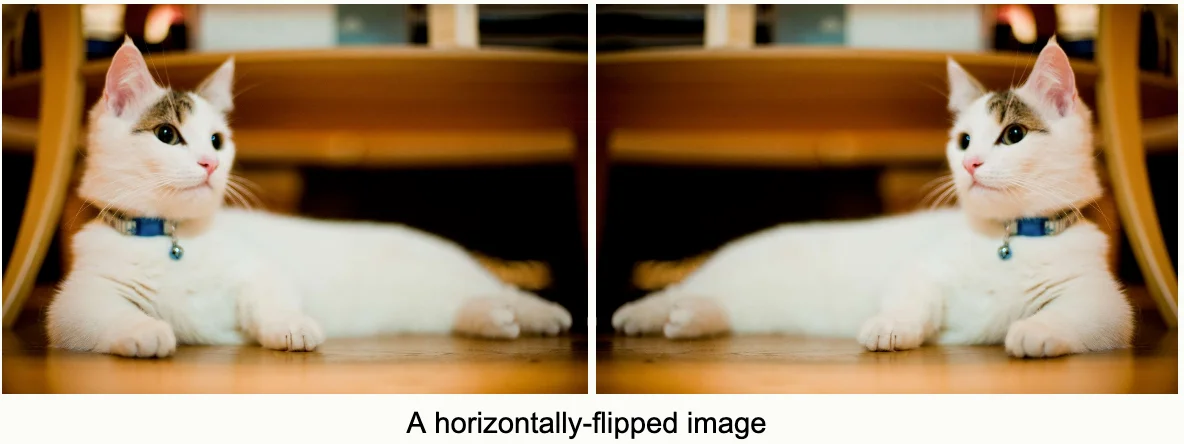

- Position Augmentation: Cropping, Flipping, Scaling, Translation, Rotation, Noise addition, Padding.

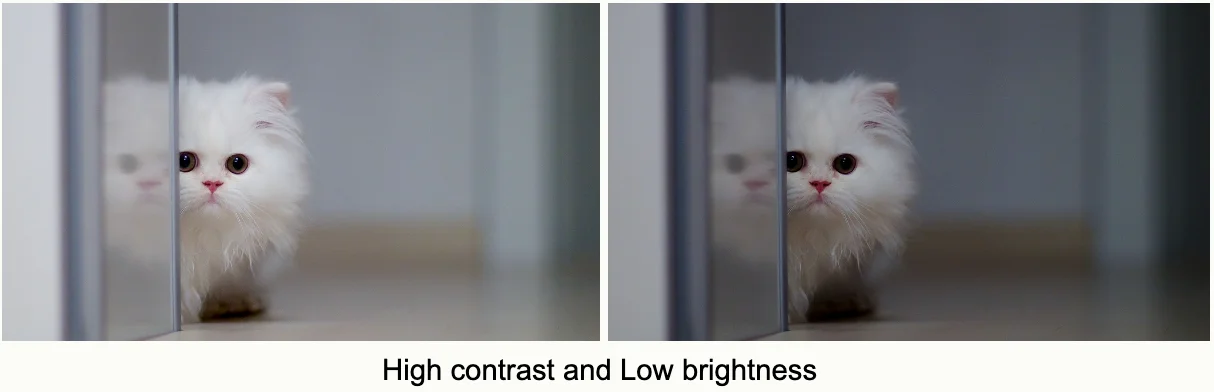

- Color Augmentation: Brightness, Contrast, Saturation, Warmth, Hue, Grain.

How can Data Augmentation help?

Data augmentation is a way to improve our model's performance, expand datasets, add new valid examples that fall into regions of the feature space that are covered by our real examples (examples similar to real examples, and add valid examples in a way that improves coverage of our feature space. Keep in mind that if we add invalid examples, we run the risk of learning the wrong answer or at the very least introducing unwanted noise. So be careful of only augmenting our data invalid ways.

With the existing data, it is possible to create more data by making minor alterations to the existing samples. Simple tasks like flips or rotations are an easy way to double or triple the number of images in a dataset. Simple tasks like rotating or flipping an image are easy ways to double or triple the number of images in our dataset.

Augmenting an image dataset using TensorFlow

CIFAR is the Canadian Institute for Advanced Research. The CIFAR-10 dataset is a collection of images commonly used to train machine learning models and computer vision models. CIFAR-10 contains:

- 60,000, 32 by 32 color images.

- 10 different classes with 6000 images in each class.

Augmenting the CIFAR-10 dataset:

- Pad the image with a black, four-pixel border.

- Randomly crop a 32 x 32 region from the padded image.

- Flip a coin to determine if the image should be flipped horizontally left or right.

Since color images are tensors, TensorFlow provides very useful functions to perform augmentations on image datasets.

Source: https://www.cs.toronto.edu/~kriz/cifar.html

Let's look at a chunk of code that encapsulates the steps for doing a left, right flip. Define the augmentation operation:

Code: https://gist.github.com/DamianArado/b07c2530d9ba1c97385ff1f400f8afaf

First, we define a function that will use to augment image datasets. Then we'll use tf.image.resize_with_crop_or_pad which allows you to resize or crop the image. In this case, we're going to pad. tf.image.random_crop generates a crop of size height by width across all channels. And then tf.image.random_flip_left_right does the same thing with rotations like flipping left or right. So the function returns the altered image to be appended to the dataset.

Here is an example after applying padding, cropping, and left-right flip:

Other Advanced-Data Augmentation Techniques

Apart from simple image manipulation, there are other advanced techniques for data augmentation:

- Semi-supervised data augmentation

- Unsupervised data augmentation (UDA)

- Semi-supervised learning with GANs (Generative Adversarial Networks).

- Policy-based data augmentation like AutoAugment.

- Neural Style transfer

Some useful Data Augmentation Libraries

- Scikit-image

- OpenCV

- imgaug

- albumentations

- Streaming over lightweight data transformations

- pytorch/vision: Datasets, Transforms and Models specific to Computer Vision

- facebookresearch/AugLy: A data augmentations library for audio, image, text, and video.

Key Takeaways

Data augmentation is a great way to increase the number of labeled examples in our dataset. It generates artificial data by creating new examples which are variants of the original data. It increases the size of our dataset and the sample diversity, which results in better feature space coverage. Data augmentation provides a means to improve accuracy, and generalization and avoid overfitting.

Advanced Labeling Techniques

Why is Advanced Labeling important?

Machine learning is growing everywhere and machine learning requires training data and labeled data if we are doing supervised learning. That means we need labeled training sets. But manually labeling data is often expensive and difficult, while unlabeled data is typically pretty cheap and easy to get. An unlabeled dataset contains a lot of information that can help improve our model. Advanced labeling techniques help us reduce the cost of labeling data while leveraging the information in large amounts of unlabeled data.

For the uninitiated, in order to build and train any object detection model or classification model—whether using a standalone convolutional neural network or another framework like YOLOv4—we need to have a labeled or annotated image dataset. That's where Kili can help. Kili Technology provides an advanced image annotation tool that streamlines the data labeling process, making it fast and simple. You can read more about the Image Annotation Tool here.

Kili Technology

Labeling is an ongoing and often, a critical process for your application and your business but at the end of the day creating datasets requires labels, so you need to think about how exactly you will do that. Before we go further, it would be really cool to know that, with Kili Technology, you can easily accelerate the labeling process by connecting one of your models to pre-annotate the data. The work of the annotators is 2 to 5 times faster!

Whether your annotation project requires rough tagging or extreme granularity, Kili has what you need. Leverage out-of-the-box tools like superpixel labeling for object detection, interactive segmentation, propagation, tracking on videos, and more, or build your own operators using Kili’s Python SDK. Streamline and accelerate the process with transfer learning, active learning, and programmatic labeling.

It is the All-in-one Platform for Quality Labeling at Scale. For diving deep, we recommend you check out our comprehensive documentation.

Direct Labeling

Direct labeling or Process Feedback is a way of continuously creating new training data that we will be going to use to retrain our model. This can be understood using 3 steps:

- 1. First, we take the features from the inference requests that our model gets. Here, inference requests essentially mean the predictions that our model is being asked to make and the features that are provided to make those predictions.

- 2. Then, we get labels that can be used with respect to the features for the inference requests that we got in step 1. We get these labels by monitoring the systems (predictions that our system gets) using the feedback from those systems.

- 3. And lastly, we need to make sure to join the results that we get from monitoring systems to the original inference requests. They can be hours, days, or even weeks apart.

It may happen that we run batches on the 1st of the month and get feedback on the 23rd, and so on, so we need to make sure that we can do those joins in order to apply the labels.

Continuously creating training dataset using direct labeling

The advantages of process feedback or direct labeling are great. If our system and our domain are set up in a way that we can do that, it's often the best answer because we have labels that we monitor, and we constantly get new training data. The signals that we get from our labels are really strong. For example, getting signals for click-throughs, if the user clicked or didn't click, is a very strong signal. The Disadvantage is, unfortunately, in many domains for many problems, it's just isn't possible.

Semi-Supervised Labeling

With Semi-supervised labeling, we start with a relatively small dataset that has been labeled by humans. Then, we combine that labeled data with a large amount of unlabeled data, where we infer the labels for the unlabeled data by looking at how the different human-labeled classes are clustered or structured within the feature space.

Then, we train our model using the combination of the two datasets. This method is based on the assumption that different label classes will cluster together or have some recognizable structure within the feature space.

Using Semi-supervised labeling is advantageous as combining labeled and unlabeled data can improve the accuracy of machine learning models. Getting unlabeled data is often very inexpensive since it doesn't require people to assign labels. Often unlabeled data is easily available in large quantities.

Label propagation is an algorithm that assigns labels to previously unlabeled examples. This makes it a semi-supervised algorithm where a subset of the data points has labels. The algorithm propagates the labels to data points without labels. It does that based on the similarity or community structure of the labeled data points and the unlabeled data points. This similarity or structure is used to assign labels to the unlabeled data.

Active Learning

Active learning is a way to intelligently sample our data by selecting the unlabeled points that would bring the most predictive value to our model. This is very helpful in a variety of contexts: first of all, limited data budget. It costs money to label data, especially when we're using human experts to look at the data and assign a label to it.

For example, in healthcare, active learning helps offset this cost and burden. If we have an imbalanced dataset, active learning is an efficient way to select rare classes at the training stage. If standard sampling techniques don't help with improving accuracy and other target metrics, active learning can find ways to achieve or help achieve the desired accuracy.

The active learning strategy works by selecting labeled examples that will best help the model learn. In a fully supervised setting, the training dataset consists of only the examples that we've labeled. In a semi-supervised setting, we leverage those examples to perform some labeled propagation, so that's in addition to active learning.

This is the typical active learning lifecycle: we start with a pool of unlabeled data, and then active learning selects a few examples using intelligent sampling, then we annotate the data with human annotators or by leveraging some other technique. This annotation or labeling procedure generates up labeled training dataset. Finally, we use this labeled data to train a model and make predictions and the cycle goes on.

There are several common active learning sampling techniques. With margin sampling, we assign labels to the most uncertain points based on their distance from the decision boundary. With cluster-based sampling, we select a diverse set of points by using clustering methods over our feature space. With query-by-committee, we train several models and select the data points with the highest disagreement among those models.

Finally, region-based sampling is a relatively new algorithm. At a high level, this algorithm works by dividing the input space into separate regions and running an active learning algorithm in those regions. See this article on Why Active Learning Performance in Labeling Radiology Images Is 90% Effective.

Weak Supervision

Weak supervision is a way to generate labels using information from one or more sources. Usually, these are subject matter experts, and usually, they're designing heuristics. The resulting labels are noisy rather than the deterministic labels that we're used to.

More specifically weak supervision comprises one or more noisy conditional distributions over unlabeled data. And the main objective is to learn a generative model that determines the relevance of each of these noisy sources.

So, we start with unlabeled data for which we don't know the true labels. Then we add to the mix one or more weak supervision sources. These sources are a list of heuristic procedures that implement noisy, and imperfect automated labeling.

Source: Weak Supervision - Snorkel AI

Subject matter experts are the most common sources for designing these heuristics which typically consist of a coverage set and an expected probability of the true label over the coverage set.

By noisy we mean that the label has a certain probability of being correct. The main goal is to learn the trustworthiness of each supervision source, and this is done by training a generative model. The Snorkel framework came out of Stanford in 2016 and is the most widely used framework for implementing weak supervision. It doesn't require manual labeling, so the system programmatically builds and manages the training data set.

Key Takeaways

So let's review a few of the key points of advanced labeling techniques. “Direct” supervised learning requires labeled data, but labeling data is often an expensive, difficult, and slow process.

Semi-supervised learning is one possible way to add labels to unlabeled data. So this method falls between unsupervised learning and supervised learning. It works by combining a small amount of labeled data with a large amount of unlabeled data. And this improves learning accuracy.

Active learning is another advanced labeling method. It relies on intelligent sampling techniques, that select the most important examples of the label and add them to the data set. Active learning improves predictive accuracy while minimizing labeling costs.

The last method we saw, was weak supervision. Weak supervision leverages noisy, limited, or inaccurate label sources inside a supervised learning environment.

Conclusion

In this article, we discussed how data augmentation is helpful for us, including some advanced techniques. Then, we looked into some powerful advanced labeling techniques. First, we looked into direct labeling and its pros and cons, then we saw how semi-supervised labeling works and how we can use it to improve our model's performance by expanding our labeled dataset.

Next, we saw active learning, which uses intelligent sampling to assign labels based on the existing data to unlabeled data and lastly, we arrived at weak supervision, which is a way to programmatically label data, typically by using heuristics that are designed by the subject matter experts.

.png)

_logo%201.svg)

.webp)