Artificial intelligence can potentially transform industries, but the high costs associated with advanced models often present a significant barrier. If you're navigating the landscape of AI, you might be excited about the possibilities yet concerned about the expenses.

Enter GPT-4o Mini, a new model that promises cost-efficient intelligence without compromising on performance. With GPT-4o Mini, OpenAI is making advanced AI capabilities more accessible, enabling businesses and developers to use cutting-edge technology without the hefty price tag.

In this article, we'll look at how GPT-4o Mini can transform your operations by combining strong capabilities with cost savings. We'll cover its features, compare it with other small models and leaderboard leaders, and walk through its real-world applications.

Understanding GPT-4o Mini

GPT-4o Mini is a compact version of OpenAI's GPT-4o model, designed to offer high-level intelligence at a reduced cost. It builds on the advances of GPT-4o and aims to make AI technology more affordable and accessible to a wider audience while maintaining high performance. It replaces GPT-3.5 Turbo, which was previously the smallest model that OpenAI offered.

Key Features and Innovations

- Enhanced Efficiency: GPT-4o Mini has been designed to perform complex tasks while consuming less computational power. This efficiency reduces costs and lowers the environmental impact of running large AI models.

- Scalability: The model is highly scalable, meaning it can be adapted for various applications, from small-scale projects to large enterprise solutions. This flexibility makes it a versatile choice for different needs.

- Improved Training Techniques: By utilising advanced training techniques, GPT-4o Mini achieves a high level of accuracy and reliability. These improvements help ensure the model can handle a wide range of tasks effectively.

- User-Friendly Integration: One of the standout features of GPT-4o Mini is its ease of integration. Whether you're a tech-savvy professional or a business owner with limited technical knowledge, integrating GPT-4o Mini into your existing systems is straightforward.

- Cost-Effectiveness: Perhaps the most significant innovation is its cost-effectiveness. By optimizing resource use and enhancing efficiency, GPT-4o Mini offers a powerful AI solution without the hefty price tag typically associated with such models.

These features make GPT-4o Mini a compelling choice for anyone looking to leverage AI technology in a cost-effective manner.
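To give a sense of what integration can look like in practice, here is a minimal sketch that builds a request for OpenAI's Chat Completions HTTP endpoint using only Python's standard library. The helper names `build_chat_request` and `send`, the system prompt, and the `OPENAI_API_KEY` environment variable are assumptions made for illustration, not part of any official client.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request."""
    payload = {
        "model": model,
        "messages": [
            # Illustrative system prompt; replace with one suited to your use case.
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the reply text (needs network access and a valid key)."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Example (requires a real key and network access):
# print(send(build_chat_request("Summarise our refund policy.", os.environ["OPENAI_API_KEY"])))
```

Keeping request construction separate from sending makes the payload easy to inspect and test before any tokens are spent.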

GPT-4o Mini's Performance Compared to Other Small Models

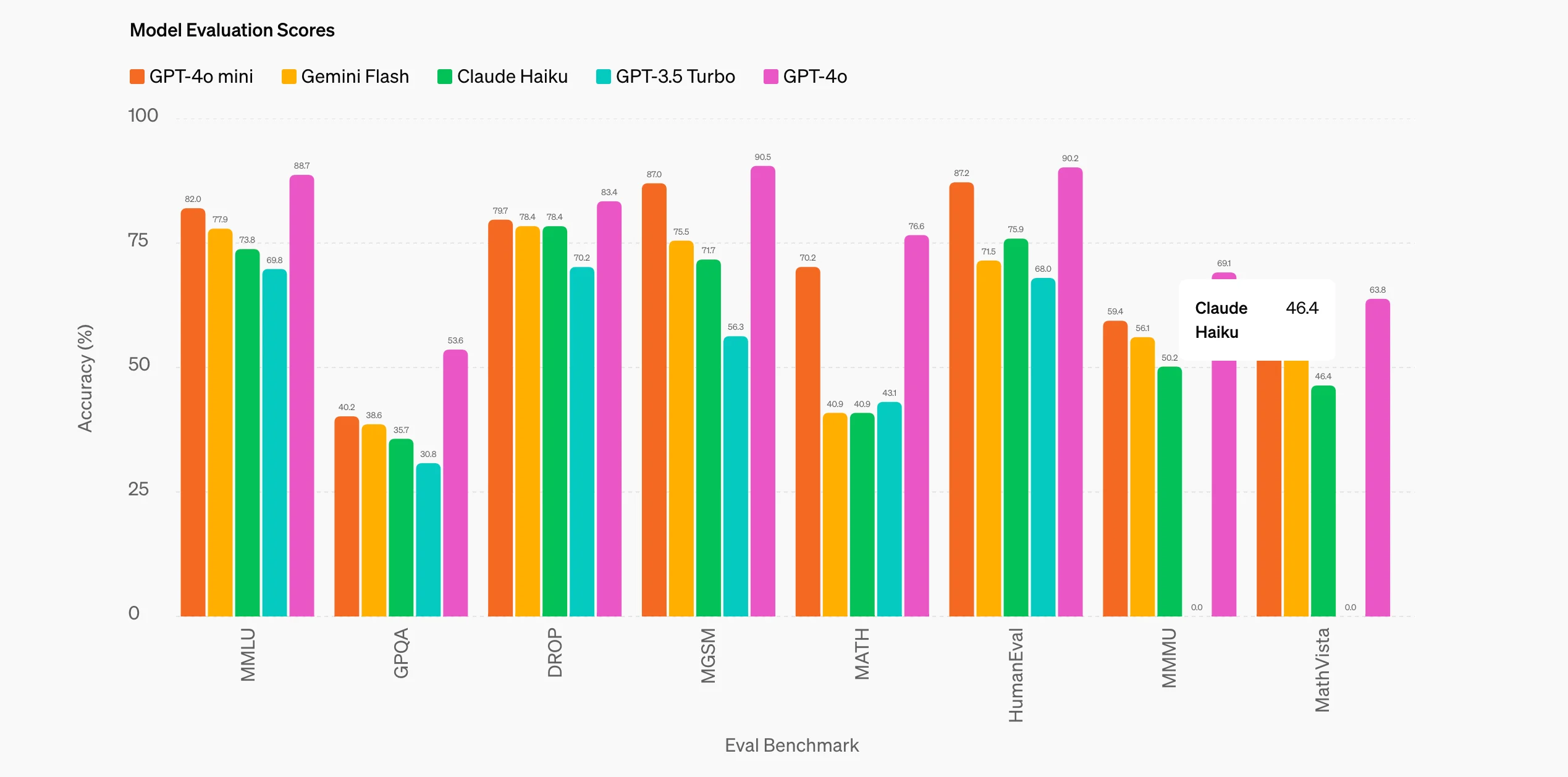

GPT-4o Mini demonstrates impressive capabilities when measured against other small models, showcasing its strength in both textual and multimodal reasoning tasks.

- MMLU (Massive Multitask Language Understanding) Benchmark:

GPT-4o Mini scores 82% on the MMLU benchmark, which assesses a model's ability to understand and reason across a wide range of academic subjects. On this benchmark, GPT-4o Mini outperforms other small models, particularly on tasks that involve complex reasoning. The score indicates that GPT-4o Mini is strong at interpreting questions and generating accurate responses across diverse topics.

- MMMU (Massive Multi-discipline Multimodal Understanding) Benchmark:

When it comes to multimodal reasoning, GPT-4o Mini continues to lead with a score of 59.4% on the MMMU benchmark. This benchmark evaluates a model's ability to handle and integrate information from multiple modalities, such as text and images. GPT-4o Mini's score surpasses that of Gemini Flash (56.1%) and Claude Haiku (50.2%), demonstrating its superior capability in combining visual and textual information to provide coherent and accurate outputs.

- Performance on Academic Benchmarks:

Across various academic benchmarks, GPT-4o Mini consistently outperforms other small models, including GPT-3.5 Turbo. It excels in both textual intelligence—where it processes and generates text with a high degree of understanding—and in multimodal reasoning, where it integrates and analyzes data from different formats (e.g., text and images). This broad capability makes GPT-4o Mini a versatile choice for applications that require robust AI performance on complex, interdisciplinary tasks.

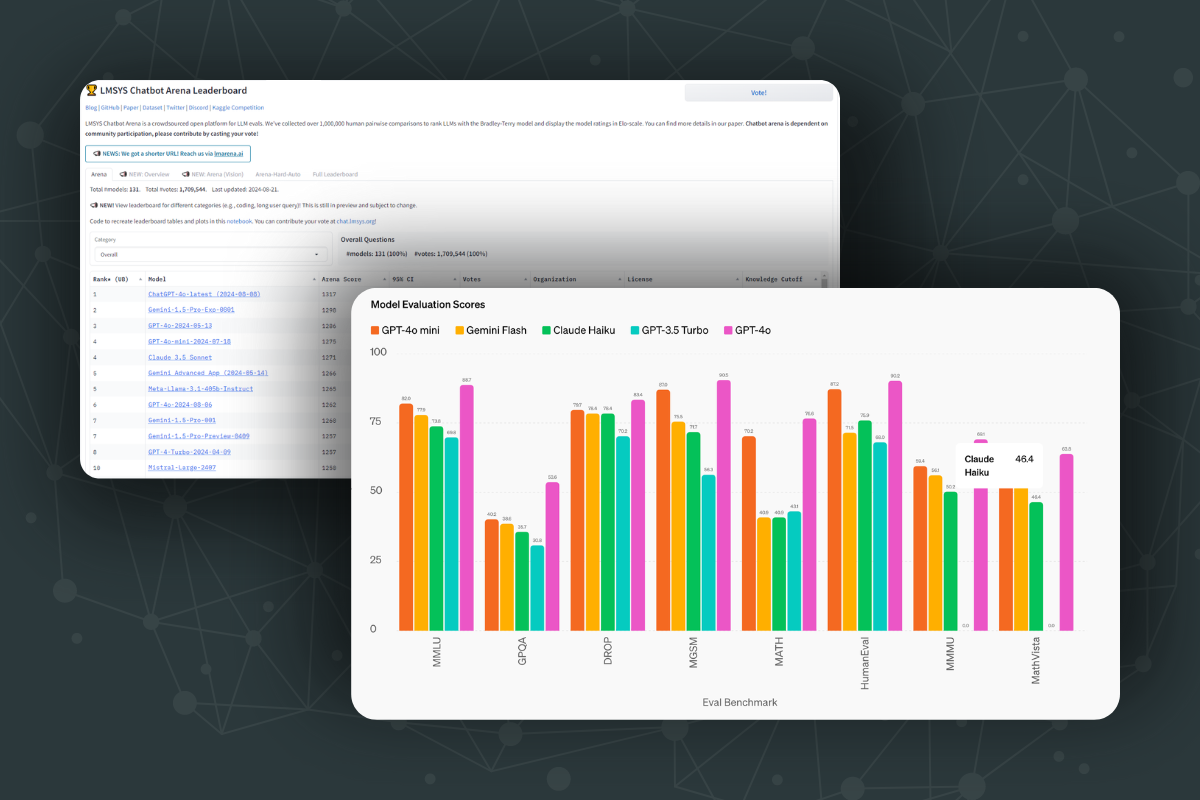

GPT-4o Mini's Performance on the LMSYS Chatbot Arena Leaderboard

The LMSYS Chatbot Arena leaderboard ranks large language models (LLMs) based on over 1.7 million human pairwise comparisons, providing a robust measure of their capabilities across a wide range of tasks. In this highly competitive environment, GPT-4o Mini performs exceptionally well, placing 4th overall among 131 models on the leaderboard as of August 21, 2024.

A few key points:

- High Overall Ranking:

GPT-4o Mini holds the 4th position on the leaderboard, with an Arena Score of 1275. This score reflects its strong performance across various evaluation criteria, placing it just behind more resource-intensive models like ChatGPT-4o-latest and GPT-4o-2024-05-13.

- Competitive Edge:

Despite being a smaller, more cost-efficient member of the GPT-4o series, GPT-4o Mini manages to outperform many other models, including some larger ones. Its high ranking highlights its efficiency in delivering robust AI capabilities while maintaining a smaller computational footprint.

- Comparison with Top Models:

GPT-4o Mini is only 42 points behind the 3rd-ranked GPT-4o-2024-05-13 and 24 points behind Gemini-1.5-Pro-Exp-0801, indicating that it is highly competitive even with the most advanced models on the market. This proximity in scoring demonstrates GPT-4o Mini's effectiveness in various tasks, making it a compelling option for those looking for a balance between performance and cost.
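To put those point gaps in perspective, the sketch below converts a score difference into an expected head-to-head win rate. It assumes Arena Scores behave like conventional Elo ratings (base 10, scale 400); that is an assumption made for illustration, and the function name `expected_win_rate` is hypothetical.

```python
def expected_win_rate(score_gap: float) -> float:
    """Expected win rate of the lower-rated model, given how many points it trails by.

    Assumes the standard Elo relationship: P = 1 / (1 + 10 ** (gap / 400)).
    """
    return 1.0 / (1.0 + 10 ** (score_gap / 400.0))


for gap in (24, 42):
    print(f"{gap} points behind -> wins about {expected_win_rate(gap):.0%} of matchups")
```

Under that assumption, even a 42-point deficit corresponds to winning roughly 44% of pairwise comparisons, which is why a gap of this size counts as close.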

Cost Efficiency of GPT-4o Mini

GPT-4o Mini stands out for its cost efficiency, offering high-quality performance at a competitive price point. Based on Artificial Analysis's results, GPT-4o Mini strikes an excellent balance between quality, speed, and cost, making it an attractive option for users looking to maximize value in their AI investments.

Price Competitiveness

GPT-4o Mini is priced at $0.26 per 1 million tokens (blended at a 3:1 ratio of input to output tokens), which is significantly cheaper than many other models on the market. Specifically, the input price is $0.15 per million tokens and the output price is $0.60 per million tokens. This makes GPT-4o Mini one of the most affordable high-performance models available, providing substantial savings for users who require large-scale token processing.
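The blended figure follows directly from the input and output prices. Here is a small sketch of the arithmetic, along with a cost estimate for a hypothetical workload; the function names and the 30M/10M token volumes are illustrative assumptions, while the prices are the ones quoted above.

```python
INPUT_PRICE = 0.15   # USD per 1M input tokens (from the article)
OUTPUT_PRICE = 0.60  # USD per 1M output tokens (from the article)


def blended_price(input_price: float, output_price: float, ratio: float = 3.0) -> float:
    """Average price per 1M tokens when input:output volume is ratio:1."""
    return (ratio * input_price + output_price) / (ratio + 1)


def workload_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a given number of input and output tokens."""
    return (input_tokens * INPUT_PRICE + output_tokens * OUTPUT_PRICE) / 1_000_000


print(f"Blended: ${blended_price(INPUT_PRICE, OUTPUT_PRICE):.4f} per 1M tokens")
print(f"30M in + 10M out: ${workload_cost(30_000_000, 10_000_000):.2f}")
```

The blended price works out to (3 × 0.15 + 0.60) / 4 = $0.2625, which rounds to the quoted $0.26. If your workload is more output-heavy than 3:1, expect your effective price to sit closer to the output rate.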

When compared to other models, such as Claude 3.5 Sonnet ($6 per 1 million tokens) and Reka Core ($15 per 1 million tokens), GPT-4o Mini offers a far more economical option without a significant compromise on quality or speed.

Quality to Price Ratio

GPT-4o Mini achieves a Quality Index of 71, placing it among the top performers in terms of quality. The model excels in tasks evaluated by the MMLU benchmark, ensuring strong performance across various reasoning and knowledge domains.

When comparing this Quality Index to the price per token, GPT-4o Mini is positioned in the most attractive quadrant on the quality versus price graph. This means that users get excellent quality at a lower cost compared to other models, offering an outstanding price-to-performance ratio.

Speed and Latency

GPT-4o Mini also delivers competitive speed and latency. With an output speed of 114.1 tokens per second, it is faster than many other models, including GPT-4o (Aug 6) and Claude 3 Haiku. Its latency is also low: it takes only 0.42 seconds to deliver the first token, which is better than average.
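These two numbers can be combined into a rough estimate of how long a full response takes. The sketch below assumes a simple model (time to first token, then a constant streaming rate); real-world timings vary with load and prompt length, and the function name is hypothetical.

```python
TTFT_SECONDS = 0.42        # time to first token (from the article)
TOKENS_PER_SECOND = 114.1  # output speed (from the article)


def estimated_response_time(output_tokens: int) -> float:
    """Rough end-to-end time: wait for the first token, then stream the rest."""
    return TTFT_SECONDS + output_tokens / TOKENS_PER_SECOND


for n in (50, 500):
    print(f"{n} tokens: ~{estimated_response_time(n):.1f} s")
```

By this estimate, a short 50-token reply arrives in under a second, while a 500-token answer takes just under five seconds.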

Real-World Applications

GPT-4o Mini's blend of cost-efficiency and robust performance makes it suitable for a wide range of applications across various industries. Here, we'll explore some potential uses and how businesses and professionals can leverage this model to enhance their operations.

1. Customer Service

- Chatbots and Virtual Assistants: GPT-4o Mini can power intelligent chatbots and virtual assistants, providing quick and accurate responses to customer inquiries. This can improve customer satisfaction and reduce the workload on human agents.

- Personalised Customer Interactions: By analysing customer data, GPT-4o Mini can tailor interactions to individual preferences, enhancing the overall customer experience.

2. Content Creation

- Automated Writing: Businesses can use GPT-4o Mini to generate high-quality written content, such as blog posts, articles, and social media updates. This can save time and resources while maintaining a consistent content output.

- Content Optimisation: The model can assist in optimising content for SEO by suggesting relevant keywords and improving readability.

3. Data Analysis

- Predictive Analytics: GPT-4o Mini can analyse large datasets to identify trends and make predictions, helping businesses make data-driven decisions.

- Market Research: By processing and analysing market data, the model can provide insights into consumer behaviour and market trends.

4. Education and Training

- Interactive Learning Tools: Educational institutions can use GPT-4o Mini to develop interactive learning tools that provide personalised instruction and feedback to students.

- Content Development: The model can assist educators in creating customised lesson plans, educational content, and assessments.

5. Healthcare

- Medical Data Analysis: GPT-4o Mini can help healthcare professionals analyse patient data, identify patterns, and make informed decisions about treatment plans.

- Patient Support: Virtual assistants powered by GPT-4o Mini can provide patients with information and support, improving access to healthcare resources.

By applying GPT-4o Mini in these scenarios, businesses can achieve greater efficiency, improve customer experiences, and drive innovation.

Integration and Implementation

Successfully integrating GPT-4o Mini into your operations requires careful attention to fine-tuning and evaluation. By focusing on these aspects, you can ensure the model delivers optimal performance tailored to your specific needs.

Steps for Integrating GPT-4o Mini

- Assess Your Needs: Start by identifying the specific tasks or problems you want GPT-4o Mini to address. Understanding your needs will guide the fine-tuning process, ensuring the model is adapted to your unique requirements.

- Prepare Your Data: The quality of the data you use for fine-tuning directly impacts the model’s performance. Collect and clean a robust dataset that reflects the diversity and complexity of the tasks GPT-4o Mini will handle. This data should be comprehensive, accurate, and relevant to your application.

- Fine-Tuning the Model: Fine-tuning GPT-4o Mini involves adjusting the model’s parameters using your specific dataset. This step is crucial for tailoring the model to your industry, language, or task-specific nuances. During this process, you may need to experiment with different settings to achieve the best results, balancing the model’s general capabilities with your specific needs.

- Developing the Interface: As you fine-tune the model, consider how end-users will interact with it. Design an interface that allows for easy access and interaction with the model’s capabilities. Whether it’s a chatbot, content generation tool, or data analysis platform, the interface should be intuitive and user-friendly.

- Evaluation and Testing: After fine-tuning, rigorously evaluate the model’s performance. Use a variety of test cases to assess how well GPT-4o Mini handles different tasks. Key metrics might include accuracy, response time, and relevance of the outputs. This evaluation phase helps identify any weaknesses or areas for further adjustment.

- Iterative Improvement: Fine-tuning is often an iterative process. Based on the evaluation results, you may need to go back and adjust the model’s parameters or retrain it with additional data. Continuous refinement ensures that GPT-4o Mini remains aligned with your goals and maintains high performance over time.

- Deployment and Monitoring: Once the model is fine-tuned and evaluated to your satisfaction, deploy it into your live environment. Establish monitoring tools to track the model’s ongoing performance, capturing data that can inform future refinements.
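As an illustration of the data preparation step, here is a minimal sketch that converts question/answer pairs into the chat-style JSONL layout OpenAI uses for fine-tuning data (one JSON object with a `messages` list per line) and filters out malformed examples. The helper names and the set of validation checks are assumptions for this sketch; the role list is deliberately simplified.

```python
import json

# Simplified role set for this sketch; real datasets may use additional roles.
ALLOWED_ROLES = {"system", "user", "assistant"}


def to_chat_example(question: str, answer: str, system_prompt: str) -> dict:
    """Wrap one question/answer pair in the chat-style fine-tuning format."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def validate_example(example: dict) -> list[str]:
    """Return a list of problems found in one example (empty means it looks fine)."""
    messages = example.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, message in enumerate(messages):
        if not isinstance(message, dict):
            problems.append(f"message {i}: not an object")
            continue
        if message.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {i}: unexpected role {message.get('role')!r}")
        content = message.get("content")
        if not isinstance(content, str) or not content.strip():
            problems.append(f"message {i}: empty content")
    last = messages[-1]
    if not (isinstance(last, dict) and last.get("role") == "assistant"):
        problems.append("last message should be the assistant's target reply")
    return problems


def write_jsonl(examples: list[dict], path: str) -> int:
    """Write only the valid examples, one JSON object per line; return how many were written."""
    written = 0
    with open(path, "w", encoding="utf-8") as f:
        for example in examples:
            if not validate_example(example):
                f.write(json.dumps(example, ensure_ascii=False) + "\n")
                written += 1
    return written
```

Running a validation pass like this before uploading a dataset catches empty or mislabelled examples early, when they are cheapest to fix.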

Addressing Common Concerns and Challenges

- Data Quality and Relevance: Ensure that the data used for fine-tuning is of the highest quality. Poor data can lead to suboptimal performance, so invest time in data preparation and validation.

- Overfitting: Be cautious of overfitting, where the model becomes too tailored to the training data and performs poorly on new, unseen data. Regularly test the model with fresh datasets to ensure it remains versatile and effective in real-world applications.

- Scalability of Fine-Tuning: Fine-tuning on a large scale can be resource-intensive. Plan for the necessary computational resources and consider incremental or transfer learning techniques to reduce the burden.

- Continuous Evaluation: AI models can drift over time as data changes. Implement a process for continuous evaluation and updates to keep GPT-4o Mini aligned with your evolving needs and industry trends.

- User Training and Feedback: Educate your team on how to utilise the fine-tuned model effectively. Gather user feedback to refine the model further, ensuring it meets the practical needs of those interacting with it.
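One common way to watch for overfitting is to hold back part of your dataset and compare performance on it with performance on the training portion. The following is a minimal sketch of that idea; the function names, the 20% default, and the fixed seed are illustrative assumptions.

```python
import random


def train_validation_split(examples: list, validation_fraction: float = 0.2, seed: int = 0):
    """Shuffle a copy of the data and hold out a fraction for validation."""
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_validation = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_validation:], shuffled[:n_validation]


def overfitting_gap(train_accuracy: float, validation_accuracy: float) -> float:
    """Positive gap = the model does better on data it was tuned on than on held-out data."""
    return train_accuracy - validation_accuracy


train, validation = train_validation_split(list(range(10)))
print(len(train), "training examples,", len(validation), "held out")
```

A gap that keeps widening as you fine-tune for longer is a signal to stop earlier or to add more varied data.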

Potential Limitations and Considerations

While GPT-4o Mini offers a range of benefits, it's important to acknowledge that no AI model is without its challenges. Understanding these potential limitations allows you to proactively address them through ongoing evaluation and fine-tuning, ensuring that GPT-4o Mini continues to perform at its best.

Potential Limitations

- Performance Ceiling: GPT-4o Mini is designed to balance cost and performance, which means it may not always match the output of more resource-intensive models, particularly for highly complex tasks. This can be a limitation if your application demands the highest level of AI capabilities.

- Data Dependency: The effectiveness of GPT-4o Mini relies heavily on the quality of data it's trained on. Poor or biased data can lead to inaccurate results, limiting the model’s utility in real-world applications.

- Integration Challenges: Even with a user-friendly design, integrating GPT-4o Mini into specialised or legacy systems might require significant technical expertise and customisation, posing challenges during implementation.

- Maintenance and Drift: AI models like GPT-4o Mini can experience performance drift over time as data evolves or new requirements emerge. Without regular updates and maintenance, the model may become less effective.

Improvement Through Evaluation and Fine-Tuning

To mitigate these limitations and enhance GPT-4o Mini’s performance, continuous evaluation and fine-tuning are essential. Here's how these processes can improve the model's effectiveness:

- Identifying Weaknesses Through Evaluation: Regular evaluation is key to identifying where GPT-4o Mini may be underperforming. By assessing the model’s outputs against a diverse set of test cases, you can pinpoint specific weaknesses, such as inaccuracies, slow response times, or failure to generalise across different tasks.

- Focused Fine-Tuning: Once weaknesses are identified, you can fine-tune GPT-4o Mini to address these issues. This might involve retraining the model with more comprehensive or updated data, adjusting parameters to better handle complex tasks, or optimising resource allocation for improved performance.

- Adaptive Learning: Implementing a feedback loop where the model learns from new data and user interactions can help GPT-4o Mini adapt to changing needs. Continuous learning and adaptation ensure that the model remains relevant and effective as your business grows or as market conditions evolve.

- Scalable Fine-Tuning: As your use of GPT-4o Mini expands, scalable fine-tuning techniques can be employed to manage the complexity of new tasks without requiring extensive computational resources. This ensures that the model remains cost-effective even as it evolves to meet new demands.

- Ongoing Monitoring and Adjustment: Post-deployment, continuous monitoring of the model’s performance allows you to make incremental adjustments as needed. This proactive approach helps in maintaining optimal performance and addressing any emerging limitations promptly.
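The evaluation and monitoring ideas above can be sketched in a few lines. This example assumes a hypothetical `model_fn` callable that takes a prompt and returns text (for instance, a thin wrapper around your deployed model) and uses exact-match accuracy, which is only one of many possible metrics.

```python
import time
from collections import deque
from typing import Callable


def evaluate(model_fn: Callable[[str], str], test_cases: list[tuple[str, str]]) -> dict:
    """Run each prompt through model_fn; report exact-match accuracy and mean latency."""
    if not test_cases:
        raise ValueError("test_cases must not be empty")
    correct = 0
    total_seconds = 0.0
    for prompt, expected in test_cases:
        start = time.perf_counter()
        output = model_fn(prompt)
        total_seconds += time.perf_counter() - start
        # Case-insensitive exact match; swap in a task-appropriate comparison as needed.
        correct += int(output.strip().lower() == expected.strip().lower())
    n = len(test_cases)
    return {"accuracy": correct / n, "mean_latency_s": total_seconds / n}


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` graded responses and flag drops."""

    def __init__(self, window: int = 100, alert_below: float = 0.9):
        self.results = deque(maxlen=window)
        self.alert_below = alert_below

    def record(self, was_correct: bool) -> None:
        self.results.append(bool(was_correct))

    @property
    def accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 1.0

    def needs_attention(self) -> bool:
        # Only alert once the window is full, to avoid noise from the first few samples.
        return len(self.results) == self.results.maxlen and self.accuracy < self.alert_below
```

You might run `evaluate` on a fixed test set before each deployment and feed graded production samples into `RollingAccuracyMonitor` afterwards, treating `needs_attention()` as a prompt to investigate drift rather than an automatic retraining trigger.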

Conclusion

GPT-4o Mini represents a significant advancement in the field of AI, offering a powerful and cost-efficient solution that makes cutting-edge technology accessible to a broader audience. Its innovative features, coupled with a focus on efficiency and affordability, make it a compelling choice for various applications across industries.

In this article, you’ve seen the capabilities and benefits of GPT-4o Mini, how it compares to other leading models, and practical steps for integrating it into your systems. While there are potential limitations, being aware of them and implementing mitigation strategies can help you harness the full potential of GPT-4o Mini.

Unlock the full potential of GPT-4o Mini for your business today. Visit our LLM alignment platform to explore tailored solutions and start optimizing your AI strategy.
