LCL Bank Automates KYC with AI Document Extraction

Learn how France's LCL Bank transformed regulatory compliance, processing millions of ID documents and DPE forms in weeks instead of months with AI automation.

Learn how our customers rely on Kili Technology to build high-quality datasets at scale.


Discover how Enabled Intelligence achieved 95%+ accuracy processing millions of geospatial labels for military and commercial AI. See why Kili Technology beat 35+ platforms for mission-critical applications.

How France's leading mutual insurer transformed 1.5 million unstructured customer comments into strategic business intelligence, deploying AI-powered analysis across 9+ projects and 60+ users to unlock deeper customer satisfaction insights.

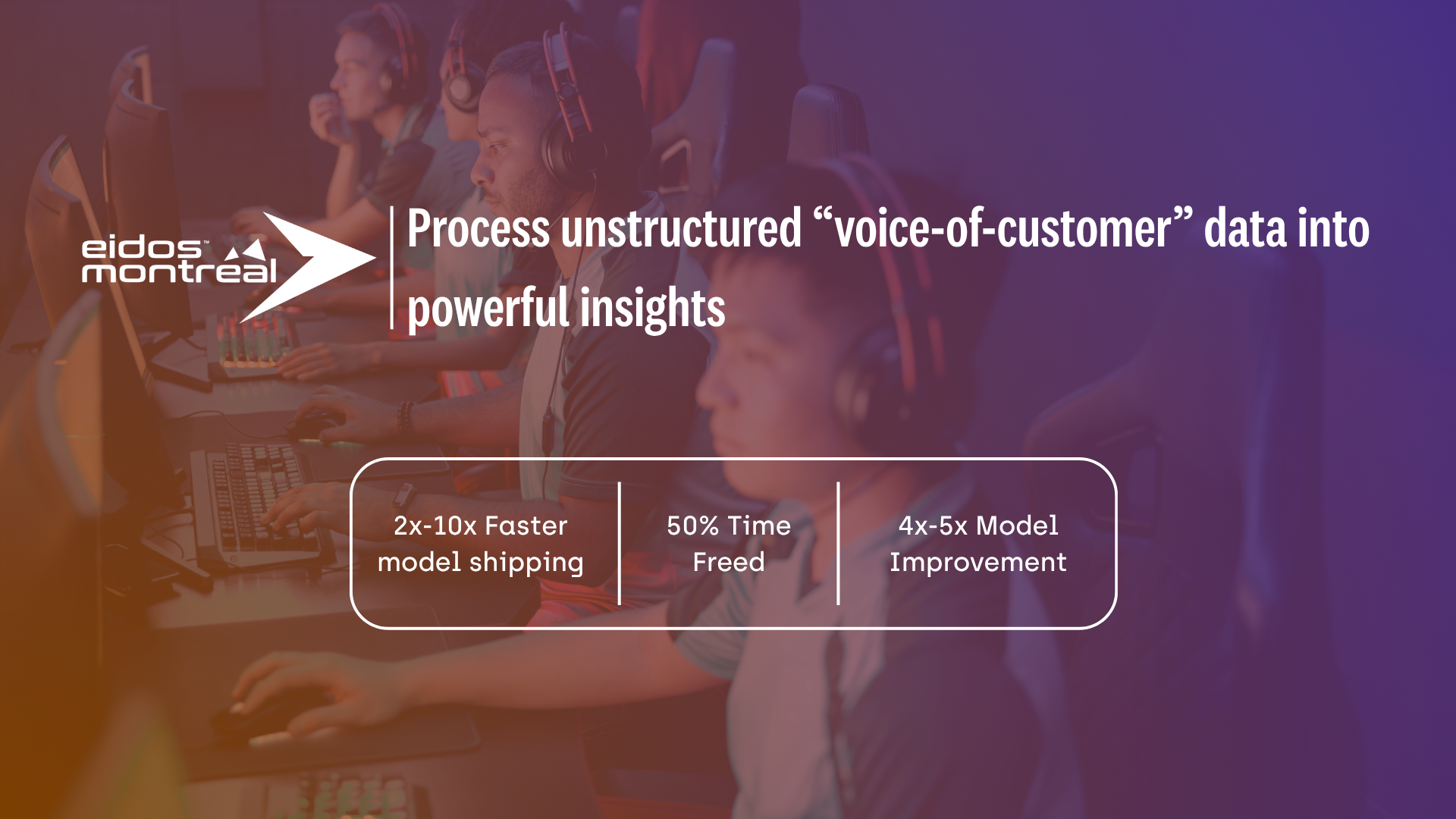

Eidos-Montréal, a leading video game studio, wanted to better understand how players and critics perceived its games. The Data Science and Analytics team set out to turn thousands of customer reviews and articles into actionable insights to guide both business and game design decisions. To achieve this, they needed a scalable, reliable way to annotate large volumes of unstructured text data, and found the right partner in Kili Technology.

How social media giant Jellysmack transformed its NLP and video ML capabilities, shipping models 10x faster while reducing project management overhead by 50% to support 300+ content creators across 11 countries.

How a leading AI research organization partnered with Kili Technology to formalize approximately 2,500 mathematical problems across AMC, AIME, and AMO difficulty levels, enabling breakthrough advances in automated theorem proving with contributions from 68 international researchers.